Agent-as-a-Judge is an automated evaluation system in which agent systems are used to evaluate other agent systems. It can significantly reduce evaluation time and cost, and it provides continuous feedback signals that promote self-improvement of the agent system being evaluated. It is used in AI development tasks, especially code generation. The project is open source, which makes it easy for developers to extend and customize it.

Target audience:

Suited to AI developers, researchers, and enterprise teams, especially those who need to evaluate projects and get feedback quickly and efficiently. It can help them save time and reduce costs in complex development environments while improving code quality and project success rates.

Example usage scenarios:

Use Agent-as-a-Judge to evaluate code generation tasks to improve development efficiency.

Use it in AI teaching to evaluate student projects automatically and provide immediate feedback.

Integrate Agent-as-a-Judge into internal development processes for efficient code quality evaluation.

Product Features:

Automatic evaluation: significantly reduces assessment time and cost.

Reward signals: continuous feedback promotes self-improvement of the agent system.

Supports calls to multiple large language models (LLMs).

User-friendly command-line interface for quick access.

Highly extensible and adaptable to different development needs.

Open source, supporting community contributions and improvements.

Integrates multiple evaluation criteria to improve evaluation accuracy.

Compatible with multiple development platforms.
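To make the reward-signal feature more concrete, here is a minimal sketch of how per-requirement verdicts from a judge could be collapsed into a single score. It is not taken from the project's code: the names `Verdict` and `reward_from_verdicts` are hypothetical, and scoring by the fraction of satisfied requirements is an assumption chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Verdict:
    """One judged requirement (hypothetical structure, not the project's API)."""
    requirement: str
    satisfied: bool


def reward_from_verdicts(verdicts: list[Verdict]) -> float:
    """Return the fraction of satisfied requirements, or 0.0 if there are none."""
    if not verdicts:
        return 0.0
    return sum(v.satisfied for v in verdicts) / len(verdicts)


if __name__ == "__main__":
    # Illustrative verdicts only; a real judge would produce these.
    example = [
        Verdict("Loads the dataset", True),
        Verdict("Trains the model", True),
        Verdict("Saves a results plot", False),
        Verdict("Writes a report", False),
    ]
    print(reward_from_verdicts(example))  # 2 of 4 satisfied -> 0.5
```

A score like this could be fed back to the agent after each run, which is one way the continuous feedback described above might be realized.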

Usage tutorial:

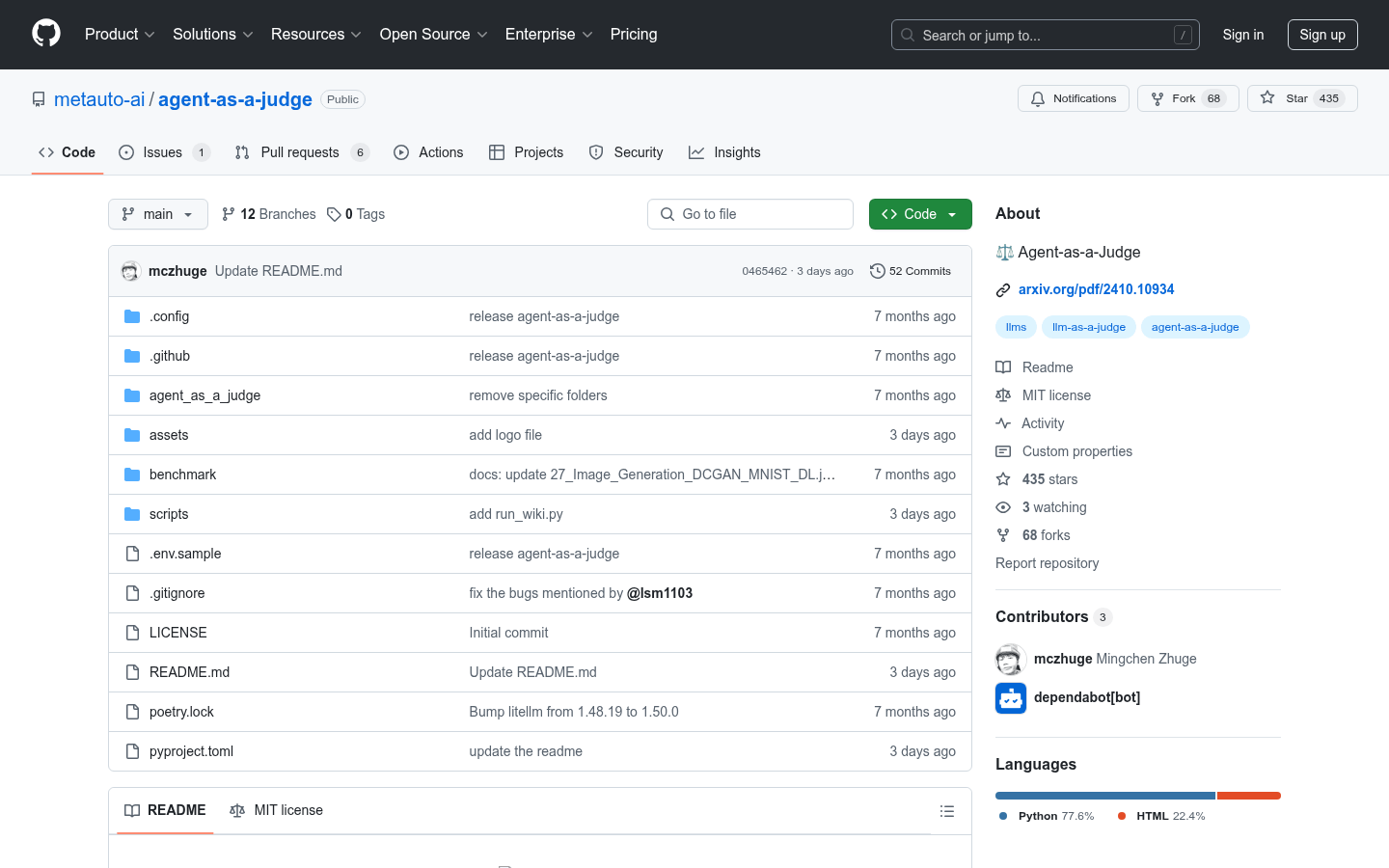

Clone the repository: git clone https://github.com/metauto-ai/Agent-as-a-Judge.git

Create and activate a virtual environment: conda create -n aaaj python=3.11 && conda activate aaaj

Install dependencies: pip install poetry && poetry install

Set environment variables: rename .env.sample to .env and fill in the required API settings.

Run the sample script to test the setup: PYTHONPATH=. python scripts/run_ask.py --workspace YOUR_WORKSPACE --question 'YOUR_QUESTION'
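If you want to ask several questions about the same workspace, the sample script can be driven from a small Python wrapper. The sketch below is an illustration rather than part of the project: `build_ask_command` and `ask` are hypothetical helper names, and it assumes the wrapper is run from the repository root with the environment from the previous steps activated. It uses only the `--workspace` and `--question` flags shown in the command above.

```python
import os
import subprocess
import sys


def build_ask_command(workspace: str, question: str) -> list[str]:
    """Build the argument list for scripts/run_ask.py (hypothetical helper)."""
    return [
        sys.executable,
        "scripts/run_ask.py",
        "--workspace", workspace,
        "--question", question,
    ]


def ask(workspace: str, question: str, repo_root: str = ".") -> int:
    """Run one question from the repository root and return the exit code."""
    # Point PYTHONPATH at the repository root, as the sample command does.
    env = dict(os.environ, PYTHONPATH=".")
    result = subprocess.run(
        build_ask_command(workspace, question), cwd=repo_root, env=env
    )
    return result.returncode


if __name__ == "__main__" and len(sys.argv) >= 3:
    # Usage: python ask_many.py YOUR_WORKSPACE 'QUESTION 1' 'QUESTION 2' ...
    workspace = sys.argv[1]
    for question in sys.argv[2:]:
        code = ask(workspace, question)
        print(f"{question!r} -> exit code {code}")
```

Passing the arguments as a list avoids shell quoting problems when a question contains spaces or quotation marks.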