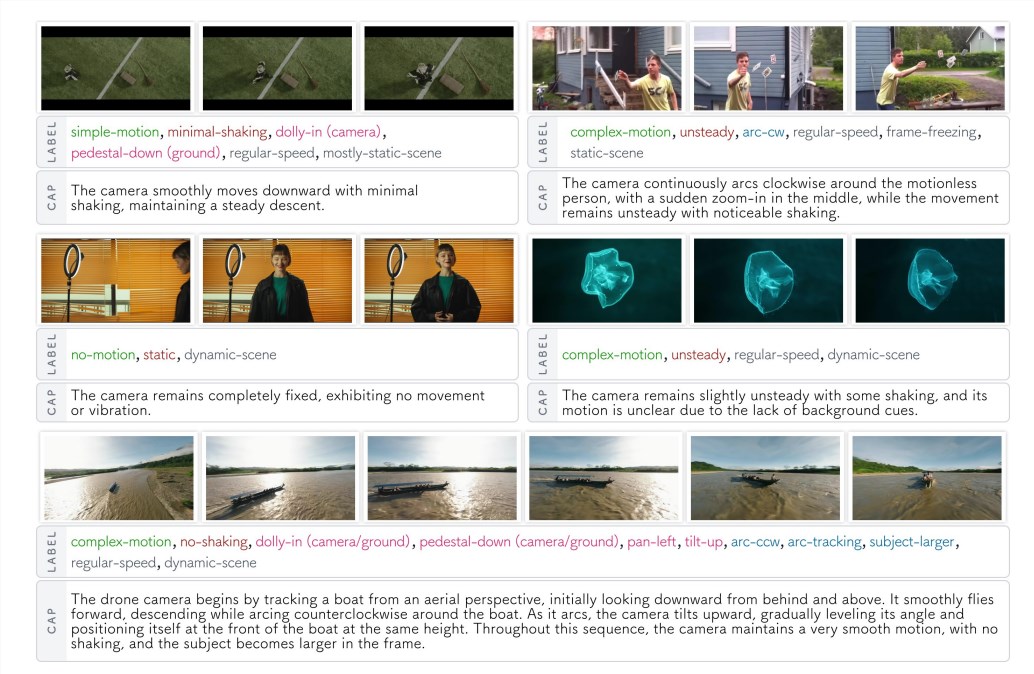

CameraBench is a model for analyzing camera movement in videos, with the goal of understanding camera motion patterns directly from footage. Its main strength is the use of generative vision-language models to classify camera motion primitives and to perform video-text retrieval. Compared with traditional Structure-from-Motion (SfM) and Simultaneous Localization and Mapping (SLAM) approaches, the model is notably better at capturing scene semantics. It is open source, is aimed at researchers and developers, and improved versions are planned.

Target audience:

CameraBench is suited to researchers, developers, and video analysis specialists, particularly those working in computer vision and image processing. These users can apply CameraBench to video analysis and camera motion understanding to support research and project development in related fields.

Example usage scenarios:

Use CameraBench to analyze the motion patterns of the camera in a dance video.

Use CameraBench in teaching to help students understand the relationship between camera movement and the scene.

Use CameraBench to add camera motion recognition to video editing software.

Product Features:

Classifies camera motion in videos.

Supports video-text retrieval and description generation.

Improves performance significantly after supervised fine-tuning on a large amount of labeled data.

Integrates multiple evaluation metrics, including VQAScore.

Handles a variety of video analysis tasks, such as recognizing camera motion primitives.

Can be used through HuggingFace's model interface.
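To make the VQAScore item more concrete, here is a minimal sketch in Python of a VQAScore-style score: the probability that a vision-language model answers "yes" to a question about the video. It assumes the model exposes one logit for "yes" and one for "no", and it simplifies the score to a two-way softmax over those two logits. The question template and the function names `build_question` and `yes_probability` are illustrative, not CameraBench's actual API.

```python
import math


def build_question(caption: str) -> str:
    """Turn a camera motion description into a yes/no question.

    The wording is an illustrative template, not the one CameraBench uses.
    """
    return f'Does this video show "{caption}"? Please answer yes or no.'


def yes_probability(yes_logit: float, no_logit: float) -> float:
    """Two-way softmax over the 'yes' and 'no' logits.

    Equivalent to sigmoid(yes_logit - no_logit), written so that
    math.exp never receives a large positive argument.
    """
    diff = yes_logit - no_logit
    if diff >= 0:
        return 1.0 / (1.0 + math.exp(-diff))
    e = math.exp(diff)
    return e / (1.0 + e)


def shows_motion(yes_logit: float, no_logit: float, threshold: float = 0.5) -> bool:
    """Binary decision: does the video show the described motion?"""
    return yes_probability(yes_logit, no_logit) >= threshold


if __name__ == "__main__":
    # The logits below are made-up numbers for demonstration only.
    print(build_question("the camera pans left"))
    print(shows_motion(yes_logit=2.0, no_logit=-1.0))
```

In a real pipeline the two logits would come from the model's output for the question and the video frames; here they are passed in directly so the sketch stays self-contained.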

Usage tutorial:

Download the test video data.

Get the tags and descriptions of the video.

Load the CameraBench model.

Pass the video and text inputs to the model for camera motion analysis.

View the model output, including motion classification and description.
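The steps above can be sketched in Python as a small ranking loop: given a video and a set of candidate motion descriptions, score each video-text pair and sort by score. Loading the actual model is left out, because the exact checkpoint name and loading call are not stated here. Instead the sketch accepts any scoring function, and `toy_score` is a hypothetical stand-in that only compares words in the file name with words in the description. The names `rank_texts`, `toy_score`, and the file name `dance_pan_left.mp4` are assumptions for illustration.

```python
from pathlib import Path
from typing import Callable, List, Tuple

# A scorer takes (video_path, text) and returns a higher-is-better score.
ScoreFn = Callable[[str, str], float]


def rank_texts(video_path: str, texts: List[str], score_fn: ScoreFn) -> List[Tuple[str, float]]:
    """Score every candidate description against the video, best first."""
    scored = [(text, score_fn(video_path, text)) for text in texts]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def toy_score(video_path: str, text: str) -> float:
    """Hypothetical stand-in for a real model.

    Returns the fraction of words in `text` that also appear in the
    underscore-separated file name. It never reads the video itself.
    """
    name_words = set(Path(video_path).stem.lower().split("_"))
    words = text.lower().split()
    if not words:
        return 0.0
    return sum(word in name_words for word in words) / len(words)


if __name__ == "__main__":
    candidates = ["pan left", "zoom in", "tilt up"]
    ranking = rank_texts("dance_pan_left.mp4", candidates, toy_score)
    for text, score in ranking:
        print(f"{score:.2f}  {text}")
    print("predicted motion:", ranking[0][0])
```

Swapping `toy_score` for a function that calls the loaded CameraBench model would turn the same loop into motion classification (pick the top label) or video-text retrieval (keep the full ranking).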