What is StackBlitz?

StackBlitz is a web-based IDE tailored for the JavaScript ecosystem. It uses WebContainers, powered by WebAssembly, to create instant Node.js environments directly in your browser. Because everything runs inside the browser, environments start quickly and code execution stays within the browser's security sandbox.

---

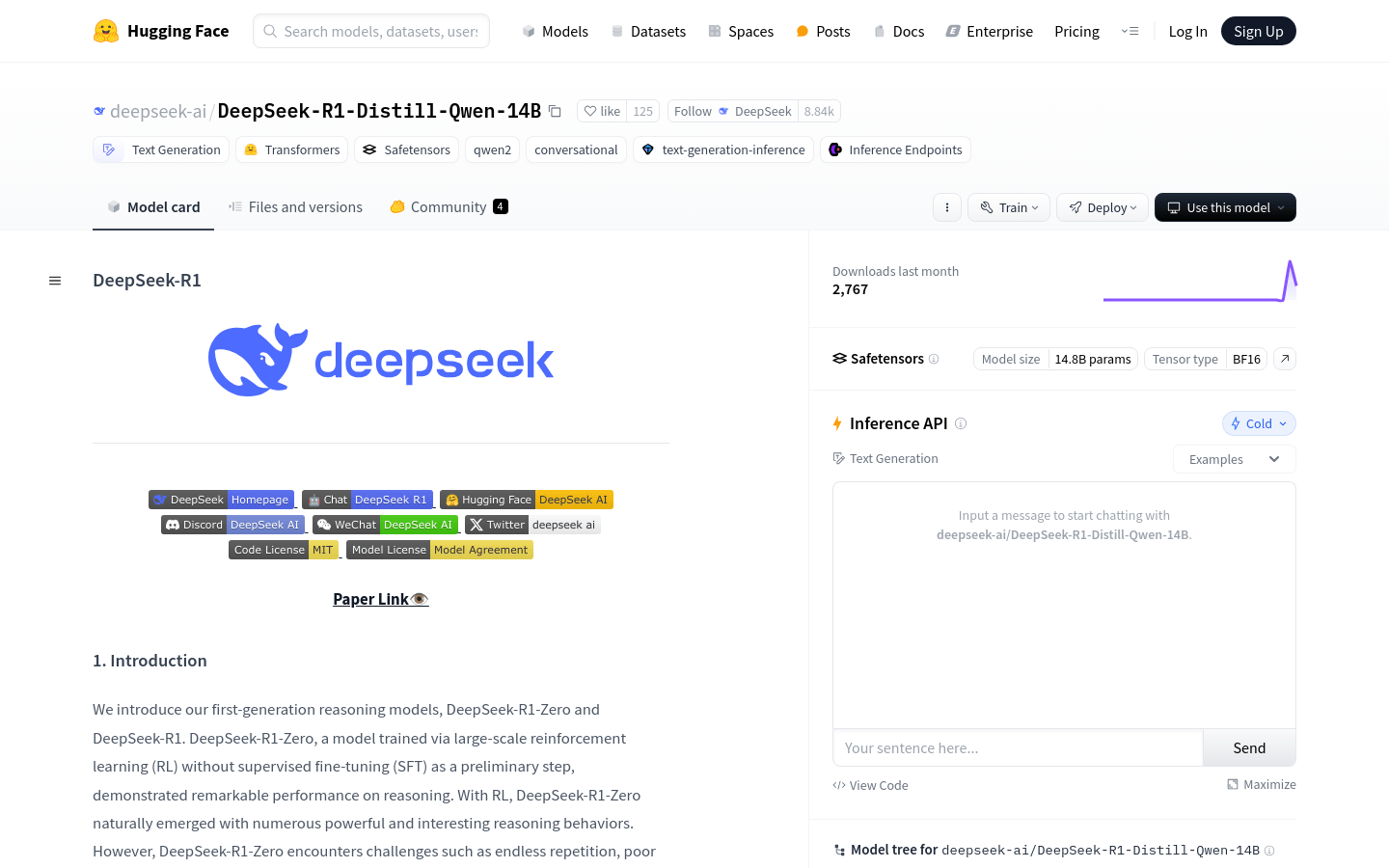

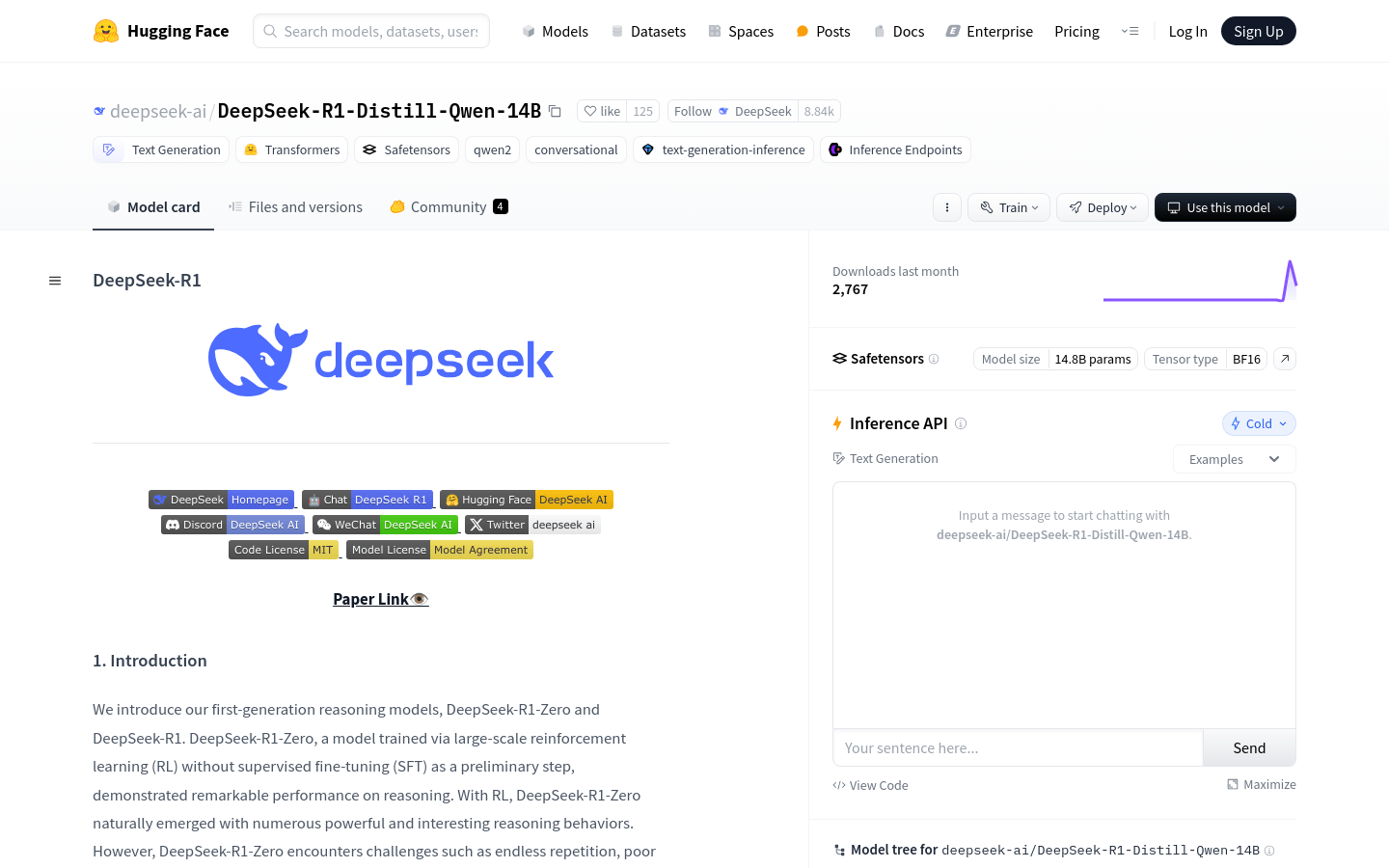

DeepSeek-R1-Distill-Qwen-14B

Introduction

DeepSeek-R1-Distill-Qwen-14B is a distilled model developed by the DeepSeek team on top of Qwen2.5-14B. It is aimed at reasoning and text generation tasks. Through large-scale reinforcement learning and data distillation, the model improves reasoning capability and generation quality while reducing the demand for computing resources.

Features

High performance: Provides strong reasoning and generation capabilities.

Low resource consumption: Reduces the need for computing resources.

Wide applicability: Suitable for a variety of text generation tasks, such as dialogue, code generation, and mathematical reasoning.

Reinforcement learning optimization: Uses reinforcement learning to optimize model performance.

Distilled onto a Qwen2.5-14B base: Reported to outperform comparable models of similar size.

Large generation length: Supports a maximum generation length of 32,768 tokens for complex tasks.

OpenAI-compatible API: Provides an interface that is easy to integrate and use.
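As a rough illustration of the OpenAI-compatible interface, the sketch below builds a chat completions request using only the Python standard library. It assumes the model is already being served behind an OpenAI-compatible endpoint; the base URL `http://localhost:8000/v1`, the default temperature of 0.6, and the helper names `build_chat_request` and `send_chat_request` are illustrative assumptions, not part of any official client.

```python
import json
import urllib.request

MODEL_ID = "deepseek-ai/DeepSeek-R1-Distill-Qwen-14B"  # Hugging Face repository ID
BASE_URL = "http://localhost:8000/v1"  # assumption: a locally running OpenAI-compatible server
MAX_GENERATION_TOKENS = 32_768  # upper bound taken from the feature list above


def build_chat_request(prompt, temperature=0.6, max_tokens=1024):
    """Return the JSON body for a chat completions call (hypothetical helper)."""
    if not 0 < max_tokens <= MAX_GENERATION_TOKENS:
        raise ValueError(f"max_tokens must be between 1 and {MAX_GENERATION_TOKENS}")
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def send_chat_request(body, base_url=BASE_URL):
    """POST the body and return the first choice's text. Requires a running server."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

With a server running, `send_chat_request(build_chat_request("What is 17 * 23?"))` would return the generated answer as a string. Keeping the request builder separate from the network call makes the parameters easy to inspect before anything is sent.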

Target audience

Suitable for developers, researchers, and enterprise users who need efficient reasoning and text generation, especially in scenarios with demanding performance and resource requirements, such as natural language processing, artificial intelligence research, and commercial applications.

Example use cases

Tackle complex reasoning tasks in academic research, such as mathematical problem solving.

Provide enterprises with intelligent customer service solutions that generate high-quality conversation content.

Generate code snippets and logic suggestions in programming assistants.

Usage tutorial

1. Visit the official Hugging Face page and download the DeepSeek-R1-Distill-Qwen-14B model file.

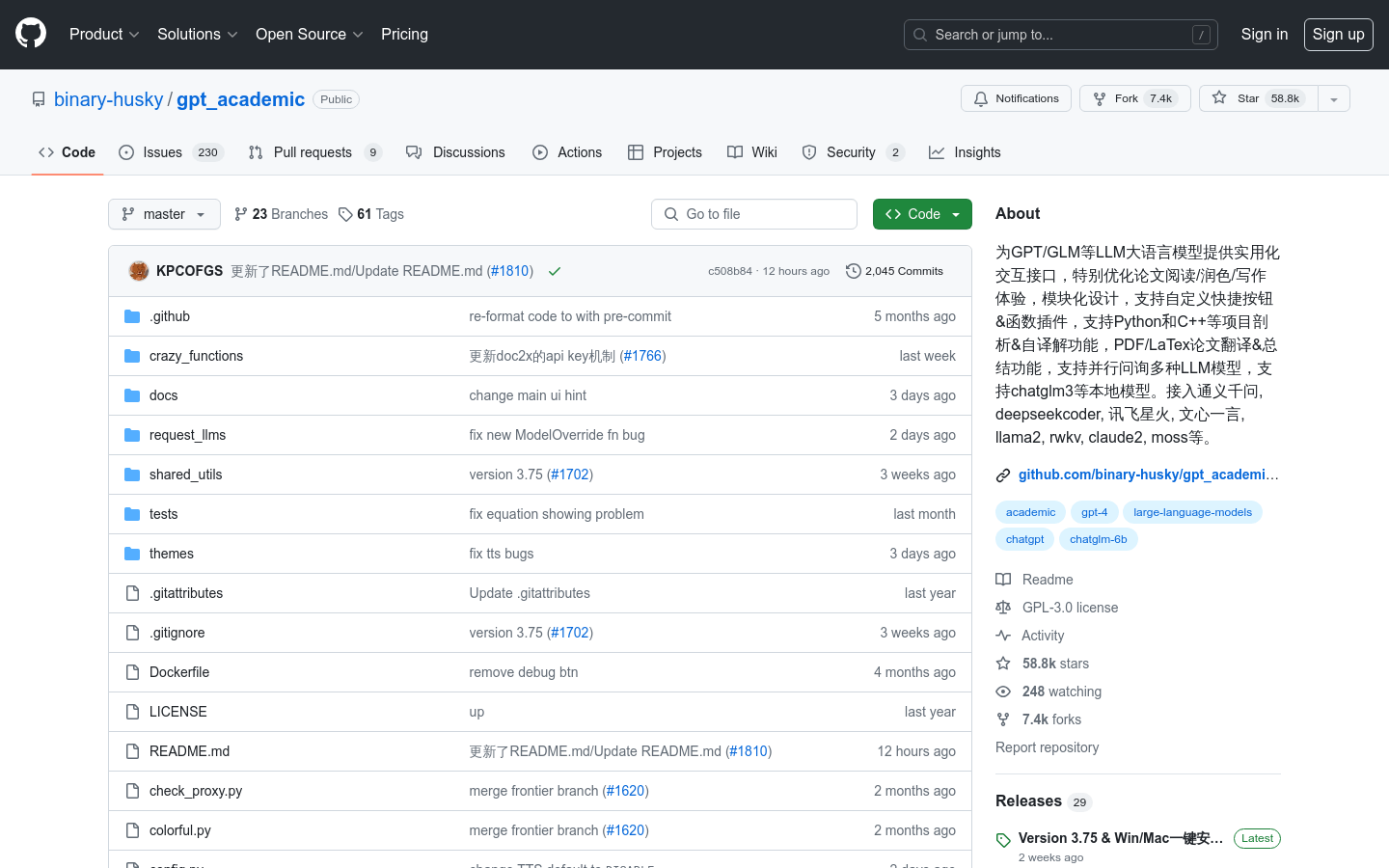

2. Install the necessary dependency libraries, such as Transformers and Safetensors.

3. Use vLLM or another inference framework to load the model and set appropriate parameters (such as temperature and maximum length).

4. Enter a task-related prompt, and the model will generate the corresponding text output.

5. Adjust the model configuration as needed to improve the generated output.
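Steps 3 to 5 can be sketched with vLLM's offline Python interface. This is a minimal sketch, not a tuned setup: it assumes vLLM is installed and that the machine has enough GPU memory for a 14B model. The helper names `sampling_settings`, `load_model`, and `generate`, as well as the default values for temperature, top-p, and output length, are illustrative assumptions.

```python
MODEL_ID = "deepseek-ai/DeepSeek-R1-Distill-Qwen-14B"  # Hugging Face repository ID
MAX_GENERATION_TOKENS = 32_768  # maximum generation length from the feature list


def sampling_settings(temperature=0.6, top_p=0.95, max_tokens=2048):
    """Validate and collect the generation parameters from step 3 (hypothetical helper)."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in the range (0, 1]")
    if not 0 < max_tokens <= MAX_GENERATION_TOKENS:
        raise ValueError(f"max_tokens must be between 1 and {MAX_GENERATION_TOKENS}")
    return {"temperature": temperature, "top_p": top_p, "max_tokens": max_tokens}


def load_model():
    """Load the model once; vLLM fetches the weights from Hugging Face if needed."""
    # Imported lazily so this file can still be loaded on machines without vLLM.
    from vllm import LLM

    # max_model_len limits the context window (prompt plus generated tokens).
    return LLM(model=MODEL_ID, max_model_len=MAX_GENERATION_TOKENS)


def generate(llm, prompt, **overrides):
    """Run one prompt (step 4); pass overrides to adjust the settings (step 5)."""
    from vllm import SamplingParams

    params = SamplingParams(**sampling_settings(**overrides))
    outputs = llm.generate([prompt], params)
    return outputs[0].outputs[0].text
```

A typical session would call `llm = load_model()` once and then `generate(llm, "Prove that the sum of two even numbers is even.", temperature=0.5, max_tokens=4096)` for each task, changing the keyword arguments between runs to see how they affect the output.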