FastVLM is an efficient vision encoding approach designed for vision-language models. Its FastViTHD hybrid vision encoder reduces both the encoding time for high-resolution images and the number of visual tokens it outputs, which improves speed while maintaining accuracy. FastVLM is aimed at developers who need strong vision-language processing across a variety of application scenarios, especially on mobile devices that require fast response.
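To make the link between token count and image resolution concrete, here is a minimal sketch. It assumes an encoder that emits one token per `stride` x `stride` pixel area. The function name and the stride values are illustrative assumptions, not FastViTHD's published configuration.

```python
def visual_token_count(height: int, width: int, stride: int) -> int:
    """Return how many visual tokens an encoder emits when each token
    covers a stride x stride pixel area of the input image."""
    if stride <= 0:
        raise ValueError("stride must be positive")
    if height % stride or width % stride:
        raise ValueError("image size must be divisible by the stride")
    return (height // stride) * (width // stride)


# Illustrative strides only (assumed values, not taken from FastVLM).
for stride in (16, 32, 64):
    tokens = visual_token_count(1024, 1024, stride)
    print(f"stride={stride}: {tokens} tokens")
```

Doubling the effective stride cuts the token count by a factor of four, so an encoder that downsamples more aggressively hands the language model a much shorter sequence at the same input resolution.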

Target audience:

FastVLM suits researchers and developers working in artificial intelligence, computer vision, and natural language processing, especially those who want efficient image-and-text interaction on mobile devices. Its efficiency and flexibility make it a good fit for rapid, iterative development.

Example usage scenarios:

Quickly identifying and describing image content in mobile applications.

Powering real-time image-and-text interaction features, such as smart customer service.

Combining image understanding with language description in educational software.

Product Features:

FastViTHD hybrid vision encoder: reduces the number of output tokens and improves encoding efficiency.

Significantly shortens Time-to-First-Token (TTFT) and improves user experience.

Supports multiple variants to adapt to different application requirements and hardware configurations.

Provides inference that runs on mobile devices, expanding the range of usage scenarios.

Includes detailed instructions and model export tools for easy development.
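Time-to-First-Token, mentioned in the features above, is the delay between submitting a request and receiving the first generated token. It covers both image encoding and the language model's prefill. The sketch below shows one way to measure it for any streaming generator. The `time_to_first_token` helper and the `fake_model` stand-in are hypothetical and are not part of FastVLM.

```python
import time
from typing import Callable, Iterable, Tuple


def time_to_first_token(generate: Callable[[], Iterable[str]]) -> Tuple[float, str]:
    """Call generate(), wait for its first token, and return
    (elapsed seconds, first token)."""
    start = time.perf_counter()
    stream = iter(generate())
    first = next(stream)  # blocks until the first token is produced
    return time.perf_counter() - start, first


def fake_model() -> Iterable[str]:
    """Hypothetical stand-in for a streaming vision-language model."""
    time.sleep(0.05)  # simulates image encoding plus prefill
    yield "A"
    yield " cat"


ttft, token = time_to_first_token(fake_model)
print(f"TTFT: {ttft * 1000:.1f} ms, first token: {token!r}")
```

Because the timer starts before `generate()` is called, the measurement includes any work done before streaming begins, which is where a faster vision encoder and a shorter token sequence would show up.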

How to use:

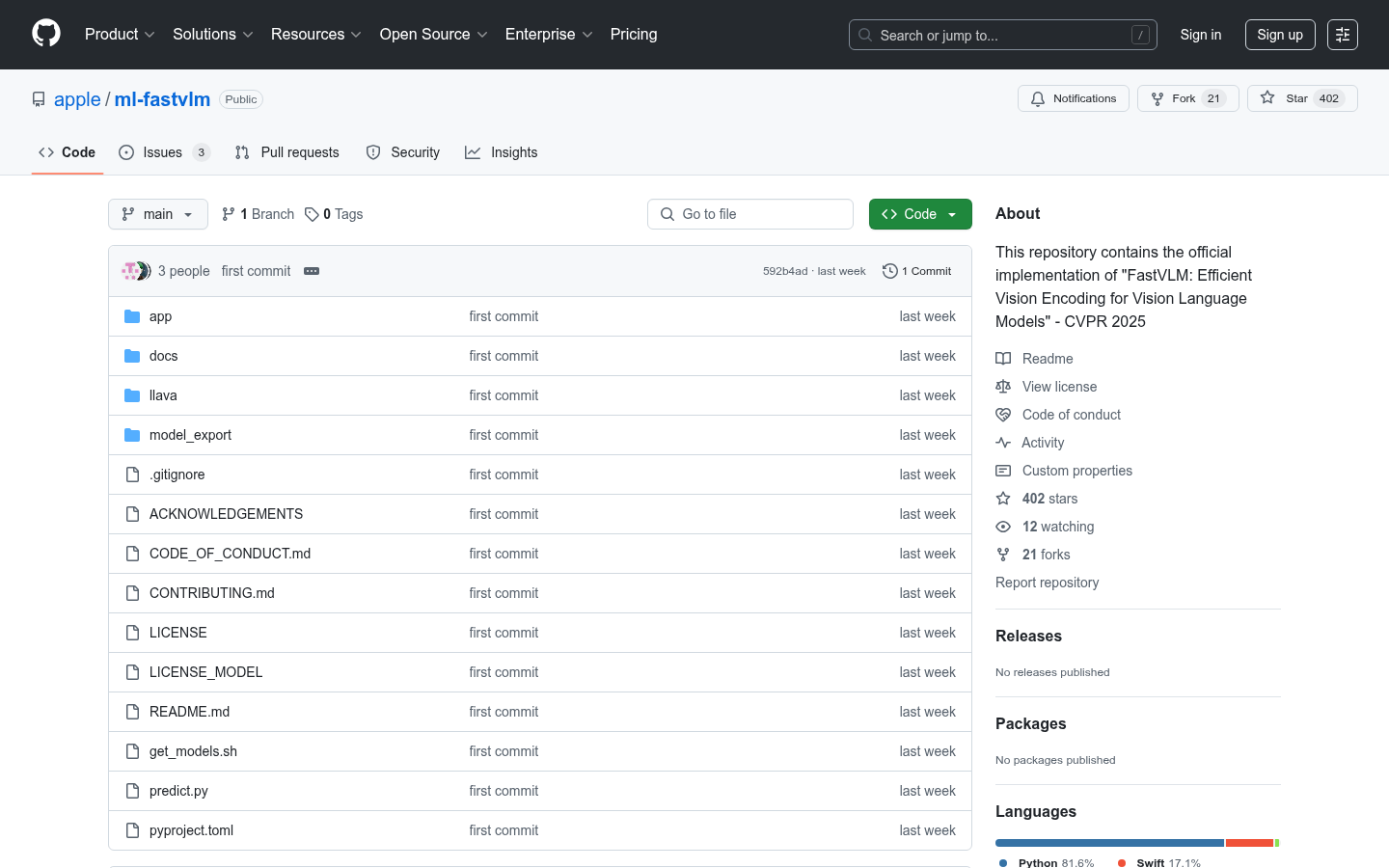

Clone or download the FastVLM code base.

Create the conda environment and install the dependencies.

Download the pre-trained model checkpoint.

Run the inference script, supplying an image and a prompt.

Review and analyze the model's output.
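The inference step above can be sketched as a small helper that assembles the command line. The script name `predict.py` and the flags `--model-path`, `--image-file`, and `--prompt` are assumptions here, as are the example paths. Check them against the repository's own instructions before running anything.

```python
import shlex
from typing import List


def build_inference_command(
    model_path: str,
    image_file: str,
    prompt: str,
    script: str = "predict.py",  # assumed script name
) -> List[str]:
    """Assemble an argument list for the inference script.
    The flag names are assumptions, not confirmed by this document."""
    return [
        "python", script,
        "--model-path", model_path,
        "--image-file", image_file,
        "--prompt", prompt,
    ]


# Hypothetical paths, for illustration only.
cmd = build_inference_command(
    "checkpoints/your-model-dir", "photo.png", "Describe the image."
)
print(shlex.join(cmd))
# To run it inside the activated conda environment:
#   import subprocess; subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids quoting problems when the prompt contains spaces or punctuation.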