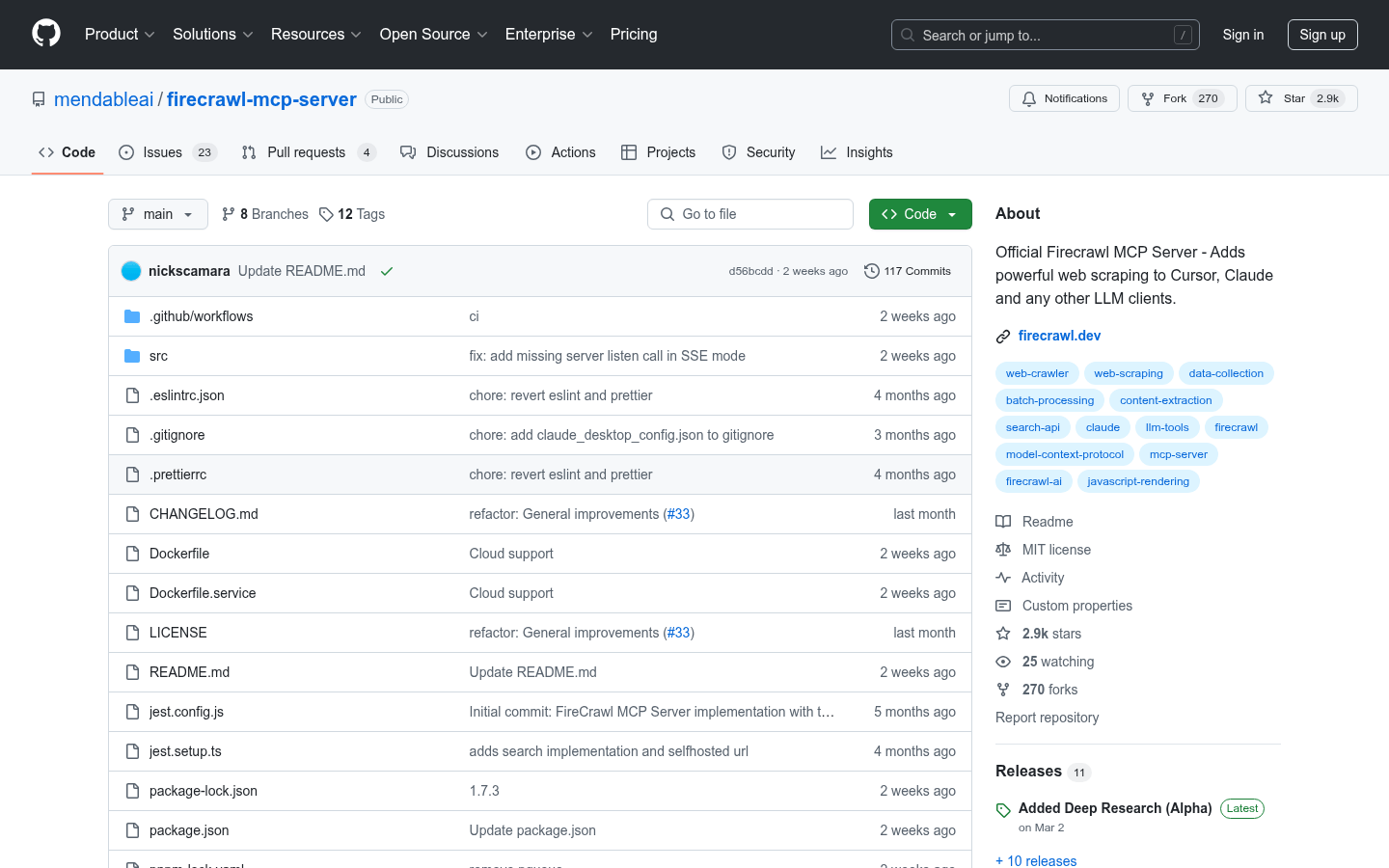

Firecrawl MCP Server is a Model Context Protocol (MCP) server that brings Firecrawl's web crawling capabilities to LLM clients such as Cursor and Claude. It can crawl, search, and extract web content, and it provides automatic retries and rate limiting. It is flexible and scalable, which makes it suitable for developers and researchers who need batch scraping or in-depth research.

Target audience:

This product suits developers, data scientists, and researchers who need to extract large amounts of data from websites. Its efficient crawling and flexible configuration options let users obtain the information they need quickly, especially for in-depth research or large-scale data collection.

Example use cases:

Collecting large amounts of public information for academic research.

Capturing data from competitors' websites for market analysis.

Automatically extracting the latest articles from news websites and keeping them updated in real time.

Product Features:

Crawls, searches, and extracts web page content to help users obtain the information they need.

Renders JavaScript, so dynamically generated content can be crawled accurately.

Supports URL discovery and crawling, following a site's links automatically to improve efficiency.

Retries failed requests automatically with exponential backoff, which keeps crawls stable.

Built-in rate limiting keeps batch processing efficient and reduces the risk of being blocked.

Supports both cloud-hosted and self-hosted Firecrawl instances, so users can choose the deployment that suits them.

Comprehensive logging helps users troubleshoot problems and analyze data.

Intelligent content filtering gives users fine-grained control over what is crawled, based on tags.
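The retry behavior listed among the features can be illustrated with a minimal Python sketch of exponential backoff. This is not Firecrawl's implementation: the function names `backoff_delays` and `fetch_with_retry`, and the parameters `initial_delay`, `factor`, and `max_delay`, are hypothetical and chosen only for illustration.

```python
import time


def backoff_delays(retries, initial_delay=1.0, factor=2.0, max_delay=10.0):
    """Return the wait (in seconds) before each retry.

    The wait grows geometrically (initial_delay * factor**n) and is
    capped at max_delay. All parameter names are illustrative.
    """
    return [min(initial_delay * factor**n, max_delay) for n in range(retries)]


def fetch_with_retry(fetch, url, max_retries=3, sleep=time.sleep, **backoff):
    """Call fetch(url), retrying up to max_retries times on failure.

    `fetch` is any callable supplied by the caller. This sketch retries
    on every exception; a real client would usually retry only on
    transient errors such as timeouts or rate-limit responses.
    """
    delays = backoff_delays(max_retries, **backoff)
    for attempt in range(max_retries + 1):
        try:
            return fetch(url)
        except Exception:
            if attempt == max_retries:
                raise  # out of retries: surface the last error
            sleep(delays[attempt])  # wait longer after each failure
```

Passing `sleep` as a parameter keeps the sketch easy to test, since a test can record the waits instead of actually pausing.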

How to use:

Run the npx command in a terminal to install Firecrawl MCP Server.

Configure the API key and environment variables needed to connect to the Firecrawl service.

Add an MCP server in the client settings and enter the relevant configuration.

Submit crawl requests through Composer or the client's equivalent interface.

Review the logs and results, then analyze and apply the data.
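The configuration steps above can be sketched as a small Python script that prints a client configuration entry. The details are assumptions not stated in this text: the npm package name `firecrawl-mcp`, the environment variable `FIRECRAWL_API_KEY`, and the `mcpServers` layout follow how the project and common MCP clients are typically configured, and should be checked against the official documentation. The helper `build_mcp_config` is hypothetical.

```python
import json


def build_mcp_config(api_key, server_name="firecrawl-mcp"):
    """Build an MCP client configuration entry as a plain dict.

    Assumed, not taken from this text: the package name `firecrawl-mcp`,
    the env var `FIRECRAWL_API_KEY`, and the `mcpServers` key.
    """
    return {
        "mcpServers": {
            server_name: {
                # The client launches the server through npx.
                "command": "npx",
                "args": ["-y", "firecrawl-mcp"],
                # The API key reaches the server as an environment variable.
                "env": {"FIRECRAWL_API_KEY": api_key},
            }
        }
    }


if __name__ == "__main__":
    # Replace the placeholder with a real key before use.
    print(json.dumps(build_mcp_config("YOUR-API-KEY"), indent=2))
```

The printed JSON can then be pasted into the client's MCP settings, adjusting the field names if the client expects a different layout.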