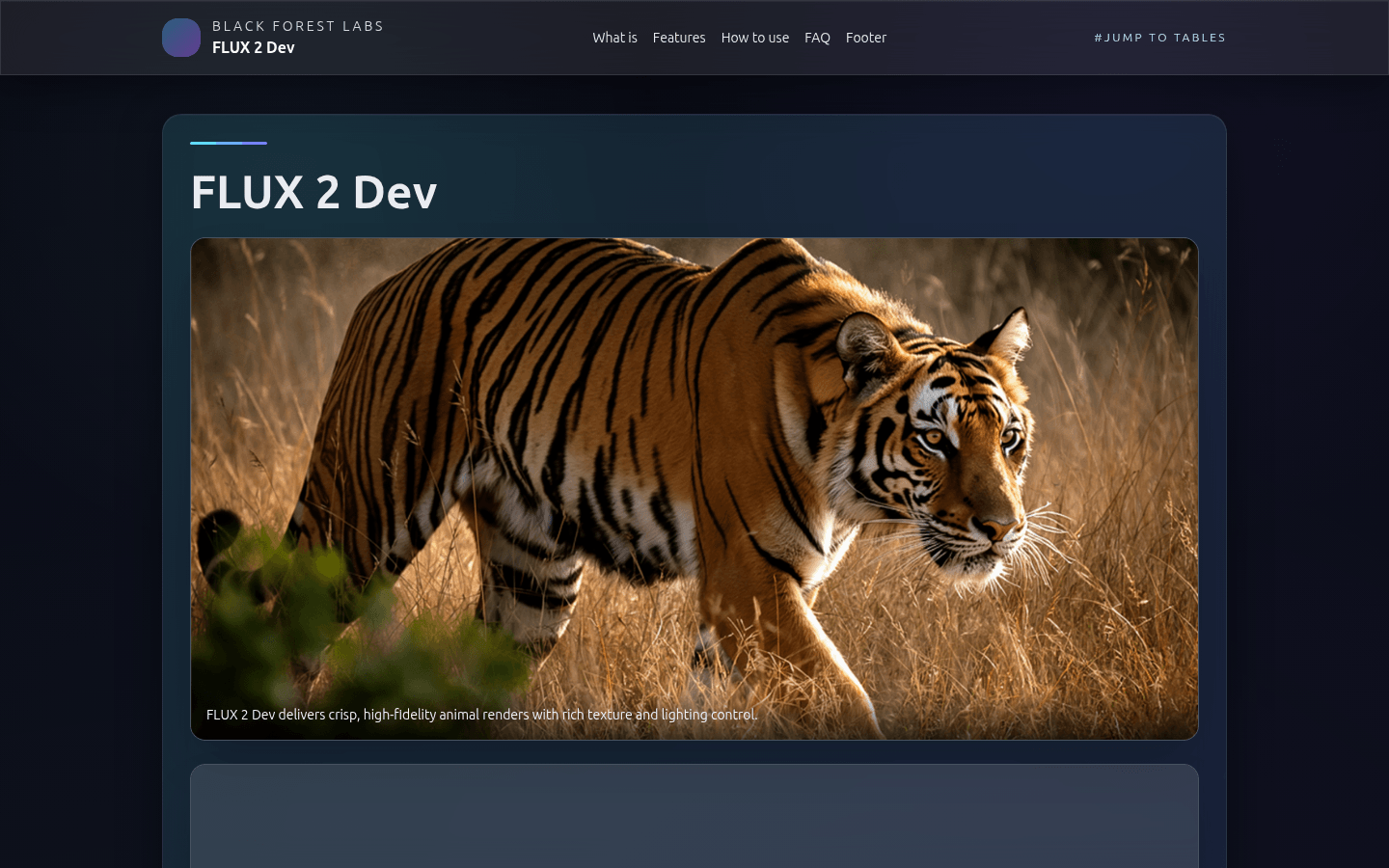

Flux 2 Dev is an open-weight, 32-billion-parameter rectified flow Transformer released by Black Forest Labs for image generation and editing. It has four main strengths. It offers cutting-edge image quality from openly available weights, suitable for production-grade generation. Its multi-reference editing keeps characters, styles, and brands consistent. A long-context vision-language model (VLM) with a 32K-token context handles detailed prompts and layouts. Quantized variants are designed for RTX GPUs, edge devices, and the cloud. The model combines a rectified flow Transformer, a high-resolution VAE, the long-context VLM, and an adaptive scheduler to deliver strong quality at good speed. Pricing is not stated. The model is positioned as a high-quality image generation and editing solution that helps teams deliver polished visuals quickly.

Target users:

Advertising creative teams: Multi-reference editing keeps characters, brands, and styles consistent. This helps teams quickly produce high-quality advertising assets such as hero banners and product renderings.

3D concept artists: High-resolution output and the long-context VLM meet artists' demands for detail and accuracy when creating 3D concept art.

Rapid prototype developers: Efficient inference and flexible deployment let developers iterate on prototypes quickly, saving time and cost.

Cloud service providers: Support for services such as Cloudflare Workers AI enables inference at the edge, giving cloud providers a high-quality image generation offering.

Image editing enthusiasts: Open weights and a rich feature set give enthusiasts a platform to explore and experiment with their creative ideas.

Example usage scenarios:

Advertising creativity: Generate hero banners, product renderings, and other advertising assets with a consistent brand style.

3D concept art: Create highly detailed, high-resolution 3D concept art.

Rapid prototyping: Quickly generate prototype images to validate and iterate on ideas during product development.

Product features:

Multi-reference editing: Blends up to 10 reference images within a single checkpoint to keep characters, brands, and styles consistent. This preserves a unified visual identity across different scenes.
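Before a multi-reference edit, it can help to check the reference set against the stated limit of 10 images. The usage tutorial also suggests at least 2. The helper below is hypothetical and only validates file paths. It also assumes that duplicate files should be rejected, which the source does not state.

```python
MAX_REFS = 10  # "up to 10 reference images", per the feature description
MIN_REFS = 2   # lower bound suggested in the usage tutorial (2-10 images)


def check_references(paths: list[str]) -> list[str]:
    """Return the paths unchanged if they form a valid reference set, else raise ValueError."""
    if not MIN_REFS <= len(paths) <= MAX_REFS:
        raise ValueError(f"expected {MIN_REFS}-{MAX_REFS} reference images, got {len(paths)}")
    # Assumption: a duplicate file takes up a slot without adding new character or brand detail.
    if len(set(paths)) != len(paths):
        raise ValueError("duplicate reference image paths")
    return paths
```

With diffusers, we assume the loaded images are then passed to the pipeline as a list through its `image` argument. Confirm this against the `Flux2Pipeline` documentation for your installed version.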

High-resolution output: Generates images of up to 4 megapixels, with improved text rendering, lighting, hands, and faces for demanding imaging work.
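The sketch below shows how a 4-megapixel budget turns into concrete output dimensions for a given aspect ratio. It rests on two assumptions that are not stated in the source. First, "4 megapixels" is taken as 4 × 1024 × 1024 pixels. Second, both sides are rounded down to a multiple of 16, as latent-diffusion pipelines of this kind commonly require. Check the model card for the actual limits.

```python
import math

# Assumptions (not from the source): the pixel budget and the size multiple below.
MAX_PIXELS = 4 * 1024 * 1024
MULTIPLE = 16


def fit_resolution(aspect_w: int, aspect_h: int, max_pixels: int = MAX_PIXELS,
                   multiple: int = MULTIPLE) -> tuple[int, int]:
    """Largest (width, height) with the given aspect ratio inside max_pixels,
    with both sides rounded down to a multiple of `multiple`."""
    # Solve width * height = max_pixels with width / height = aspect_w / aspect_h.
    height = math.sqrt(max_pixels * aspect_h / aspect_w)
    width = height * aspect_w / aspect_h
    # Rounding down (never up) keeps the result inside the pixel budget.
    width = int(width) // multiple * multiple
    height = int(height) // multiple * multiple
    return width, height


if __name__ == "__main__":
    for aspect in [(1, 1), (16, 9), (3, 2)]:
        w, h = fit_resolution(*aspect)
        print(aspect, (w, h), f"{w * h / 1e6:.2f} MP")
```

Under these assumptions, a square image comes out at 2048 × 2048. Wider aspect ratios trade height for width while staying under the same pixel count.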

Efficient inference: Rectified flow sampling and guidance distillation reduce the number of inference steps and the overhead of guidance, allowing faster iteration.
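A toy one-dimensional sampler shows why straighter sampling paths permit fewer steps. This is an illustration under stated assumptions, not FLUX.2's sampler. It assumes the convention x_t = (1 - t) * x_data + t * noise, so t = 1 is pure noise and t = 0 is data. It also replaces the trained network with the exact velocity for a dataset containing a single point.

```python
# Toy rectified flow sampler (illustration only; not FLUX.2's code).
# Assumed convention: x_t = (1 - t) * x_data + t * noise, velocity v = noise - x_data.


def ideal_velocity(x_t: float, t: float, x_data: float) -> float:
    """Exact velocity for a toy dataset with one point; a real model predicts this."""
    # From x_t = (1 - t) * x_data + t * noise it follows that noise - x_data = (x_t - x_data) / t.
    return (x_t - x_data) / t


def euler_sample(noise: float, x_data: float, steps: int) -> float:
    """Integrate dx/dt = v(x, t) from t = 1 down to t = 0 with `steps` Euler steps."""
    x, dt = noise, 1.0 / steps
    for i in range(steps):
        t = 1.0 - i * dt  # smallest value is 1/steps, so t is never 0 here
        x = x - dt * ideal_velocity(x, t, x_data)
    return x


if __name__ == "__main__":
    for steps in (1, 4, 28):
        print(steps, euler_sample(noise=3.0, x_data=-1.0, steps=steps))
```

Because the toy path is perfectly straight, a single Euler step already lands on the data point. A trained model's velocity field is only approximately straight, so real pipelines still take several steps. The straighter the learned paths, the fewer steps they need.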

Long-context VLM: A vision-language encoder with a 32K-token context follows long prompts, layout instructions, and hex color codes, enabling more detailed image generation.
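The source says the VLM follows long prompts, layout notes, and hex colors, but it gives no required prompt format. The hypothetical helper below shows one plausible way to assemble such a prompt. It also validates the `#RRGGBB` codes so that malformed colors are caught before generation. The wording pattern is an assumption, not a documented schema.

```python
import re

# Hypothetical prompt builder; the sentence pattern is an assumption, not a documented format.
HEX_COLOR = re.compile(r"#[0-9A-Fa-f]{6}")


def build_prompt(subject: str, layout: list[str], palette: dict[str, str]) -> str:
    """Join a subject, layout notes, and #RRGGBB colors into one long prompt."""
    for role, code in palette.items():
        # Reject malformed codes early instead of sending an ambiguous prompt.
        if not HEX_COLOR.fullmatch(code):
            raise ValueError(f"{role}: {code!r} is not a #RRGGBB hex color")
    parts = [subject.rstrip(".") + "."]
    parts += [f"Layout: {note}." for note in layout]
    parts += [f"Use {code} for the {role}." for role, code in palette.items()]
    return " ".join(parts)


prompt = build_prompt(
    "A hero banner for a running shoe",
    ["shoe centered in the left third", "headline text 'RUN FAST' on the right"],
    {"background": "#0A2540", "headline text": "#FF6B00"},
)
print(prompt)
```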

Flexible deployment: Runs through Hugging Face, Cloudflare Workers AI, RTX FP8/FP4 pipelines, and ComfyUI templates, which covers a range of deployment environments.
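For the Cloudflare Workers AI route, a call goes through Cloudflare's generic REST endpoint, `/client/v4/accounts/{account_id}/ai/run/{model}`, with a bearer token. The sketch below only assembles that request and does not send it. The model ID `@cf/black-forest-labs/flux-2-dev` and the JSON body containing a single `prompt` field are assumptions. Check the model's page in the Workers AI catalog for its real input schema, since some image models may expect multipart form data instead of JSON.

```python
import json
import urllib.request

# Assumptions, not confirmed by the source: the model ID and the JSON body shape.
MODEL_ID = "@cf/black-forest-labs/flux-2-dev"


def build_request(account_id: str, api_token: str, prompt: str) -> urllib.request.Request:
    """Assemble (but do not send) a POST request to the Workers AI REST API."""
    url = f"https://api.cloudflare.com/client/v4/accounts/{account_id}/ai/run/{MODEL_ID}"
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {api_token}",
                 "Content-Type": "application/json"},
    )
```

To send the request, pass it to `urllib.request.urlopen`. The response format is not described in the source. Inside a Cloudflare Worker, the same model would normally be called through the Workers AI binding (`env.AI.run`) rather than the REST API.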

Ecosystem support: Supports Diffusers integration, quantized variants, control hints, and extended APIs, making it easy to integrate with other tools and extend its functionality.
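Some quick arithmetic shows why quantized variants matter for a 32-billion-parameter model. The estimate below covers the weights alone and assumes the 32 billion parameters refer to the Transformer only. It ignores activations, the VAE, the VLM text encoder, and quantization metadata such as scale factors, so real memory use is higher.

```python
PARAMS = 32_000_000_000  # "32 billion parameters", from the overview above


def weight_memory_gb(params: int, bits_per_weight: int) -> float:
    """Rough size of the weights alone in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


for fmt, bits in [("BF16", 16), ("FP8", 8), ("FP4 / 4-bit", 4)]:
    print(f"{fmt}: ~{weight_memory_gb(PARAMS, bits):.0f} GB")
```

This prints about 64 GB for BF16, 32 GB for FP8, and 16 GB for 4-bit weights. Each halving of precision halves the weight footprint, which is what brings the model within reach of single RTX GPUs.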

Adaptive scheduling: A custom rectified flow scheduler uses fewer steps for drafts. Guidance distillation folds the guidance signal into the weights, and the step count can be adjusted to suit different needs.
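Two small helpers illustrate the scheduling ideas. `shifted_sigmas` builds a noise schedule with the time-shift formula used by diffusers' `FlowMatchEulerDiscreteScheduler`. Whether FLUX.2 uses exactly this schedule, and the default `shift=3.0`, are assumptions. `forward_passes` counts model evaluations. It shows why folding guidance into the weights roughly halves the per-step cost compared with classifier-free guidance.

```python
def shifted_sigmas(steps: int, shift: float = 3.0) -> list[float]:
    """Noise levels from 1.0 (pure noise) down to 0.0 (clean image), one per step boundary."""
    base = [1.0 - i / steps for i in range(steps + 1)]
    # Time shift as in diffusers' FlowMatchEulerDiscreteScheduler: with shift > 1,
    # every intermediate level moves toward 1.0, so more steps are spent at high noise.
    return [shift * s / (1.0 + (shift - 1.0) * s) for s in base]


def forward_passes(steps: int, guidance_distilled: bool) -> int:
    """Model evaluations per image. Classifier-free guidance needs a conditional and an
    unconditional prediction per step; a guidance-distilled model needs only one."""
    return steps if guidance_distilled else 2 * steps


draft = shifted_sigmas(steps=8)   # quick preview pass (step counts are illustrative)
final = shifted_sigmas(steps=50)  # slower, higher-quality pass
print(len(draft) - 1, forward_passes(8, True), forward_passes(50, False))
```

A draft and a final render then differ only in the step count. The distilled model pays one evaluation per step in both cases.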

Local editing: Local edits are made through prompt embeddings and image masks. Combined with multi-image input and control hints, this enables targeted adjustments guided by depth, pose, segmentation, and similar signals.
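The source does not say how FLUX.2 applies image masks internally. As a generic illustration, the compositing step below keeps generated pixels inside the mask and original pixels outside it, so unmasked regions stay untouched. The helpers are hypothetical and work on toy grayscale images stored as nested lists.

```python
Grid = list[list[float]]  # toy grayscale image: rows of pixel values


def rect_mask(width: int, height: int, box: tuple[int, int, int, int]) -> Grid:
    """1.0 inside box = (left, top, right, bottom), right/bottom exclusive; 0.0 elsewhere."""
    left, top, right, bottom = box
    return [[1.0 if left <= x < right and top <= y < bottom else 0.0
             for x in range(width)] for y in range(height)]


def composite(original: Grid, edited: Grid, mask: Grid) -> Grid:
    """Edited pixels where mask is 1, original pixels where it is 0; fractional values blend."""
    return [[m * e + (1.0 - m) * o for o, e, m in zip(row_o, row_e, row_m)]
            for row_o, row_e, row_m in zip(original, edited, mask)]
```

A soft mask with values between 0 and 1 along its border blends the two images gradually, which hides the seam between edited and untouched areas.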

Usage tutorial:

1. Use on Hugging Face: Import the required libraries, such as torch and diffusers. Load the pretrained model with Flux2Pipeline, specifying the device, data type, and repository ID. Set parameters such as the prompt, the number of inference steps, and the guidance scale, then generate the image and save it.

2. Deploy on Cloudflare Workers AI: Run inference at the edge, using Cloudflare's edge network for low latency and global coverage.

3. Optimize performance: Use techniques such as quantization (for example, 4-bit variants), weight streaming, and guidance distillation. Choose the strategy that fits your GPU and requirements.

4. Multi-image input: To keep characters, styles, and brands consistent, supply 2 to 10 reference images for multi-reference editing.

5. Local editing: Use prompt embeddings and image masks for local edits. Combine them with control hints, such as depth, pose, or segmentation, for finer adjustments.
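Step 1 of the tutorial can be sketched with diffusers as follows. This is a minimal sketch that depends on several assumptions. It needs a diffusers release that includes `Flux2Pipeline`, the repository ID `black-forest-labs/FLUX.2-dev`, and a CUDA GPU. The step count and guidance scale shown are also assumptions, so check the model card for recommended values. Imports sit inside the function so the module can be loaded without torch installed.

```python
# Assumptions: diffusers ships Flux2Pipeline, the repository ID below, and a CUDA device.
REPO_ID = "black-forest-labs/FLUX.2-dev"


def generate(prompt: str, out_path: str = "flux2_output.png",
             num_inference_steps: int = 50, guidance_scale: float = 4.0,
             seed: int = 42, device: str = "cuda") -> str:
    """Load the pipeline, generate one image for `prompt`, save it, and return the path."""
    import torch
    from diffusers import Flux2Pipeline

    # Data type: bfloat16 halves memory compared with float32.
    pipe = Flux2Pipeline.from_pretrained(REPO_ID, torch_dtype=torch.bfloat16)
    # Keeps components on the CPU and moves each to the GPU only while it runs:
    # slower, but needs far less VRAM for a 32B-parameter model.
    pipe.enable_model_cpu_offload()

    image = pipe(
        prompt=prompt,
        num_inference_steps=num_inference_steps,
        guidance_scale=guidance_scale,
        # Fixed seed so the same prompt reproduces the same image.
        generator=torch.Generator(device=device).manual_seed(seed),
    ).images[0]
    image.save(out_path)
    return out_path
```

Calling `generate("A studio product shot of a ceramic mug on a walnut desk")` writes `flux2_output.png`. On smaller GPUs, the quantized variants from step 3 are the more practical starting point.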