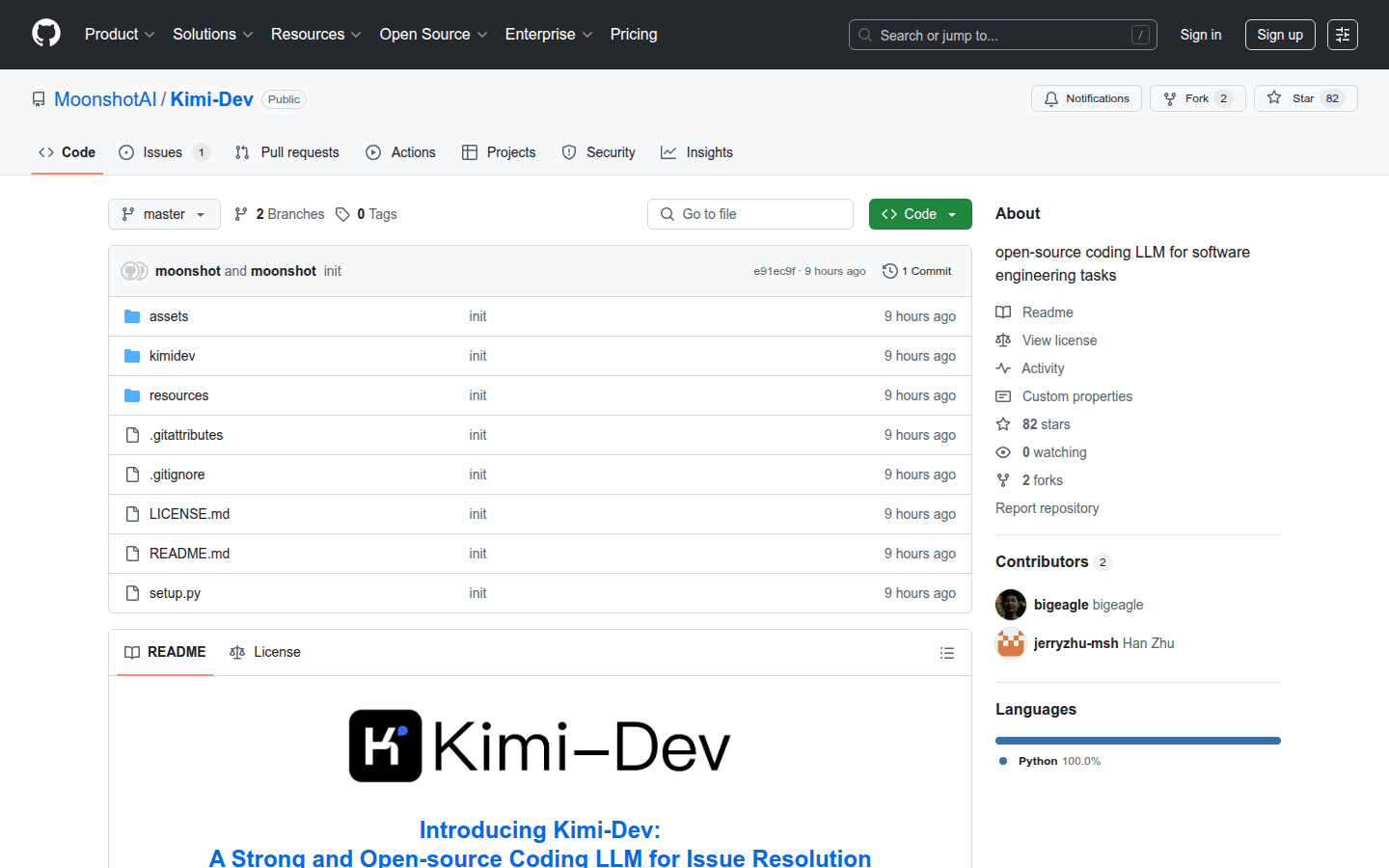

Kimi-Dev is an open-source coding LLM designed to solve software engineering problems. It is optimized with large-scale reinforcement learning in real development environments so that its fixes are correct and robust. Kimi-Dev-72B achieves 60.4% on SWE-bench Verified, surpassing other open-source models, and is currently one of the most advanced coding LLMs. The model is available on Hugging Face and GitHub for download and deployment, and is suitable for developers and researchers.

Target audience:

This product is suitable for software engineers, developers, and researchers who want to solve coding problems efficiently and speed up development. Because it is open source, users can freely modify and extend it to fit their needs.

Example usage scenarios:

Use Kimi-Dev to resolve bugs in open source projects and automatically generate test cases.

During the software development process, use Kimi-Dev to improve the quality and reliability of your code.

Use Kimi-Dev as a teaching tool to help computer science students understand solutions to coding problems.

Product Features:

Automatic code repair: Intelligently locates and fixes errors in the code based on the problem description.

Unit test generation: Automatically generates relevant unit tests for the code to improve code quality.

Reinforcement learning optimization: Trained with reinforcement learning so that the model's fixes meet the standards of real-world development.

Easy deployment: Can be deployed and used on-premises or in the cloud.

Strong community support: The project is open source and encourages developers and researchers to contribute and improve it.

How to use:

Clone Kimi-Dev repository: Use the git clone command to download the project.

Create and activate the environment: Use conda to create a virtual environment and activate it.

Install dependencies: Run the pip install command to install the necessary dependencies.

Prepare the project structure: download and decompress the processed data.

Serve the model with vLLM: Deploy the model using the vllm serve command.

Run the repair script: Run the bugfixer or testwriter script as needed for code repair or test writing.
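Once the model is served, it can also be queried directly, since vllm serve exposes an OpenAI-compatible HTTP API. The following is a minimal Python sketch of that idea, not the project's own bugfixer script. The server address, the served model name, the prompt wording, and the helper names build_chat_payload and request_fix are all assumptions made for illustration; adjust them to match how the server was actually launched.

```python
import json
import urllib.request

# Assumptions (not from the source): the address and served model name
# depend on the options passed to `vllm serve`.
BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "kimi-dev"


def build_chat_payload(issue_text, file_path, file_content, model=MODEL_NAME):
    """Build an OpenAI-style chat completion payload asking for a bug fix.

    The prompt layout here is hypothetical, not the format used by the
    repository's own scripts.
    """
    user_prompt = (
        "Issue description:\n"
        f"{issue_text}\n\n"
        f"File: {file_path}\n"
        f"{file_content}\n\n"
        "Propose a patch that fixes the issue."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a software engineering assistant."},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": 0.0,
        "max_tokens": 1024,
    }


def request_fix(payload, base_url=BASE_URL, timeout=600):
    """POST the payload to the chat completions endpoint and return the reply text."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses put the generated text here.
    return body["choices"][0]["message"]["content"]
```

With a server running, calling request_fix(build_chat_payload(issue, path, source)) would return the model's proposed fix as text. Keeping payload construction separate from the network call makes the prompt easy to inspect or test without a running model.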