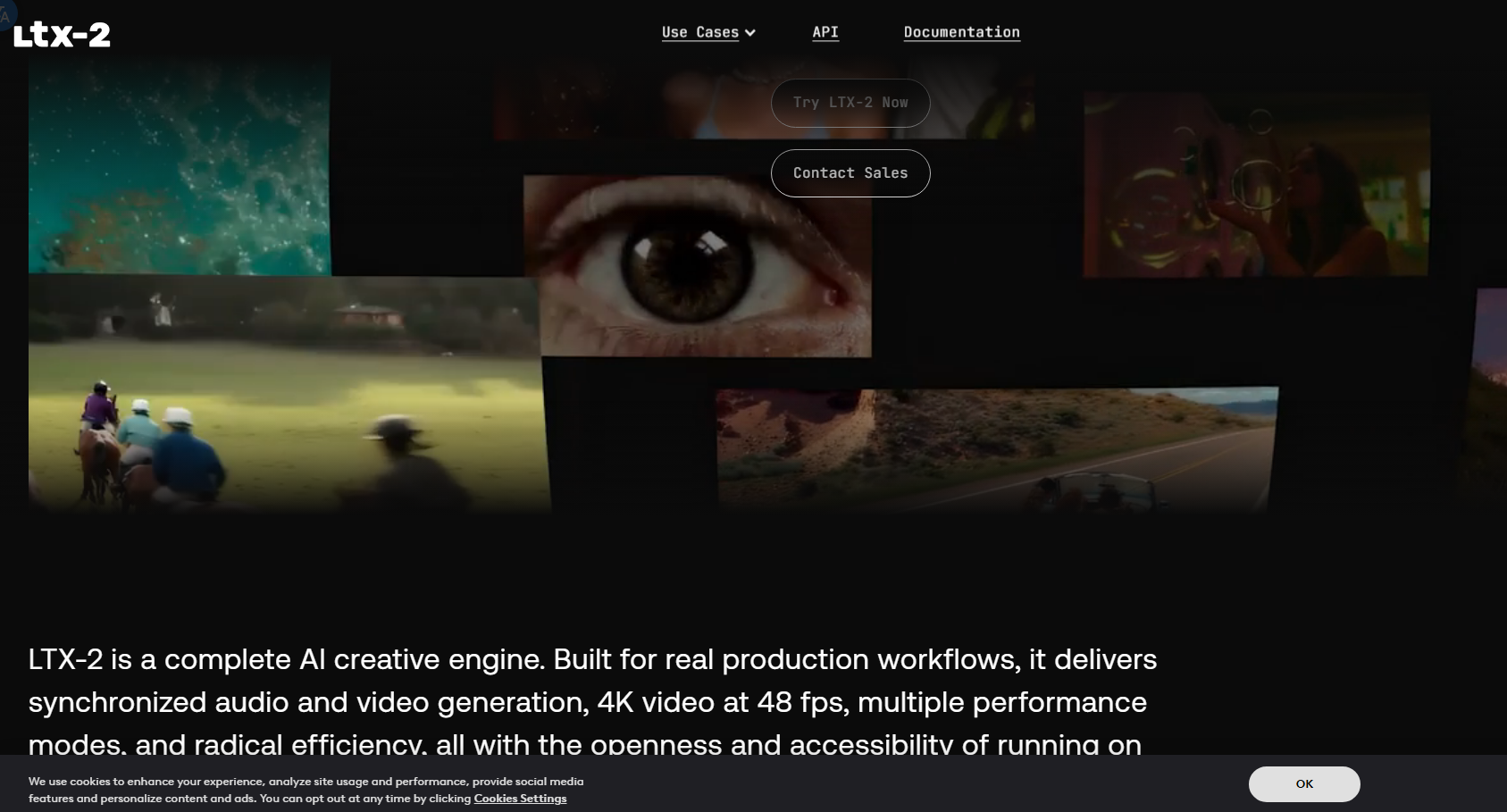

LTX-2 is an open-source AI video generation model from Lightricks. Built on diffusion technology, it converts static images or text prompts into controllable, high-fidelity video sequences. It generates audio and video together and is optimized for customization, speed, and creative flexibility, giving studios, research teams, and independent developers an accessible creative tool. LTX-2 runs efficiently on consumer-grade GPUs, which greatly reduces the cost of professional video production.

Target audience:

"LTX-2 is ideal for video producers, animators, and other creative professionals because it produces high-quality video quickly, simplifies the creative process, and reduces costs."

Example usage scenarios:

Transform static concept art into dynamic animated scenes.

Generate animated clips or trailers for your game, directly from sketches.

Repair and restore older footage to preserve original creative intent.

Product features:

Generate videos from text or images: Users can generate short or long videos by describing scenes or uploading images.

Synchronized audio and video generation: Generates matching sound, music, and dialogue at the same time as the video, so the output is complete in a single pass.

High-resolution support: Can generate videos up to 4K resolution, suitable for professional production needs.

Flexible creative control: Supports multiple keyframe conditions and contextual controls, allowing users to precisely guide movement and style.

Integrated workflow: Seamlessly connects to editing software, broadcast tools and VFX pipelines to increase productivity.

Open source: Open weights and training code will be released soon, allowing users to freely customize and extend the model.

Efficient performance: Optimized inference speed for consumer-grade GPUs, supporting rapid iteration.

Usage tutorial:

Visit the LTX-2 official website or GitHub page.

Download the required code and model weights.

Set up the development environment according to the instructions in the documentation.

Upload an image or enter a text prompt, then select your desired output settings.

Run the generation process to get your video and audio output.
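The input and settings step above can be sketched as a small pre-flight check, run before handing a request to the model. This is a minimal illustration and not the LTX-2 API: `GenerationRequest`, its fields, and the default values are all hypothetical names, and the sketch assumes that "4K" means 3840x2160 (UHD).

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical names throughout: this is not the LTX-2 API.
# Assumption: "4K" is taken to mean 3840x2160 (UHD).
MAX_WIDTH, MAX_HEIGHT = 3840, 2160


@dataclass
class GenerationRequest:
    prompt: Optional[str] = None       # text description of the scene
    image_path: Optional[str] = None   # path to a conditioning image
    width: int = 1920
    height: int = 1080
    with_audio: bool = True            # request audio alongside the video

    def validate(self) -> None:
        """Raise ValueError if the request is missing input or exceeds 4K."""
        if not self.prompt and not self.image_path:
            raise ValueError("provide a text prompt, an image, or both")
        if self.width <= 0 or self.height <= 0:
            raise ValueError("width and height must be positive")
        if self.width > MAX_WIDTH or self.height > MAX_HEIGHT:
            raise ValueError("resolution exceeds 4K (3840x2160)")


request = GenerationRequest(prompt="A paper boat drifting down a rainy street")
request.validate()  # passes: a prompt is set and 1920x1080 is within 4K
```

Checking the request up front means a missing input or an unsupported resolution is caught before any GPU time is spent. The actual parameter names and limits should be taken from the official documentation.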