What is MedTrinity-25M?

MedTrinity-25M is a large-scale multimodal medical dataset with annotations at multiple levels of granularity. Developed by a team of researchers, it aims to advance research in medical image and text processing. The dataset is built by a pipeline that extracts information from collected data and then generates detailed textual descriptions. The resulting data supports tasks such as visual question answering and pathology image analysis.

Who can use MedTrinity-25M?

MedTrinity-25M is primarily aimed at researchers and developers working in medical image processing and natural language processing. It offers extensive medical image and text data to support model training, algorithm testing, and the development of new methods.

Example Scenarios

Researchers can train deep learning models to identify lesions in medical images using MedTrinity-25M.

Developers can build systems that automatically generate medical image reports with this dataset.

Educational institutions can use MedTrinity-25M as teaching material to help students understand the complexities of medical image analysis.

Key Features

Data Extraction: Extracts key information from the collected data. This includes integrating metadata to produce coarse-grained captions, localizing regions of interest and collecting relevant medical knowledge.

Multigranularity Text Description Generation: Uses the extracted information to prompt multimodal large language models, which generate fine-grained annotations.

Model Training and Evaluation: Provides scripts for training and evaluating models, supporting both pre-training and fine-tuning on specific datasets.

Model Library: Offers pre-trained models such as LLaVA-Med++, which can be fine-tuned for specific medical image analysis tasks.

Quick Start Guide: Includes detailed installation and usage instructions to help users get started quickly.

Paper Release: The accompanying research paper has been published on arXiv and describes the background and methodology in detail.

Community Support: The project acknowledges several research and cloud computing programs that provided computational resources for developing the dataset and conducting the research.
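The Data Extraction and Multigranularity Text Description Generation steps listed above can be sketched as a prompt-assembly function. The extracted information consists of a coarse caption, a localized region and retrieved medical knowledge. The function combines these into a single request for a multimodal model. This is a minimal sketch under assumptions: the `ExtractedInfo` record, its field names, the `(x_min, y_min, x_max, y_max)` box convention and the prompt wording are all hypothetical illustrations, not the project's actual schema or prompt.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


# Hypothetical record of what the extraction stage produces for one image.
# Field names are illustrative assumptions, not MedTrinity-25M's real schema.
@dataclass
class ExtractedInfo:
    coarse_caption: str                                # from metadata integration
    roi_bbox: Optional[Tuple[int, int, int, int]]      # (x_min, y_min, x_max, y_max) from region localization
    knowledge: List[str] = field(default_factory=list)  # retrieved medical knowledge snippets


def build_description_prompt(info: ExtractedInfo) -> str:
    """Assemble a text prompt asking a multimodal LLM for a multigranular description."""
    lines = [
        "You are given a medical image.",
        f"Coarse caption: {info.coarse_caption}",
    ]
    # Only mention the region of interest when localization found one.
    if info.roi_bbox is not None:
        x0, y0, x1, y1 = info.roi_bbox
        lines.append(f"Region of interest (pixel box): ({x0}, {y0}) to ({x1}, {y1})")
    # Attach retrieved knowledge as a bulleted context block, if any.
    if info.knowledge:
        lines.append("Relevant medical knowledge:")
        lines.extend(f"- {k}" for k in info.knowledge)
    # Illustrative instruction that asks for both global and local detail.
    lines.append(
        "Describe the image from global to local: modality and organ, "
        "then the region of interest and any abnormality in it, "
        "then how that region relates to the surrounding tissue."
    )
    return "\n".join(lines)


# Usage with made-up example values.
example = ExtractedInfo(
    coarse_caption="Chest X-ray",
    roi_bbox=(120, 80, 260, 210),
    knowledge=["Consolidation appears as increased opacity."],
)
print(build_description_prompt(example))
```

The sketch keeps the prompt assembly separate from any particular model client. The same function could therefore feed whichever multimodal model performs the generation step.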

Getting Started Tutorial

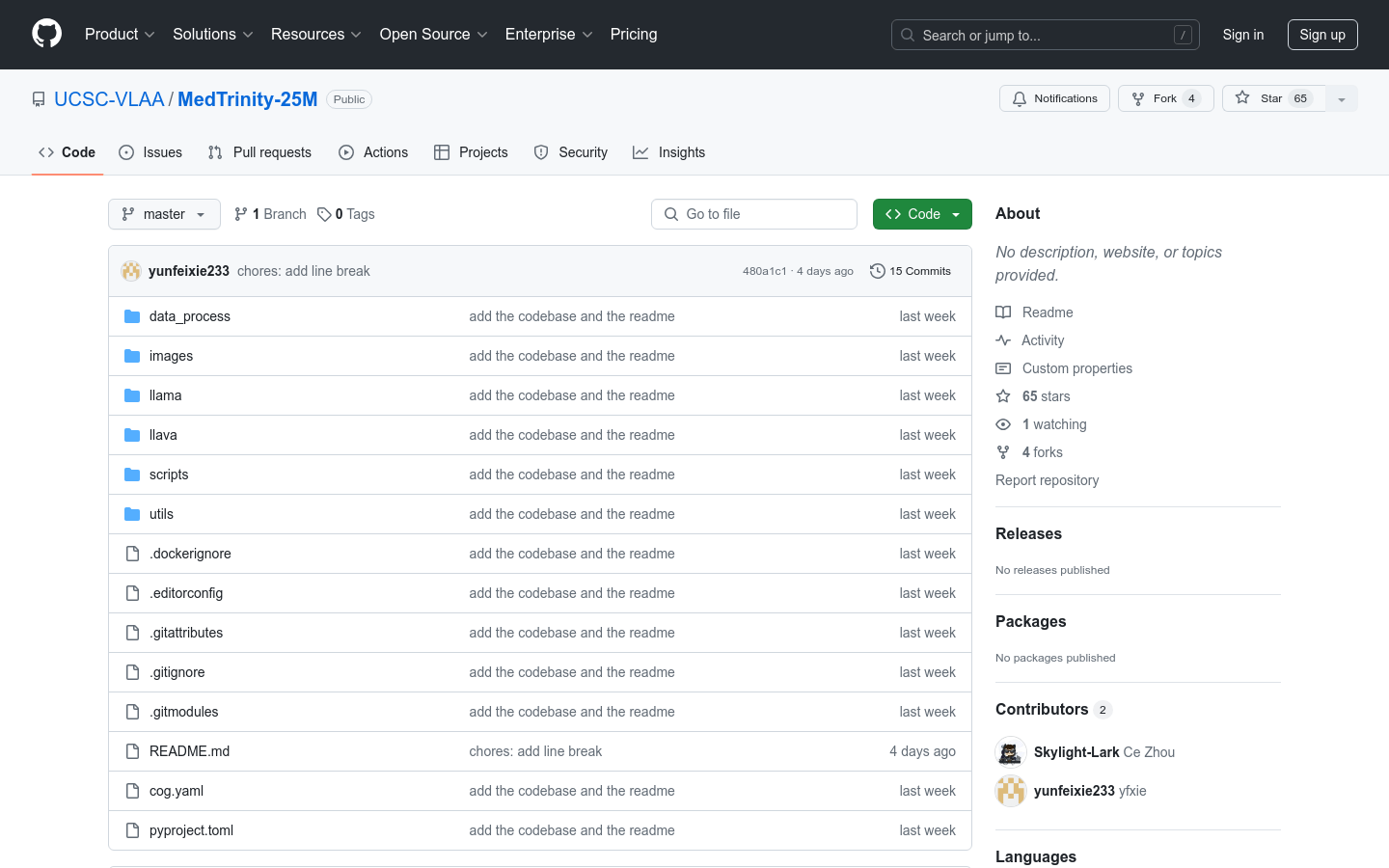

1. Visit the GitHub page and clone the MedTrinity-25M repository locally.

2. Install the required software packages and dependencies as described in the Quick Start Guide.

3. Download the base model LLaVA-Meta-Llama-3-8B-Instruct-FT-S2.

4. Use the provided scripts to pre-train and fine-tune models.

5. Evaluate the trained models with the provided evaluation scripts.

6. Adapt the code to develop and test your own algorithms as your research requires.
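For steps 4 and 5, training data for LLaVA-family models such as LLaVA-Med++ is commonly stored as conversation records. Each record contains an `id`, an `image` path and a `conversations` list that alternates `human` and `gpt` turns. The first human turn carries an `<image>` token. The sketch below converts an image-text pair into such a record. The assumption that the MedTrinity-25M scripts expect exactly this layout has not been confirmed. The helper names and example values are hypothetical, so check the repository's own data-preparation instructions before relying on it.

```python
import json
from typing import Dict, List


def to_llava_record(sample_id: str, image_path: str, question: str, answer: str) -> Dict:
    """Build one LLaVA-style conversation record (assumed format, hypothetical helper)."""
    return {
        "id": sample_id,
        "image": image_path,
        "conversations": [
            # The <image> token marks where the visual features are inserted.
            {"from": "human", "value": f"<image>\n{question}"},
            {"from": "gpt", "value": answer},
        ],
    }


def records_to_json(records: List[Dict]) -> str:
    """Serialize records as a JSON list, keeping non-ASCII text readable."""
    return json.dumps(records, ensure_ascii=False, indent=2)


# Usage with made-up example values.
records = [
    to_llava_record(
        "sample-0001",
        "images/sample-0001.jpg",
        "Describe this image in detail.",
        "Chest X-ray showing increased opacity in the right lower lobe.",
    )
]
print(records_to_json(records))
```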