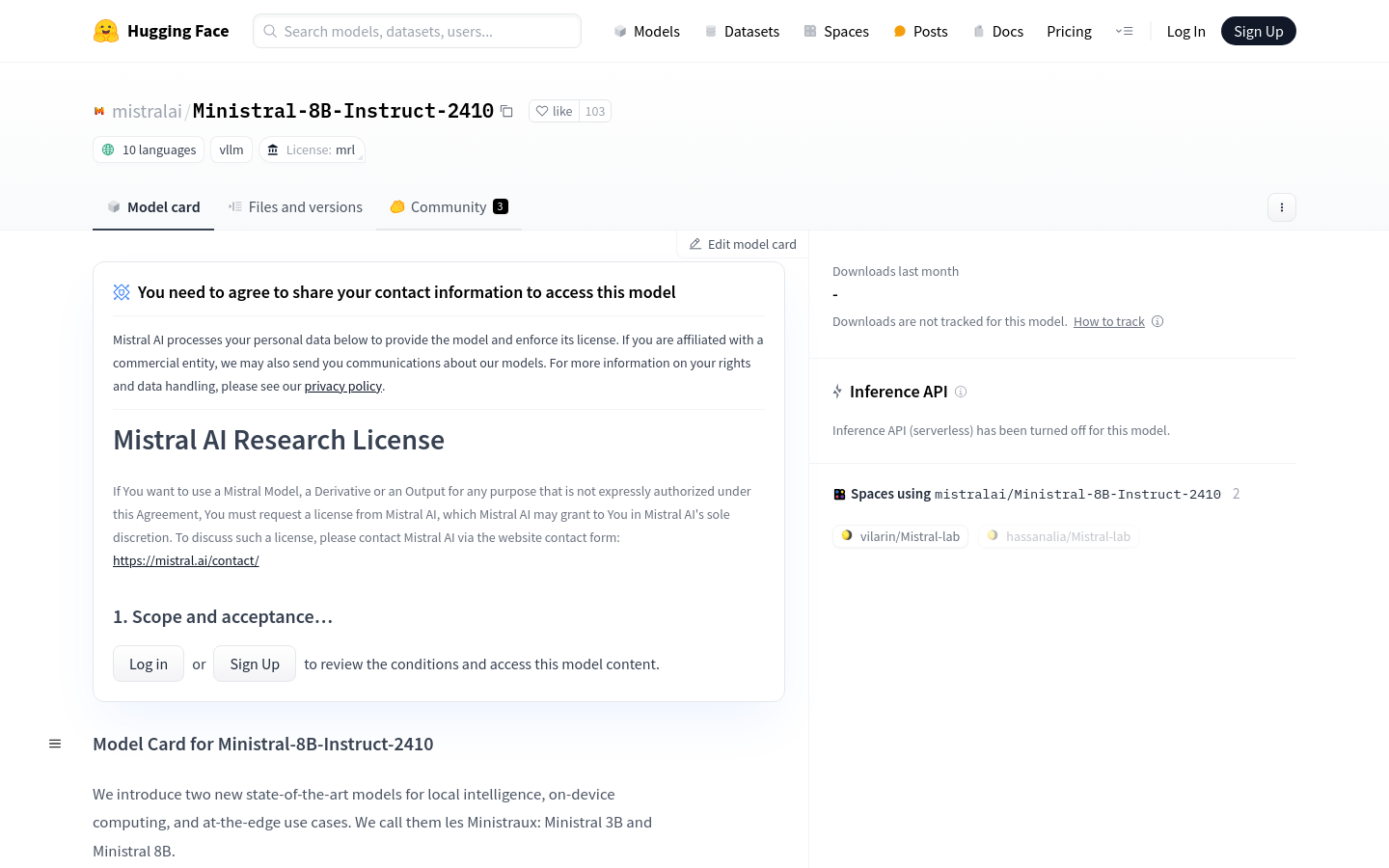

Ministral-8B-Instruct-2410 model introduction

Ministral-8B-Instruct-2410 is an instruction-tuned large language model from Mistral AI with roughly 8 billion parameters. It is designed for local on-device computing and edge use cases, and it performs strongly compared with other models of its size.

Model features

Supports a 128k context window with an interleaved sliding-window attention mechanism

Trained on multilingual and code data

Supports function calling

Vocabulary size of 131k tokens

Performs well on benchmarks covering knowledge, common sense, code, math, and multilingual ability

Especially good at handling complex conversations and tasks
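The sliding-window attention in the feature list restricts each token to attending only to the most recent tokens within a fixed window, which lowers attention cost on long inputs. In an interleaved (or staggered) design, some layers use the window while others attend over the full causal context. The sketch below illustrates the idea with plain-Python boolean masks; the window size and the alternating layer pattern in `interleaved_masks` are hypothetical illustration values, not Ministral-8B's actual configuration.

```python
from typing import List

Mask = List[List[bool]]


def causal_mask(seq_len: int) -> Mask:
    """Full causal attention: query i may attend to every key j <= i."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


def sliding_window_mask(seq_len: int, window: int) -> Mask:
    """Sliding-window causal attention: query i attends only to keys j with
    i - window < j <= i, i.e. itself plus the previous window - 1 tokens."""
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


def interleaved_masks(seq_len: int, num_layers: int, window: int,
                      full_every: int = 2) -> List[Mask]:
    """Hypothetical interleaving: every `full_every`-th layer uses full causal
    attention and all other layers use a sliding window. This pattern is an
    assumption for illustration, not the model's real layer layout."""
    masks = []
    for layer in range(num_layers):
        if (layer + 1) % full_every == 0:
            masks.append(causal_mask(seq_len))
        else:
            masks.append(sliding_window_mask(seq_len, window))
    return masks


if __name__ == "__main__":
    # Show a 6-token sliding-window mask with a window of 3 ('x' = may attend).
    for row in sliding_window_mask(6, 3):
        print("".join("x" if allowed else "." for allowed in row))
    # Prints a banded lower-triangular pattern:
    # x.....
    # xx....
    # xxx...
    # .xxx..
    # ..xxx.
    # ...xxx
```

Each row has at most `window` allowed positions, so per-layer attention work grows linearly with sequence length instead of quadratically; the full-attention layers are what let information still flow across the whole context.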

Target users

The model is aimed at researchers, developers, and businesses that need high-performance language models for complex natural language processing tasks such as language translation, text summarization, question answering, and chatbots. It is especially suitable for scenarios that run on local devices or at the edge, where it reduces dependence on cloud services, speeds up data processing, and improves data security by keeping data on the device.

Usage scenarios

Build production-ready inference pipelines using the vLLM library

Chat or Q&A in a server-client environment

Use mistral-inference to quickly test model performance

Process tasks with inputs of over 100k tokens
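For the server-client chat scenario, one common setup is to run vLLM's OpenAI-compatible server (for example `vllm serve mistralai/Ministral-8B-Instruct-2410 --tokenizer_mode mistral --config_format mistral --load_format mistral`) and send chat requests to it over HTTP. The sketch below is a minimal client using only the Python standard library; the server address `http://localhost:8000` (vLLM's default port), the `max_tokens` default, and the helper names `build_chat_payload` and `ask` are assumptions for illustration.

```python
import json
import urllib.request
from typing import Any, Dict

# Assumed server location: vLLM's OpenAI-compatible server listens on port 8000 by default.
BASE_URL = "http://localhost:8000"
MODEL_NAME = "mistralai/Ministral-8B-Instruct-2410"


def build_chat_payload(question: str, max_tokens: int = 512) -> Dict[str, Any]:
    """Build an OpenAI-style chat completion request body (hypothetical helper)."""
    return {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": question}],
        "max_tokens": max_tokens,
    }


def ask(question: str, base_url: str = BASE_URL, timeout: float = 120.0) -> str:
    """Send one question to the server and return the assistant's reply text."""
    payload = json.dumps(build_chat_payload(question)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # OpenAI-compatible responses put the generated text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask("What is the capital of France?")` returns the model's answer as a string. Keeping the client to plain HTTP means any machine that can reach the server can chat with the model without installing vLLM locally.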

Tutorial

1 Install the vLLM and mistral_common libraries with pip: `pip install --upgrade vllm` and `pip install --upgrade mistral_common`

2 Download the model from the Hugging Face Hub and run inference with the vLLM library

3 Set the `SamplingParams`, for example the maximum number of tokens to generate (`max_tokens`)

4 Create an LLM instance and provide the model name, tokenizer mode, config format and load format.

5 Prepare the input prompt as a list of chat messages, each a dictionary with a role and content, to pass to the LLM instance

6 Call the `chat` method and read the generated text from the returned output
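The six steps above can be combined into a short script. This is a minimal sketch that follows those steps, assuming a recent vLLM release that supports the `mistral` tokenizer mode, config format, and load format, plus a GPU with enough memory for the model. The `max_tokens` default, the helper names `build_messages` and `run_chat`, and the lazy import are illustrative choices, not part of the original tutorial; vLLM is imported inside `run_chat` so the message-building part can be tried without a GPU.

```python
from typing import Dict, List

MODEL_NAME = "mistralai/Ministral-8B-Instruct-2410"


def build_messages(prompt: str) -> List[Dict[str, str]]:
    """Step 5: wrap the prompt in the chat-message format accepted by LLM.chat."""
    return [{"role": "user", "content": prompt}]


def run_chat(prompt: str, max_tokens: int = 512) -> str:
    """Steps 2-6: load the model with vLLM and return the generated reply."""
    # Imported lazily so this module can be loaded without vLLM installed.
    from vllm import LLM, SamplingParams

    # Step 3: sampling settings, here only the maximum number of generated tokens.
    sampling_params = SamplingParams(max_tokens=max_tokens)

    # Steps 2 and 4: the weights are fetched from the Hugging Face Hub on first use;
    # the "mistral" settings select Mistral's own tokenizer and checkpoint formats.
    llm = LLM(
        model=MODEL_NAME,
        tokenizer_mode="mistral",
        config_format="mistral",
        load_format="mistral",
    )

    # Step 6: LLM.chat applies the chat template and returns one result per conversation.
    outputs = llm.chat(build_messages(prompt), sampling_params=sampling_params)
    return outputs[0].outputs[0].text
```

For example, `print(run_chat("Summarize the benefits of running language models on edge devices."))` loads the model and prints its reply. Because this sketch creates a new `LLM` instance on every call, a real application would create the instance once and reuse it for repeated queries.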