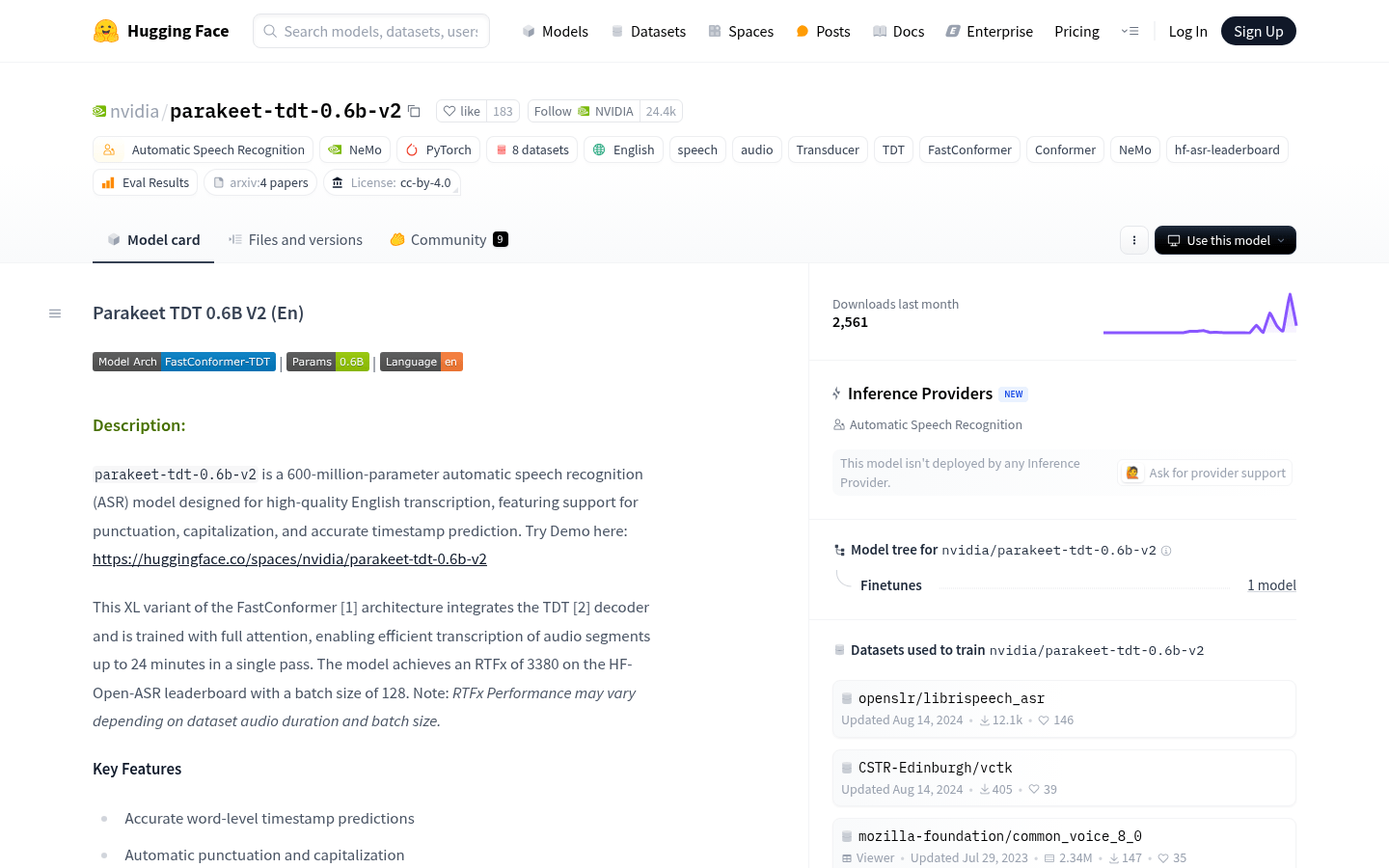

parakeet-tdt-0.6b-v2 is a 600-million-parameter automatic speech recognition (ASR) model for high-quality English transcription, with accurate timestamp prediction and automatic punctuation and capitalization. Built on the FastConformer architecture, it can efficiently transcribe audio clips up to 24 minutes long in a single pass, which makes it suitable for developers, researchers, and applications across many industries.

Target users:

"This model is suitable for developers, researchers, and industry professionals, especially teams building speech-to-text applications. The high accuracy and flexibility of parakeet-tdt-0.6b-v2 make it a strong choice for speech recognition."

Example usage scenarios:

Real-time transcription in voice assistants.

Transcribing classroom lectures in educational applications.

Automatic meeting transcription for minutes and summary generation.

Product Features:

Accurate word-level timestamp prediction: Provides detailed timestamp information for each word.

Automatic punctuation and capitalization: Improves the readability of transcripts.

Robust handling of spoken numbers and song lyrics: Accurately transcribes numbers and lyrics.

16 kHz audio input: Accepts common audio formats such as .wav and .flac.

Long-form audio up to 24 minutes: Transcribes long recordings in a single pass for better efficiency.

Runs on a variety of NVIDIA GPUs: Optimized for fast training and inference.

Broad range of applications: Suitable for conversational AI, voice assistants, transcription services, subtitle generation, and more.

How to use:

Install the latest version of PyTorch, then install the NVIDIA NeMo toolkit (for example, pip install -U nemo_toolkit["asr"]).

Load the model (the checkpoint is downloaded automatically on first use) with the following Python code: import nemo.collections.asr as nemo_asr; asr_model = nemo_asr.models.ASRModel.from_pretrained(model_name='nvidia/parakeet-tdt-0.6b-v2')

Prepare 16 kHz audio files in .wav or .flac format.
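Before transcribing, it can help to verify that each file matches the expected input. The sketch below uses only Python's standard wave module, so it handles uncompressed .wav files but not .flac. The helper name check_wav is hypothetical, and the mono-channel check is an assumption of this sketch rather than a requirement stated above; the 16 kHz rate and the 24-minute limit come from the description.

```python
import wave

TARGET_RATE = 16_000   # 16 kHz input, as described above
MAX_SECONDS = 24 * 60  # 24-minute single-pass limit, as described above


def check_wav(path):
    """Return a list of problems found in a .wav file; an empty list means OK.

    Only .wav is handled: the standard-library wave module cannot read .flac.
    """
    with wave.open(path, "rb") as wf:
        rate = wf.getframerate()
        channels = wf.getnchannels()
        duration = wf.getnframes() / rate

    problems = []
    if rate != TARGET_RATE:
        problems.append(f"sample rate is {rate} Hz, expected {TARGET_RATE} Hz")
    # Assumption of this sketch: single-channel (mono) audio is expected.
    if channels != 1:
        problems.append(f"audio has {channels} channels, expected mono")
    if duration > MAX_SECONDS:
        problems.append(f"duration is {duration:.1f} s, limit is {MAX_SECONDS} s")
    return problems
```

Files that report problems can be resampled or split with an audio tool of choice before transcription.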

Run transcription with: output = asr_model.transcribe(['audio file path']). The transcript text of the first file is available as output[0].text.
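To transcribe several files at once and keep each transcript next to its source path, a small wrapper can be used. This is a sketch, not part of NeMo: transcribe_files is a hypothetical helper, and it assumes asr_model is the object loaded with ASRModel.from_pretrained and that transcribe() returns one result per input file, each either an object with a .text attribute or a plain string.

```python
def transcribe_files(asr_model, paths):
    """Transcribe several audio files and map each path to its transcript text.

    Assumption: asr_model.transcribe() returns one result per input path,
    in the same order as the inputs.
    """
    paths = list(paths)  # materialize once so iterators can be passed safely
    outputs = asr_model.transcribe(paths)
    # Use the .text attribute when present (hypothesis objects);
    # otherwise fall back to the raw value (e.g. a plain string).
    return {path: getattr(out, "text", out) for path, out in zip(paths, outputs)}
```

For example, transcribe_files(asr_model, ['lecture.wav', 'meeting.flac']) would return a dictionary mapping each path to its transcript.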

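If timestamps are required, pass timestamps=True: output = asr_model.transcribe(['audio file path'], timestamps=True). Word-, segment-, and character-level timestamps are then available as output[0].timestamp['word'], output[0].timestamp['segment'], and output[0].timestamp['char'].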
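For the subtitle-generation use case, segment timestamps can be turned into SubRip (.srt) subtitles. This sketch assumes that segment entries (output[0].timestamp['segment'] in NeMo's documented example) are dicts with 'start' and 'end' times in seconds and a 'segment' text field; format_srt_time and segments_to_srt are hypothetical helper names.

```python
def format_srt_time(seconds):
    """Format seconds as an SRT timestamp, e.g. 3.5 -> '00:00:03,500'."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments):
    """Build SRT subtitle text from segment timestamps.

    Assumption: each segment is a dict with 'start' and 'end' (seconds)
    and 'segment' (text) keys.
    """
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = format_srt_time(seg["start"])
        end = format_srt_time(seg["end"])
        # One SRT cue: sequence number, time range, then the subtitle text.
        blocks.append(f"{index}\n{start} --> {end}\n{seg['segment'].strip()}\n")
    # A blank line separates consecutive cues.
    return "\n".join(blocks)
```

With real model output, the call would look like segments_to_srt(output[0].timestamp['segment']), and the returned string can be written to a .srt file.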

Post-process the transcription output as needed, for example for text analysis or storage.
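As one possible storage format, transcripts can be written to a JSON Lines file, one record per audio file, together with a simple derived statistic. This is a sketch under stated assumptions: save_transcripts and load_transcripts are hypothetical helpers, the input is assumed to be a mapping from audio path to transcript text, and the word count stands in for whatever text analysis an application actually needs.

```python
import json


def save_transcripts(records, path):
    """Write {audio_path: text} transcripts to a JSON Lines file.

    Each line holds one JSON object; a whitespace-based word count is stored
    alongside the text as a simple example of downstream analysis.
    """
    with open(path, "w", encoding="utf-8") as f:
        for audio_path, text in records.items():
            row = {"audio": audio_path, "text": text, "num_words": len(text.split())}
            f.write(json.dumps(row, ensure_ascii=False) + "\n")


def load_transcripts(path):
    """Read back the rows written by save_transcripts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

JSON Lines keeps each transcript independent, so new results can be appended and large files can be processed line by line.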