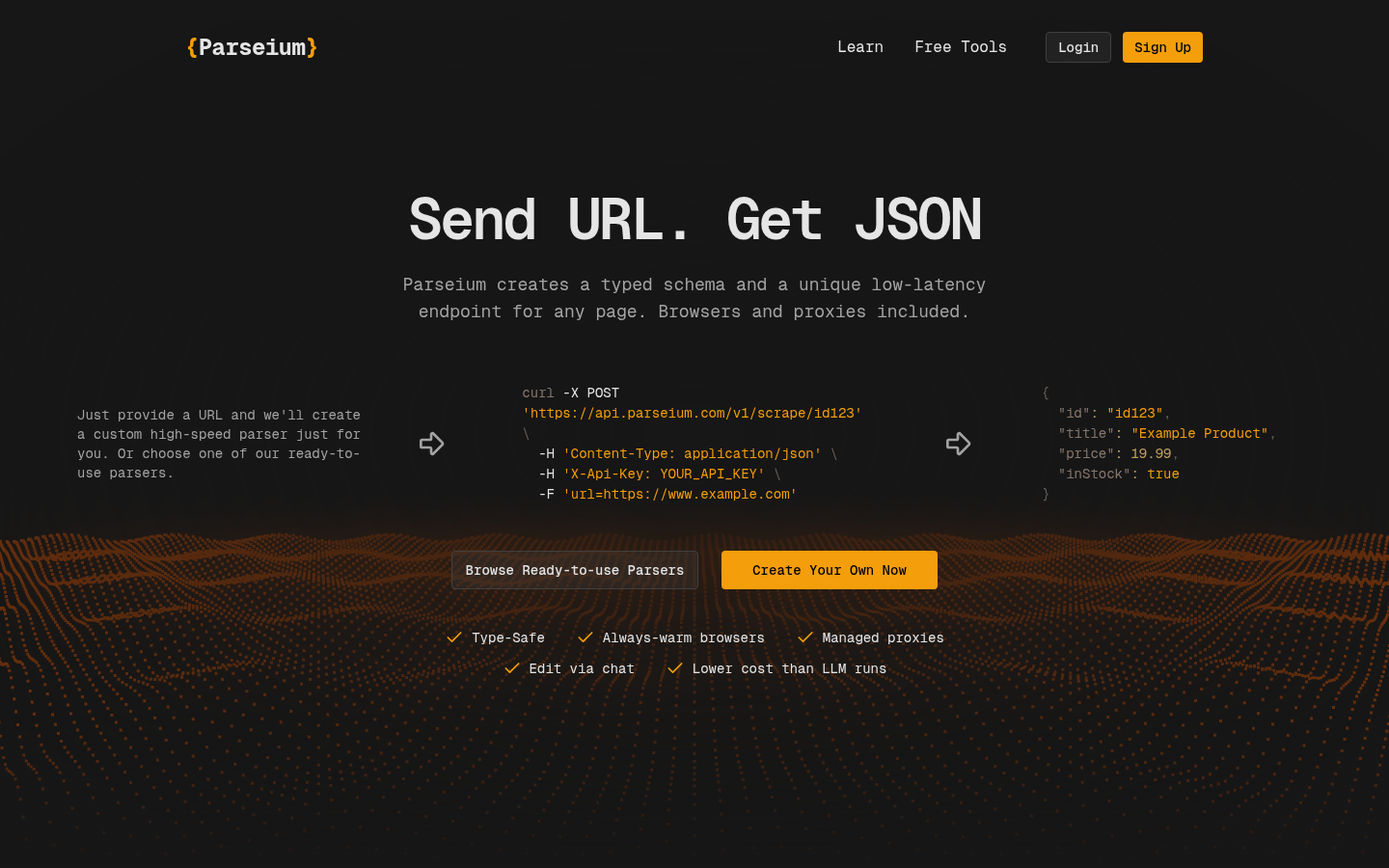

Parseium is an AI-powered web scraping and data extraction platform. It helps users extract data from complex websites and convert it into structured form. Its main advantages are that no coding is required, since users build customized web crawlers with the help of artificial intelligence, and that it integrates with applications through an API so the extracted data can be processed further. As the volume of data on the Internet has grown, enterprises and individuals increasingly need efficient, convenient extraction tools, and Parseium was built to meet that demand. Pricing is not stated in the source material; a free trial or a paid plan is possible but unconfirmed. The product is positioned as a simple, efficient solution for users with data extraction and processing needs.

Target users:

Data analysts: Data analysts need to collect and organize data from various websites. Parseium's no-coding approach and data extraction functions can help them quickly obtain the data they need and convert it into structured form for analysis and visualization.

Enterprise market researchers: Enterprise market researchers need to understand competitor information, market trends, and similar signals. Parseium can help them extract relevant data from the Internet to support corporate decision-making.

Developers: When developing applications, developers may need to obtain data from websites. Parseium's API integration can help them bring website data into their own applications and improve development efficiency.

Example usage scenarios:

Enterprise market research: Enterprises can use Parseium to extract product information, price information, customer reviews and other data from competitors' websites to better understand market dynamics and competitor situations.

Data research institutions: Data research institutions can use Parseium to extract relevant data from various academic websites and news websites for data analysis and research.

E-commerce platform: E-commerce platforms can use Parseium to extract product information from supplier websites and update their own product databases, keeping product information accurate and current.

Product features:

Use artificial intelligence technology to build custom web crawlers: Parseium allows users to use advanced artificial intelligence technology to build personalized web crawlers according to their own specific needs without writing complex code, greatly saving development time and costs.

Extract data from complex websites: Parseium is designed to extract the required data reliably from dynamic websites with complex structures and from websites that use anti-crawler mechanisms, with the aim of keeping the extracted data complete and accurate.

Convert websites into structured data: Parseium can process and organize the raw data extracted from the website into structured data that is easy to analyze and use, making it convenient for users to perform subsequent data processing and analysis.

Integration with applications through API: Users can integrate the data extracted by Parseium into their own applications through the API, so that data can be updated and shared in real time and work efficiency improves.

Provides a variety of free tools: The platform provides a series of free tools, such as HTTP request analyzer, link extractor, meta tag extractor, table extractor, etc., to help users perform data extraction and analysis more comprehensively.
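
To make the idea of a link extractor and a meta tag extractor concrete, here is a minimal sketch using only the Python standard library. It is not Parseium's implementation and does not call any Parseium API; the class name `LinkAndMetaExtractor`, the sample HTML, and the `example.com` base URL are all assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkAndMetaExtractor(HTMLParser):
    """Illustrative only: collect absolute links and meta tags from HTML."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []   # absolute URLs found in <a href="...">
        self.meta = {}    # meta name/property -> content

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            # Resolve relative links against the page URL.
            self.links.append(urljoin(self.base_url, attrs["href"]))
        elif tag == "meta":
            key = attrs.get("name") or attrs.get("property")
            if key and attrs.get("content") is not None:
                self.meta[key] = attrs["content"]


# Hypothetical sample page; a real tool would fetch this over HTTP.
SAMPLE_HTML = """
<html><head>
<meta name="description" content="Example shop">
<meta property="og:title" content="Shop">
</head><body>
<a href="/products">Products</a>
<a href="https://example.org/about">About</a>
</body></html>
"""

extractor = LinkAndMetaExtractor("https://example.com/")
extractor.feed(SAMPLE_HTML)
print(extractor.links)
print(extractor.meta)
```

A hosted tool would presumably also handle pages rendered with JavaScript and malformed markup, which this sketch does not attempt.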

Usage tutorial:

1. Visit the official Parseium website (https://www.Parseium.com), register and log in to your account.

2. Depending on your needs, choose the appropriate free tool or use artificial intelligence capabilities to build a custom web crawler.

3. Configure the parameters of the web crawler, such as the URL of the target website, the data fields that need to be extracted, etc.

4. Start the web crawler and wait for the data extraction to complete.

5. Convert the extracted data into structured data and integrate it into your own application through the API for further processing and analysis.
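
Steps 3 and 5 can be pictured with a small sketch. Parseium's actual configuration format and API are not described here, so everything below is hypothetical: the `CrawlerConfig` fields, the `to_records` helper, the placeholder URL, and the sample rows standing in for crawler output. The sketch only shows the general shape of pairing configured field names with extracted values and serializing the result for an application.

```python
import json
from dataclasses import dataclass, field


@dataclass
class CrawlerConfig:
    """Hypothetical settings for step 3; not Parseium's actual schema."""
    target_url: str
    fields: list = field(default_factory=list)


def to_records(raw_rows, config):
    """Step 5: pair each raw row with the configured field names."""
    records = []
    for row in raw_rows:
        if len(row) != len(config.fields):
            raise ValueError(
                f"expected {len(config.fields)} values, got {len(row)}"
            )
        records.append(dict(zip(config.fields, row)))
    return records


config = CrawlerConfig(
    target_url="https://example.com/products",  # placeholder URL
    fields=["name", "price"],
)

# Stand-in for whatever the crawler returns in step 4.
raw_rows = [["Widget", "9.99"], ["Gadget", "24.50"]]

records = to_records(raw_rows, config)

# JSON is a common interchange format for handing data to an application.
payload = json.dumps({"source": config.target_url, "records": records}, indent=2)
print(payload)
```

Rejecting rows whose length does not match the configured fields is a deliberate choice in this sketch: a mismatch usually means the page layout changed, and failing early is easier to debug than silently shifted columns.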