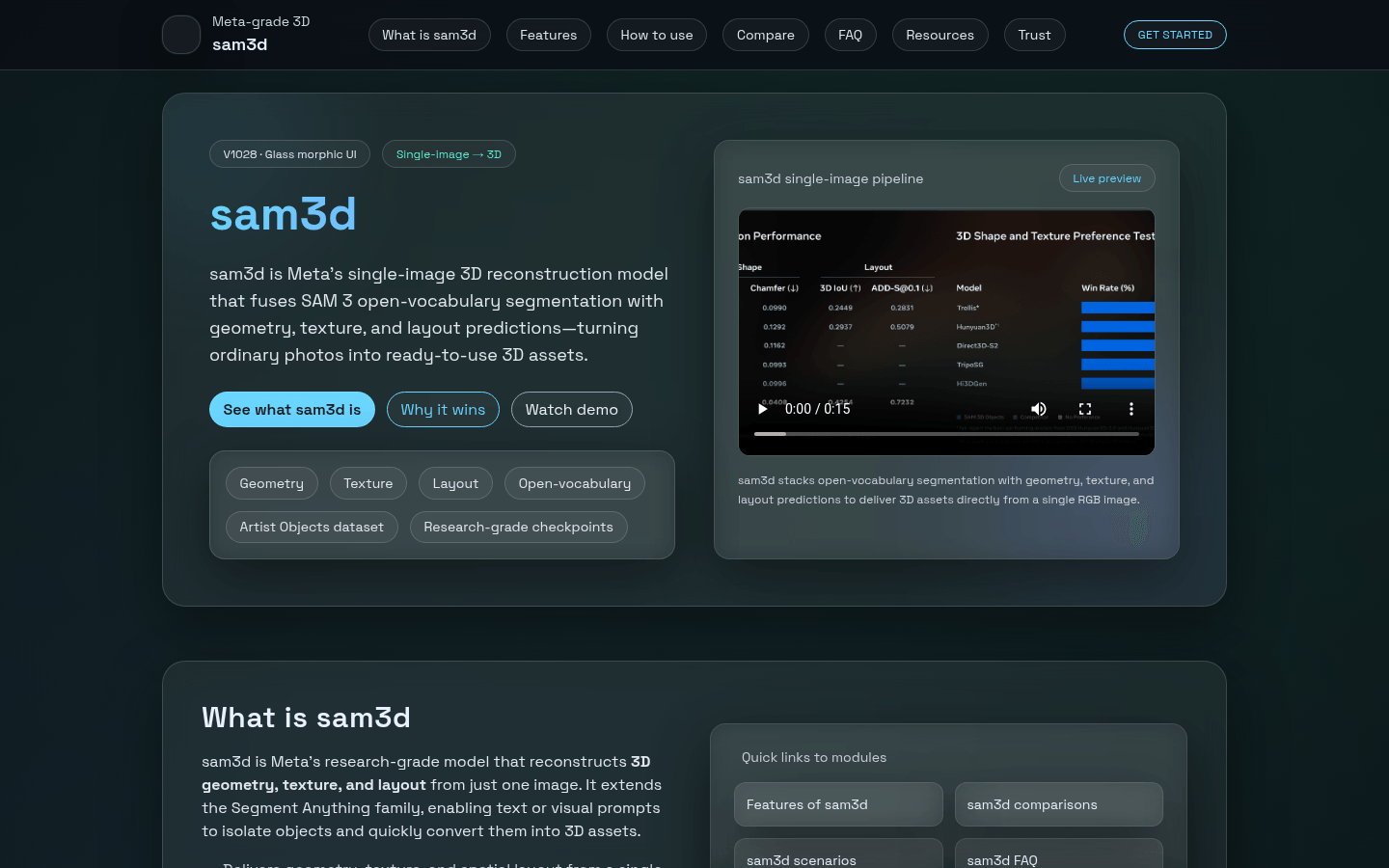

Sam3D is a research-grade single-image 3D reconstruction model from Meta. It combines SAM 3's open-vocabulary segmentation with geometry, texture, and layout prediction to generate 3D assets directly from one RGB photo. Meta has released open-source checkpoints, inference code, and benchmark datasets to support reproducible research and production pilots. The model matters because it lowers the hardware and setup cost of 3D reconstruction and makes the process faster. Key advantages include single-image input, open-vocabulary segmentation, an open ecosystem, XR readiness, low-effort data capture, and a clear evaluation suite. It is free and open source, and targets creative tools, e-commerce AR shopping, robot perception, and scientific visualization.

Target users:

Creative producers: Turn a single photo of a product or prop into a 3D asset, then refine it in Blender or a game engine to speed up game, CGI, and social-content production.

E-commerce teams: Build "view in your room" features from a single product photo, using SAM 3 segmentation and Sam3D reconstruction to render products instantly in an AR viewer and improve the shopping experience.

Robotics R&D teams: Infer shape and free space from camera images when depth data is unavailable, giving robot perception a 3D prior that complements the LiDAR stack.

Medical and scientific researchers: Convert 2D scans or microscope images into 3D form for inspection, and fine-tune Sam3D for anatomy, biology, or other lab domains.

Example usage scenarios:

Creative production: Reconstruct products or props from a single photo, then refine them in Blender or a game engine to speed up game, CGI, and social-content production.
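
Getting a reconstructed asset into Blender usually means writing it to an interchange format. The following is a minimal sketch, assuming the reconstruction has already been converted into plain vertex and triangle lists (the Sam3D code's actual output format is not covered here). It writes Wavefront OBJ text, a format that Blender and many game engines can import. `to_obj` is a hypothetical helper, and texture/material (MTL) export is omitted.

```python
from typing import Sequence, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]


def to_obj(vertices: Sequence[Vertex], faces: Sequence[Face]) -> str:
    """Serialize a triangle mesh to Wavefront OBJ text.

    `faces` use 0-based vertex indices; OBJ expects 1-based indices,
    so each index is shifted by one on output.
    """
    lines = ["# mesh exported from a (hypothetical) list-based reconstruction"]
    for x, y, z in vertices:
        lines.append(f"v {x:.6f} {y:.6f} {z:.6f}")
    for a, b, c in faces:
        for idx in (a, b, c):
            if not 0 <= idx < len(vertices):
                raise IndexError(f"face index {idx} out of range")
        lines.append(f"f {a + 1} {b + 1} {c + 1}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # A single triangle standing in for a reconstructed prop.
    verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    tris = [(0, 1, 2)]
    print(to_obj(verts, tris))
```

Saving the returned string as `asset.obj` lets you open it through Blender's OBJ importer for cleanup and retopology.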

E-commerce AR shopping: Build a "view in your room" feature from a single product photo: SAM 3 segments the product, Sam3D reconstructs it, and the result renders instantly in an AR viewer.

Robot perception: When depth data is unavailable, infer shape and free space from camera images to give robot perception a 3D prior that complements the LiDAR stack.
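
One common way a robot stack consumes a 3D prior for free space is a top-down occupancy grid. This is a sketch under stated assumptions: the reconstructed geometry is available as points in a robot-centred frame (x forward, y left, z up, in metres). The function name, grid size, cell size, and height band are all illustrative choices, not part of Sam3D.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]


def occupancy_grid(
    points: Iterable[Point],
    size_m: float = 4.0,
    cell_m: float = 0.5,
    z_min: float = 0.05,
    z_max: float = 1.5,
) -> List[List[bool]]:
    """Project points into a square top-down grid centred on the robot.

    Rows index y and columns index x. A cell is occupied if any point whose
    height lies in [z_min, z_max] falls inside it; lower points are treated
    as floor and higher points as overhead clearance. Unoccupied cells are
    candidate free space.
    """
    n = int(round(size_m / cell_m))
    grid = [[False] * n for _ in range(n)]
    half = size_m / 2.0
    for x, y, z in points:
        if not (z_min <= z <= z_max):
            continue
        col = math.floor((x + half) / cell_m)
        row = math.floor((y + half) / cell_m)
        if 0 <= row < n and 0 <= col < n:  # ignore points outside the grid
            grid[row][col] = True
    return grid
```

A real pipeline would also mark cells the camera never observed as unknown rather than free; this sketch deliberately keeps only the occupied/unoccupied split.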

Product features:

Single-image 3D inference: Infers complete 3D shape, texture, and layout from a single RGB photo. In many workflows this replaces multi-view or LiDAR capture and greatly simplifies data acquisition.

Open-vocabulary segmentation: SAM 3's text, point, and box prompts isolate individual objects, so you can generate 3D assets of specific targets from natural-language or visual cues.
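
After a prompt has produced a binary mask, the object is typically cut out before reconstruction. A minimal sketch follows, assuming the image is a row-major grid of RGB tuples and the mask is a same-sized grid of booleans from any segmenter (SAM 3's actual output types are not shown here). It pastes the masked pixels onto a flat background and crops to the mask's bounding box. `isolate_object` and its parameters are hypothetical.

```python
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]


def isolate_object(
    image: List[List[RGB]],
    mask: List[List[bool]],
    background: RGB = (255, 255, 255),
    pad: int = 1,
) -> Optional[List[List[RGB]]]:
    """Paste masked pixels onto a flat background and crop to the mask's bounding box.

    Returns None if the image is empty or the mask selects no pixels
    (for example, when a prompt matched nothing).
    """
    if not image or not image[0]:
        return None
    h, w = len(image), len(image[0])
    if len(mask) != h or any(len(row) != w for row in mask):
        raise ValueError("mask must have the same shape as the image")
    rows = [r for r in range(h) if any(mask[r])]
    if not rows:
        return None
    cols = [c for c in range(w) if any(mask[r][c] for r in range(h))]
    # Expand the bounding box by `pad` pixels, clamped to the image edges.
    top, bottom = max(rows[0] - pad, 0), min(rows[-1] + pad, h - 1)
    left, right = max(cols[0] - pad, 0), min(cols[-1] + pad, w - 1)
    return [
        [image[r][c] if mask[r][c] else background for c in range(left, right + 1)]
        for r in range(top, bottom + 1)
    ]
```

In practice you would do the same with array libraries on full-resolution images; the nested lists here just keep the example dependency-free.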

Open ecosystem: Ships checkpoints, inference code, and benchmark datasets (such as Artist Objects and SAM 3D Body) that support reproducible research and production pilots and encourage collaboration between academia and industry.

XR ready: Fits into AR/VR pipelines, so single-image scans can be imported into virtual rooms, mixed-reality scenes, and immersive storytelling.

Efficient input: Simplifies data collection and works with images you already have, including old photos, user-generated content, and single product shots.
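
Old photos and user-generated content vary widely in quality, so it can help to screen them before running inference. The sketch below checks two conditions from the usage tips, balanced lighting and sharpness, on a grayscale image given as rows of 0-255 values. It uses mean brightness and the variance of a 4-neighbour Laplacian, a common blur heuristic. Every threshold is an illustrative assumption to tune on your own data, and `preflight` is a hypothetical name.

```python
from statistics import mean, pvariance
from typing import Dict, List


def preflight(
    gray: List[List[float]],
    dark: float = 50.0,
    bright: float = 205.0,
    min_sharpness: float = 100.0,
) -> Dict[str, object]:
    """Flag images that are likely too dark, too bright, or too blurry."""
    h = len(gray)
    w = len(gray[0]) if gray else 0
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3")
    brightness = mean(v for row in gray for v in row)
    # Discrete Laplacian at interior pixels; low variance suggests blur.
    lap = [
        gray[r - 1][c] + gray[r + 1][c] + gray[r][c - 1] + gray[r][c + 1] - 4 * gray[r][c]
        for r in range(1, h - 1)
        for c in range(1, w - 1)
    ]
    sharpness = pvariance(lap)
    return {
        "brightness": brightness,
        "sharpness": sharpness,
        "ok": dark <= brightness <= bright and sharpness >= min_sharpness,
    }
```

A flat gray image fails the sharpness check, while a high-contrast pattern passes; real photos land somewhere in between, which is why the thresholds need calibrating.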

Benchmark evaluation: Includes a clear evaluation suite that teams can use to measure model performance, identify domain gaps, and fine-tune where needed, so that accuracy and stability hold up across application scenarios.
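
As an example of a geometric metric a team might compute on its own data when looking for domain gaps, here is a brute-force symmetric Chamfer distance between a predicted and a reference point cloud. This is a common 3D-reconstruction metric, not necessarily the one used in Sam3D's evaluation suite. Conventions vary (squared or unsquared distances, sum or mean); this version averages squared nearest-neighbour distances in each direction.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def _sq_dist(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two 3D points."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def chamfer_distance(pred: Sequence[Point], ref: Sequence[Point]) -> float:
    """Symmetric Chamfer distance using mean squared nearest-neighbour distances.

    Brute force, O(len(pred) * len(ref)); fine for small samples only.
    """
    if not pred or not ref:
        raise ValueError("both point clouds must be non-empty")
    pred_to_ref = sum(min(_sq_dist(p, r) for r in ref) for p in pred) / len(pred)
    ref_to_pred = sum(min(_sq_dist(r, p) for p in pred) for r in ref) / len(ref)
    return pred_to_ref + ref_to_pred
```

For example, `chamfer_distance([(0, 0, 0)], [(1, 0, 0)])` is `2.0`: each direction contributes a squared distance of 1. For dense clouds sampled from full meshes, a spatial index such as a KD-tree replaces the inner `min` loop.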

Usage tutorial:

1. Capture and prompt: Start from a well-lit RGB image and, optionally, use SAM 3's text or box prompts to isolate the target object.

2. Reconstruct: Run inference with the published checkpoints and code; Sam3D predicts geometry, texture, and layout directly.

3. Export and deploy: Export meshes and textures and place them into AR viewers, 3D engines, robot simulators, or marketing experiences.

4. Optimize results: Use sharp images with balanced lighting, minimal occlusion, and simple backgrounds to improve mask quality and geometric accuracy. Use SAM 3 prompts to isolate the objects of interest. Benchmark on your own data and fine-tune for specific domains. For interactive AR/VR scenes, measure latency and cost.
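
For the latency part of the last step, a small timing harness is enough to get started. The sketch assumes you wrap your own inference call in a zero-argument function; `reconstruct_stub` is a hypothetical placeholder, not Sam3D's API. Warm-up runs are excluded so that one-time costs such as model loading do not skew the numbers.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(fn: Callable[[], object], runs: int = 20, warmup: int = 3) -> Dict[str, float]:
    """Time repeated calls to `fn` and report latency statistics in milliseconds."""
    if runs < 1:
        raise ValueError("need at least 1 timed run")
    for _ in range(warmup):
        fn()  # untimed warm-up calls
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank 95th percentile: index ceil(0.95 * n) - 1, computed with integers.
    p95_index = (95 * len(samples) + 99) // 100 - 1
    return {
        "mean_ms": statistics.mean(samples),
        "p50_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


def reconstruct_stub() -> None:
    """Hypothetical stand-in for one image-to-3D inference call."""
    time.sleep(0.002)


if __name__ == "__main__":
    print(measure_latency(reconstruct_stub, runs=10))
```

For a rough per-scan compute cost, multiply the mean latency in seconds by your instance's hourly price divided by 3600. Tail latency (`p95_ms`) usually matters more than the mean for interactive scenes.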