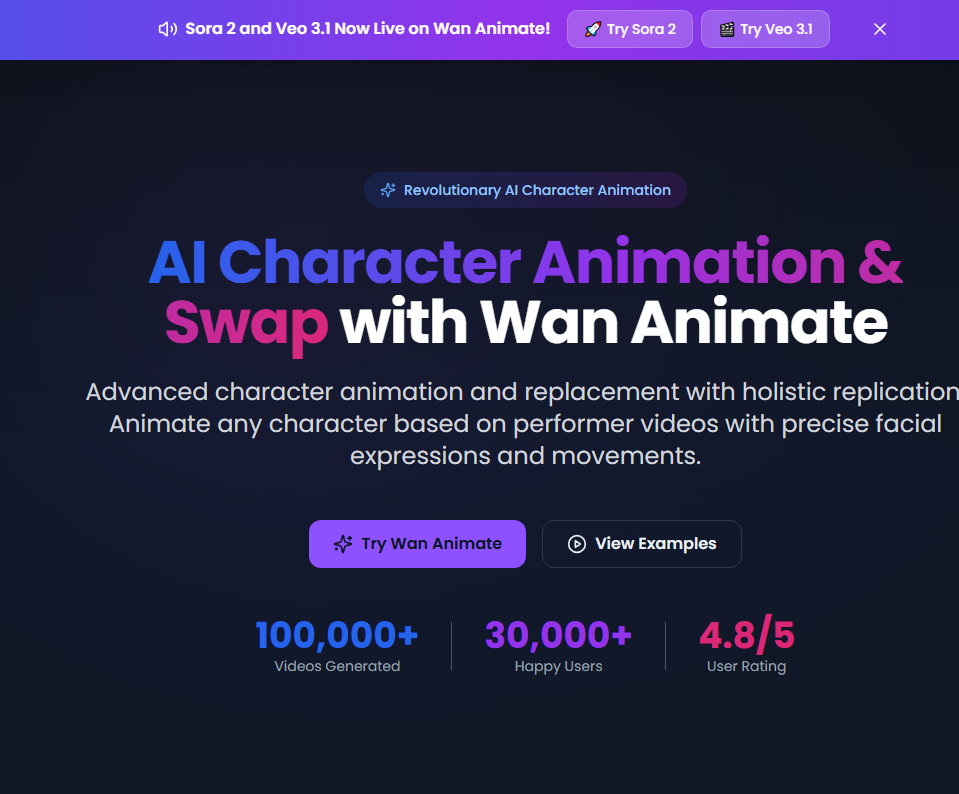

Wan Animate is an AI character animation tool built on Wan 2.2, which uses a Mixture of Experts (MoE) architecture and high-compression video generation. It offers character animation and character replacement with highly realistic results, and its unified framework handles both tasks with a high degree of flexibility and controllability. Pricing is per second of generated video: 480p costs 1 credit per second (minimum 5 credits), and 720p costs 2 credits per second (minimum 10 credits). The product is positioned as a high-quality character animation and video processing solution for content creators, film and television studios, game developers, and users in other industries.
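The credit pricing can be worked through with a short calculation. The following is a minimal sketch, not an official calculator: the function name `estimate_credits` and the `PRICING` table are hypothetical, and rounding partial seconds up to the next whole second is an assumption, since the listing does not say how fractional durations are billed.

```python
import math

# Rates and minimums as stated in the listing.
PRICING = {
    "480p": {"credits_per_second": 1, "minimum_credits": 5},
    "720p": {"credits_per_second": 2, "minimum_credits": 10},
}


def estimate_credits(duration_seconds: float, resolution: str) -> int:
    """Estimate the credit cost of one generated clip (hypothetical helper)."""
    if resolution not in PRICING:
        raise ValueError(f"unknown resolution: {resolution!r}")
    if duration_seconds <= 0:
        raise ValueError("duration must be positive")

    tier = PRICING[resolution]
    # Assumption: partial seconds are billed as a full second.
    billed_seconds = math.ceil(duration_seconds)
    cost = billed_seconds * tier["credits_per_second"]
    # The stated minimum applies whenever the per-second cost falls below it.
    return max(cost, tier["minimum_credits"])
```

Under these assumptions, a 3-second clip costs the minimum at either resolution (5 credits at 480p, 10 credits at 720p), while an 8-second clip costs 8 credits at 480p and 16 credits at 720p.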

Target audience:

Content creators and YouTubers: Wan Animate's Move mode gives their characters realistic expressions and movements, making characters more vivid in videos, tutorials, and entertainment content and improving the appeal and quality of that content.

Movie and animation studios: Mix mode can seamlessly replace actors in existing footage while maintaining environmental consistency. It can also create expressive character animations for pre-production visualization, improving production efficiency and quality.

Game developers and VR/AR studios: Precise motion replication and character consistency suit prototyping character behaviors and creating dynamic game content, adding realism to games and immersive experiences.

Marketing and advertising agencies: The character replacement feature can replace spokespersons in existing videos, animate mascots and brand characters, or create personalized marketing content to strengthen brand image and marketing effectiveness.

Educators and training professionals: They can animate historical figures, scientific concepts, or training scenarios, and produce interactive learning materials and training videos that make educational content more engaging and improve learning outcomes.

Example usage scenarios:

Content creators use the product to animate the characters in their videos, making them more vivid and attracting more viewers.

In pre-production, film studios use the product to create character animations for visualization, which saves production costs.

Marketing agencies use the character replacement feature to create personalized video content for brand advertisements and increase brand influence.

Product features:

Advanced character animation: Any character can be animated from a performer's video. The AI replicates the performer's facial expressions and movements and generates a highly realistic character video, so the character appears to be physically present in the scene.

Efficient character replacement: The character in a video can be replaced with an animated character while the original character's expressions and movements are retained. The original lighting and color tone are also reproduced, so the replacement blends into the original video environment.

Unified framework: A single framework handles both animation and replacement tasks using a universal symbolic representation. This gives users considerable flexibility and control and makes complex tasks easier to complete.

Advanced motion control: Spatially aligned skeletal signals control body movement, and implicit facial features reenact expressions. The result is highly controllable and can meet precise requirements for character movements and expressions.

Environment fusion: Relighting LoRA applies the appropriate environmental lighting and color tone while preserving the character's appearance, so the character integrates seamlessly with the environment and the video looks more natural.

Support for multiple file formats: Character images and reference performer videos are accepted in various formats, which makes it easy to supply source material. The system automatically extracts motion and expression data for subsequent processing.

Sample videos: Sample videos let users see the product's features and results directly and serve as inspiration and reference for their own work.

Usage tutorial:

1. Provide character and video material: Upload a character image and a reference performer video. Multiple formats are supported, and the system automatically extracts movement and expression data.

2. Run AI processing: Wan 2.2's Mixture of Experts (MoE) architecture processes the skeletal signals and facial features to ensure a high degree of controllability and realistic results.

3. Generate and export: Once processing is complete, you get a high-fidelity character video with precise expressions and movements, ready to export and use.
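The inputs gathered in step 1 can be summarized as a small data structure. This is a sketch for illustration only: `AnimationJob` and its field names are hypothetical and do not correspond to any published Wan Animate API. The mode names (Move, Mix) and resolutions (480p, 720p) are the ones mentioned in this listing, and the file names are placeholders.

```python
from dataclasses import dataclass

MODES = {"move", "mix"}         # Move animates a character; Mix replaces one in footage
RESOLUTIONS = {"480p", "720p"}  # the two resolutions named in the pricing


@dataclass(frozen=True)
class AnimationJob:
    """Hypothetical container for the materials a user supplies in step 1."""
    character_image: str   # path to the character image
    performer_video: str   # path to the reference performer video
    mode: str = "move"
    resolution: str = "480p"

    def __post_init__(self) -> None:
        # Reject incomplete or unsupported requests before any processing starts.
        if not self.character_image or not self.performer_video:
            raise ValueError("a character image and a performer video are both required")
        if self.mode not in MODES:
            raise ValueError(f"mode must be one of {sorted(MODES)}")
        if self.resolution not in RESOLUTIONS:
            raise ValueError(f"resolution must be one of {sorted(RESOLUTIONS)}")


# Example: a replacement (Mix) job at 720p with placeholder file names.
job = AnimationJob("hero.png", "dance.mp4", mode="mix", resolution="720p")
```

Validating the request up front mirrors the order of the tutorial: the materials and settings are fixed first, and processing and export follow from them.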