Xiaomi MiMo is Xiaomi's first open-source reasoning model. It is designed specifically for reasoning tasks and is strong at mathematical reasoning and code generation. The model performs well on public benchmarks for mathematical reasoning (AIME 24-25) and competitive programming (LiveCodeBench v5). With only 7B parameters, it surpasses larger models such as OpenAI's o1-mini and Alibaba Qwen's QwQ-32B-Preview. MiMo's reasoning ability comes from innovations at several levels of the pre-training and post-training stages, including data mining, training strategies, and reinforcement learning algorithms. The open-source release gives researchers and developers a capable tool for advancing AI reasoning.

Target audience:

Xiaomi MiMo is suited to researchers, developers, and enterprises that need efficient reasoning. Its mathematical reasoning and code generation capabilities give it broad application prospects in academic research, software development, data analysis, and education. For researchers, MiMo is a reasoning tool that supports further work on AI reasoning. For developers, MiMo can be integrated into applications to make them more intelligent. For enterprises, MiMo can be used to optimize business processes and improve decision-making efficiency.

Example use cases:

Researchers can use MiMo to study complex mathematical reasoning and to improve model performance on mathematical problems.

Developers can integrate MiMo into a code editor to give programmers real-time code suggestions and optimization proposals.

Enterprises can use MiMo's reasoning capabilities to optimize business processes, such as risk assessment and forecasting in finance.

Product Features:

The pre-training stage focuses on mining reasoning-rich corpora and synthesizes about 200B tokens of reasoning data, so that the model is exposed to more reasoning patterns.

Pre-training proceeds in three stages of gradually increasing difficulty, with a total training volume of 25T tokens, to improve the model's reasoning ability across the board.

In the post-training stage, a Test Difficulty Driven Reward strategy is proposed to alleviate the sparse-reward problem on difficult algorithmic problems, and an Easy Data Re-Sampling strategy is introduced to stabilize RL training.

A Seamless Rollout system is designed to accelerate RL training and validation, speeding them up by 2.29 times and 1.96 times, respectively.

On public benchmarks for mathematical reasoning and competitive programming, MiMo-7B is significantly ahead of other models of the same scale.

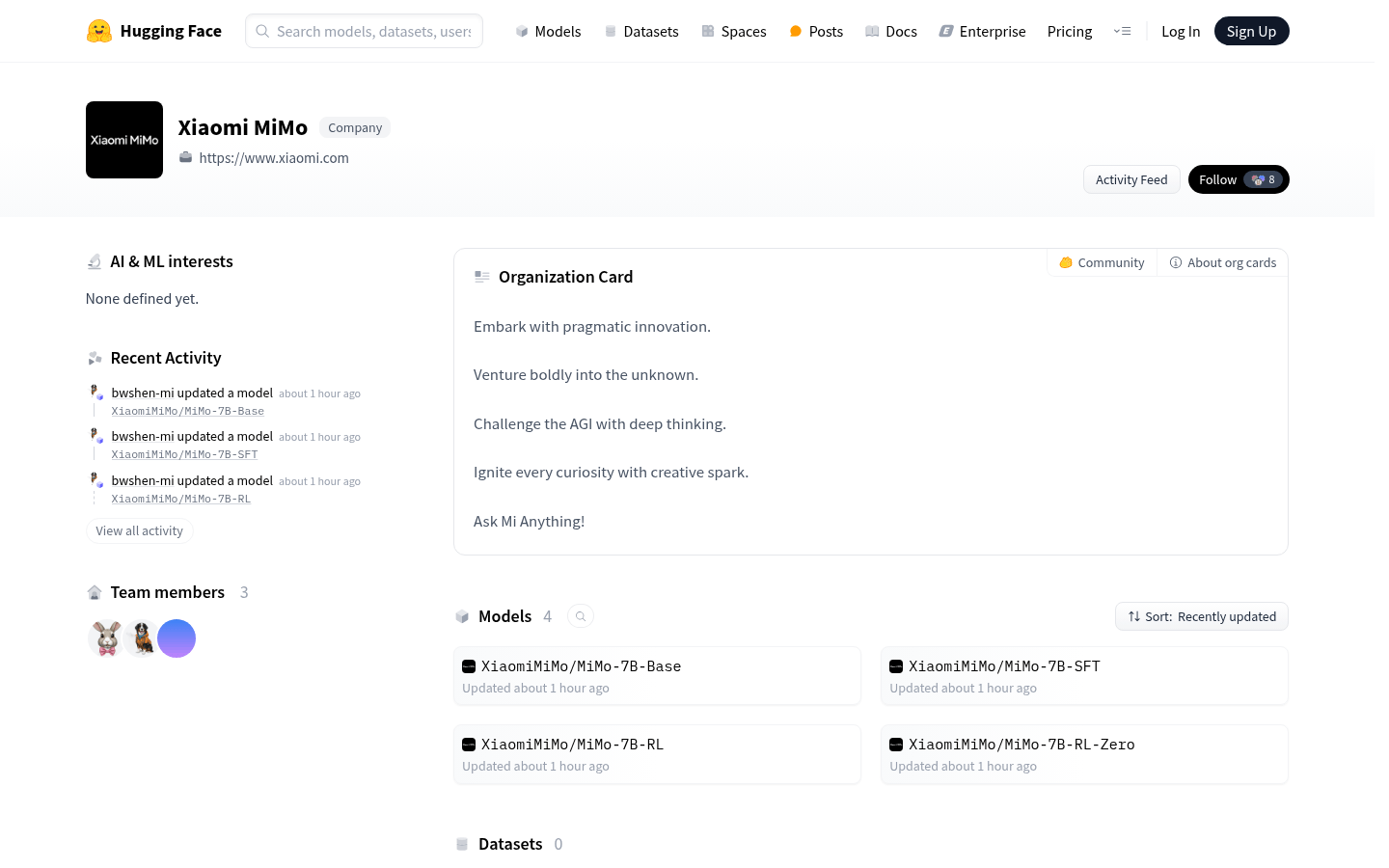

The entire MiMo-7B series is open source, with 4 models published on HuggingFace for researchers and developers to use.

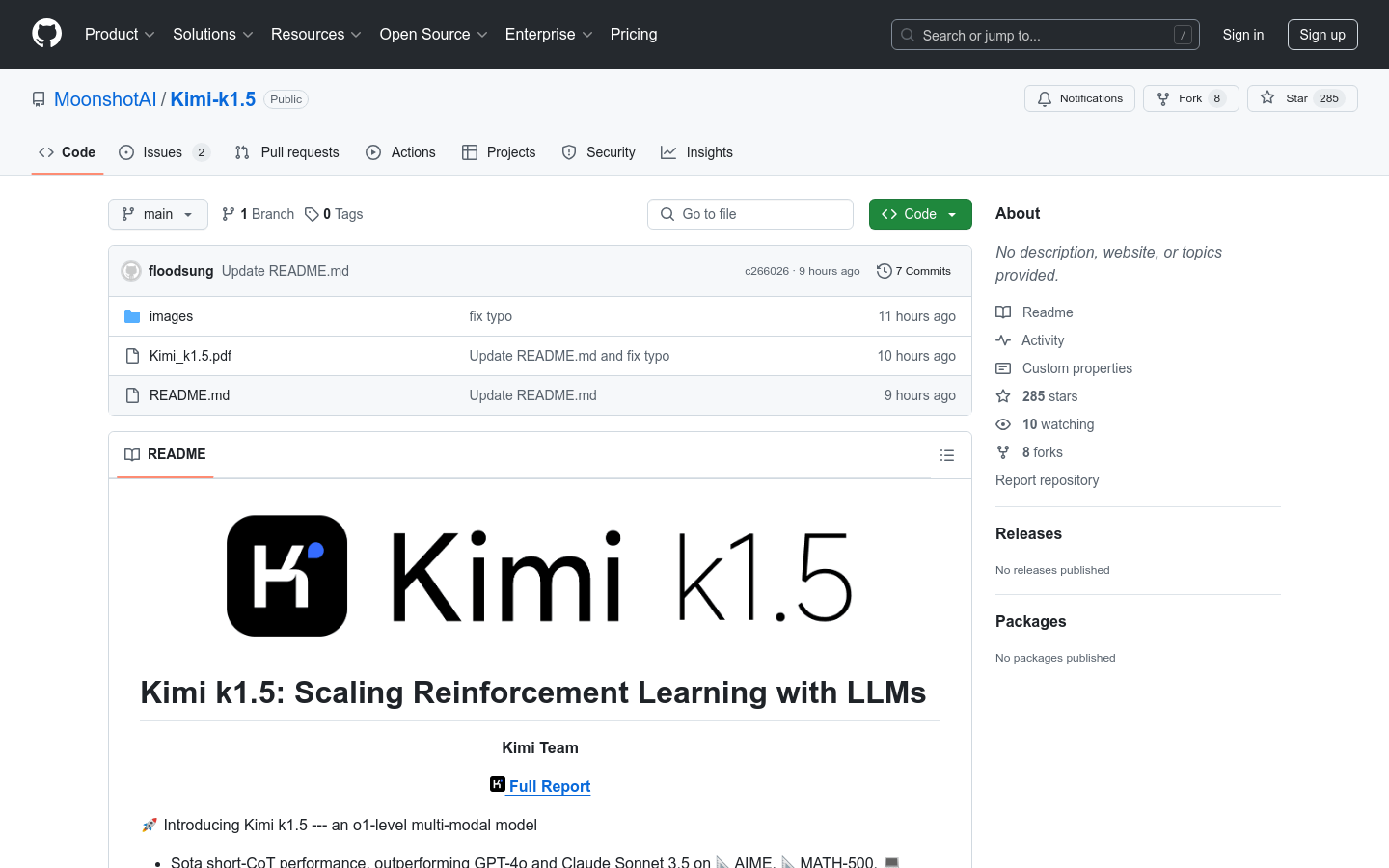

Technical details have been published on GitHub, including the full training report and technical documentation, to support community discussion and further research.

The MiMo model was developed by Xiaomi's large model Core team and demonstrates Xiaomi's innovation and technical strength in artificial intelligence.
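
To make the idea behind the Test Difficulty Driven Reward more concrete, the following is a minimal sketch of a partial-credit reward for code problems. It is an illustration under stated assumptions, not the MiMo team's implementation: the function name `difficulty_weighted_reward`, the difficulty labels, and the per-group scores are all hypothetical. The only point it shows is how grouping test cases by difficulty can yield a non-zero reward when a solution passes some tests but not all, where an all-or-nothing reward would return zero.

```python
from typing import Dict, List


def difficulty_weighted_reward(
    passed: Dict[str, List[bool]],
    group_scores: Dict[str, float],
) -> float:
    """Return a partial-credit reward in [0, 1].

    `passed` maps a difficulty label to per-test pass/fail results.
    `group_scores` maps the same labels to the score assigned to that group.
    Labels and scores are hypothetical choices made for this sketch.
    """
    total = sum(group_scores.values())
    if total <= 0:
        raise ValueError("group_scores must sum to a positive value")

    earned = 0.0
    for label, results in passed.items():
        if not results:
            continue  # a group without tests contributes nothing
        # Split the group's score evenly across the tests in that group.
        per_test = group_scores[label] / len(results)
        earned += per_test * sum(results)
    return earned / total


# A solution that passes the easy tests, half of the medium tests,
# and none of the hard tests still receives some reward.
example_reward = difficulty_weighted_reward(
    passed={"easy": [True, True], "medium": [True, False], "hard": [False]},
    group_scores={"easy": 1.0, "medium": 2.0, "hard": 3.0},
)
```

Here the harder groups carry more weight, so passing a hard test moves the reward more than passing an easy one. How MiMo actually defines difficulty groups and scores is described in its technical report.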

How to use:

Visit the MiMo model page on HuggingFace:

Download the version of the MiMo model that you need.

Use the APIs or tools provided by HuggingFace to load the model and run inference.

Fine-tune the model as needed for a specific reasoning task or dataset.

Consult MiMo's technical report and documentation for details on how the model was trained and how best to use it.
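
The load-and-infer step above could look roughly like the following sketch, which uses the HuggingFace `transformers` library. Several things here are assumptions rather than facts from this page: the repository id `XiaomiMiMo/MiMo-7B-RL`, the need for `trust_remote_code=True`, and the presence of a chat template in the checkpoint. Check the model page for the exact id and the recommended settings. The helper names `build_messages` and `ask_mimo` are hypothetical.

```python
from typing import Dict, List

# Assumed repository id; confirm it on the HuggingFace model page.
MODEL_ID = "XiaomiMiMo/MiMo-7B-RL"


def build_messages(question: str) -> List[Dict[str, str]]:
    """Wrap a question in the chat-message format used by chat templates."""
    return [{"role": "user", "content": question}]


def ask_mimo(question: str, max_new_tokens: int = 512) -> str:
    """Load the model, generate an answer, and return the decoded text."""
    # Imported here so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, trust_remote_code=True, torch_dtype="auto"
    )

    # Render the chat template to text, then tokenize it.
    prompt = tokenizer.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False)
    inputs = inputs.to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


if __name__ == "__main__":
    print(ask_mimo("What is the sum of the first 100 positive integers?"))
```

Running the script downloads the model weights on first use, so it needs enough disk space and memory for a 7B-parameter model. Reasoning models tend to produce long outputs, so `max_new_tokens` may need to be raised for harder problems.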