What is EXAONE-3.5-24B-Instruct-GGUF?

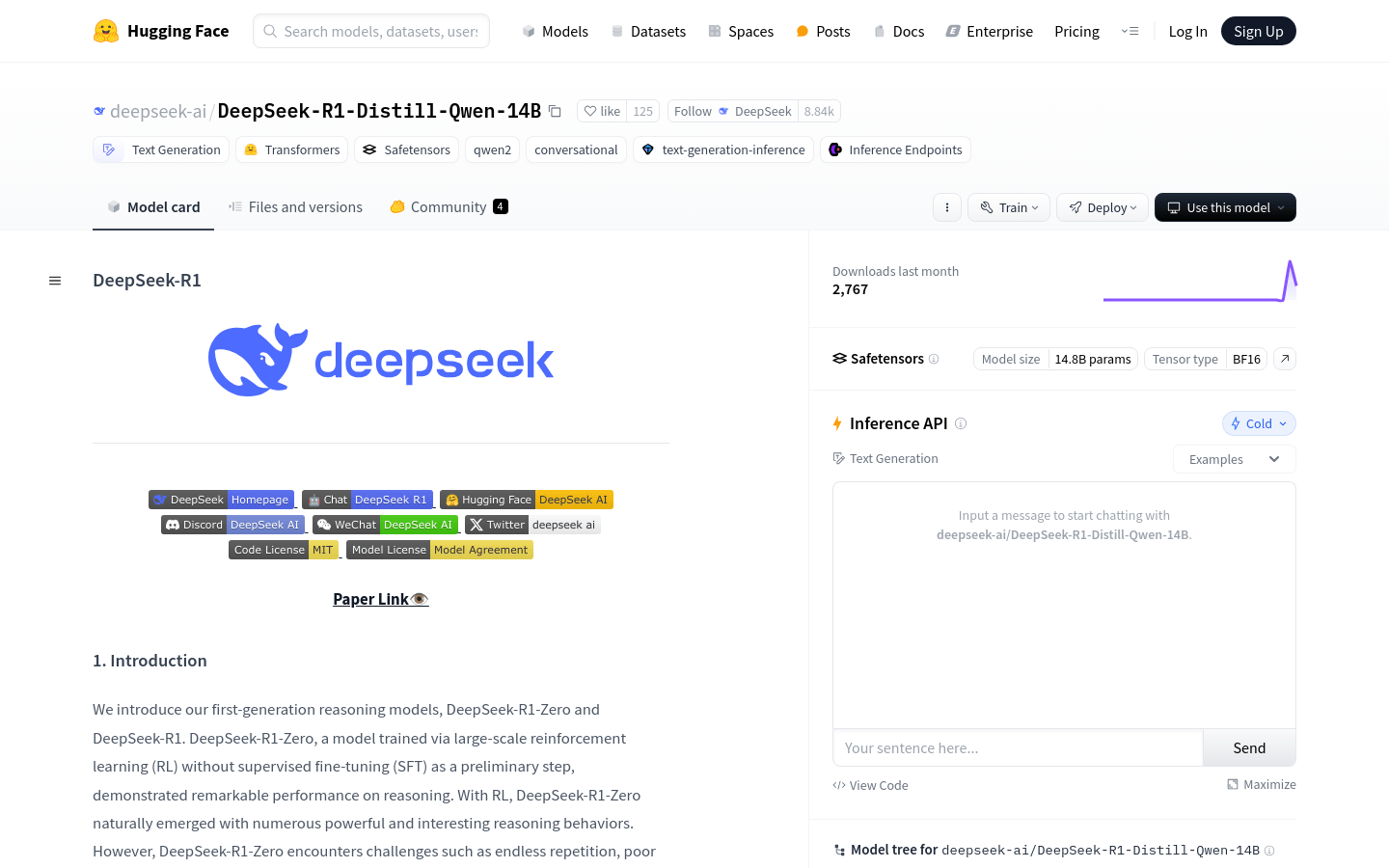

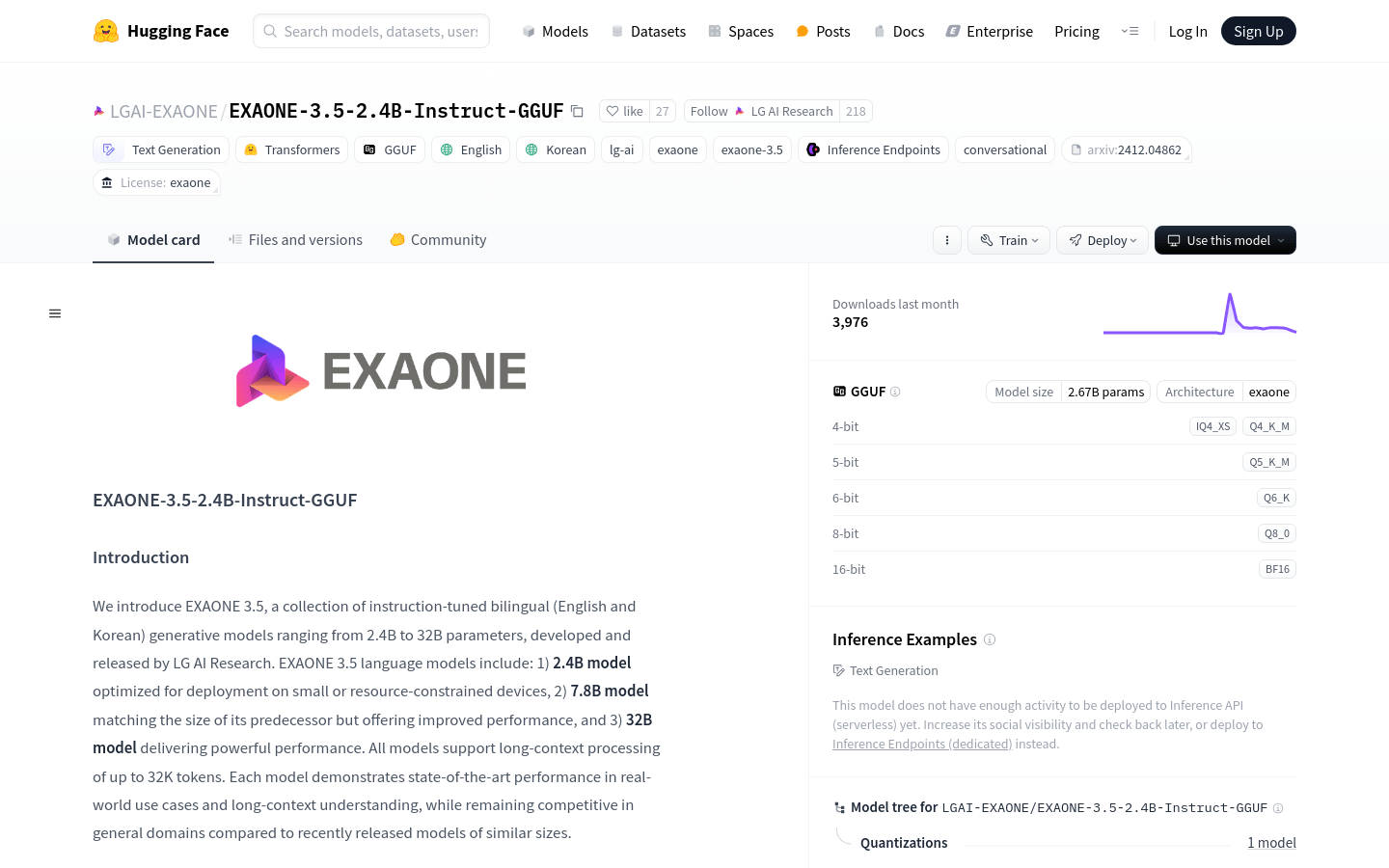

EXAONE-3.5-24B-Instruct-GGUF belongs to EXAONE 3.5, a series of bilingual (English and Korean) instruction-tuned generative models developed by LG AI Research. The series ranges from 2.4 billion to 32 billion parameters. The models support long-context processing of up to 32K tokens. LG AI Research reports state-of-the-art performance in real-world use cases and long-context understanding, along with competitive general-domain performance against recently released models of similar size. This model is optimized for deployment on small or resource-constrained devices.

Who can benefit from using EXAONE-3.5-24B-Instruct-GGUF?

This model suits researchers and developers who need to deploy a capable language model on resource-limited devices, as well as application developers who need to handle long inputs and generate bilingual (English and Korean) text. Its deployment-oriented optimization and 32K-token context window make it particularly useful in these settings.

In what scenarios can EXAONE-3.5-24B-Instruct-GGUF be used?

Researchers can use the EXAONE-3.5-24B-Instruct-GGUF model for semantic understanding research involving long texts.

Developers can implement real-time English-Korean translation features on mobile devices using this model.

Businesses can use this model in automated customer-service response systems to improve efficiency and accuracy.

What are the key features of EXAONE-3.5-24B-Instruct-GGUF?

Supports long context processing of up to 32K tokens.

The EXAONE 3.5 series is available in three sizes: 2.4 billion, 7.8 billion, and 32 billion parameters, covering different deployment needs.

Reported to deliver state-of-the-art performance in real-world use cases and long-context understanding.

Supports bilingual (English and Korean) text generation.

Optimized for better instruction understanding and execution.

Offers multiple quantized versions to fit different computational and storage requirements.

Can be deployed with various frameworks, including TensorRT-LLM, vLLM, and SGLang; the GGUF files are intended for llama.cpp.
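To make the trade-off between the quantized versions more concrete, the following is a rough sketch that estimates weight storage from parameter count and bits per weight. The quantization names (BF16, Q8_0, Q4_0) are common llama.cpp formats used here for illustration; they are an assumption, not a confirmed list of the files published for this model. Actual file sizes will differ somewhat, because some tensors are stored at higher precision and the estimate ignores metadata and runtime memory such as the KV cache.

```python
# Rough estimate: bytes ~= parameters * bits_per_weight / 8.
# Assumption: the formats below are illustrative llama.cpp quantization types,
# not a confirmed list of the files available for this model.
BITS_PER_WEIGHT = {
    "BF16": 16.0,  # 16-bit brain floating point
    "Q8_0": 8.5,   # 32 weights * 8 bits + one 16-bit scale per block
    "Q4_0": 4.5,   # 32 weights * 4 bits + one 16-bit scale per block
}

# Parameter counts of the three model sizes named in this document.
PARAM_COUNTS = {"2.4B": 2.4e9, "7.8B": 7.8e9, "32B": 32e9}


def estimate_gb(num_params: float, bits_per_weight: float) -> float:
    """Return the approximate weight storage in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


for size_name, num_params in PARAM_COUNTS.items():
    for quant_name, bits in BITS_PER_WEIGHT.items():
        print(f"{size_name} {quant_name}: ~{estimate_gb(num_params, bits):.1f} GB")
```

A lower bits-per-weight format reduces storage and memory roughly in proportion, usually at some cost in output quality, so the choice depends on the target device.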

How do you use EXAONE-3.5-24B-Instruct-GGUF?

Install llama.cpp by following the installation guide in the llama.cpp GitHub repository.

Select a quantized version of the model that fits your memory and compute budget.

Use the huggingface-cli tool to download the corresponding GGUF file of the EXAONE 3.5 model to a local directory.

Run the model with the llama-cli tool and set a system prompt such as 'You are the EXAONE model from LG AI Research, a helpful assistant.'

For practical applications, the model can also be deployed with supported frameworks such as TensorRT-LLM or vLLM.

Monitor the generated text to ensure compliance with LG AI’s ethical guidelines.

For further optimization and performance tuning, refer to the technical report, blog, and instructions available on GitHub.
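The download and run steps above can be sketched as a small Python helper that assembles the two command lines. This is a minimal sketch under stated assumptions: the repository id and file pattern are placeholders to be replaced with the values from the model's Hugging Face page, and the llama-cli flags (`-m`, `-cnv`, `-p`) reflect commonly used options that may differ between llama.cpp versions.

```python
import shlex

# Assumption: placeholder repository id and file pattern; replace them with
# the values shown on the model's Hugging Face page.
REPO_ID = "LGAI-EXAONE/EXAONE-3.5-24B-Instruct-GGUF"
FILE_PATTERN = "*Q8_0*.gguf"

# System prompt quoted in the steps above.
SYSTEM_PROMPT = "You are the EXAONE model from LG AI Research, a helpful assistant."


def download_command(repo_id: str, pattern: str, local_dir: str = ".") -> list[str]:
    """Build a huggingface-cli command that fetches matching files to local_dir."""
    return [
        "huggingface-cli", "download", repo_id,
        "--include", pattern,
        "--local-dir", local_dir,
    ]


def chat_command(model_path: str, system_prompt: str) -> list[str]:
    """Build a llama-cli command that starts a conversation with a system prompt."""
    # Assumption: -cnv enables conversation mode and -p supplies the prompt;
    # check `llama-cli --help` for the flags of your installed version.
    return ["llama-cli", "-m", model_path, "-cnv", "-p", system_prompt]


# Print the commands instead of running them, so they can be reviewed first.
print(shlex.join(download_command(REPO_ID, FILE_PATTERN, "models")))
print(shlex.join(chat_command("models/model.gguf", SYSTEM_PROMPT)))
```

Once the printed commands look right, each list can be passed to `subprocess.run(cmd, check=True)` to execute it, provided both tools are installed and on the PATH.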