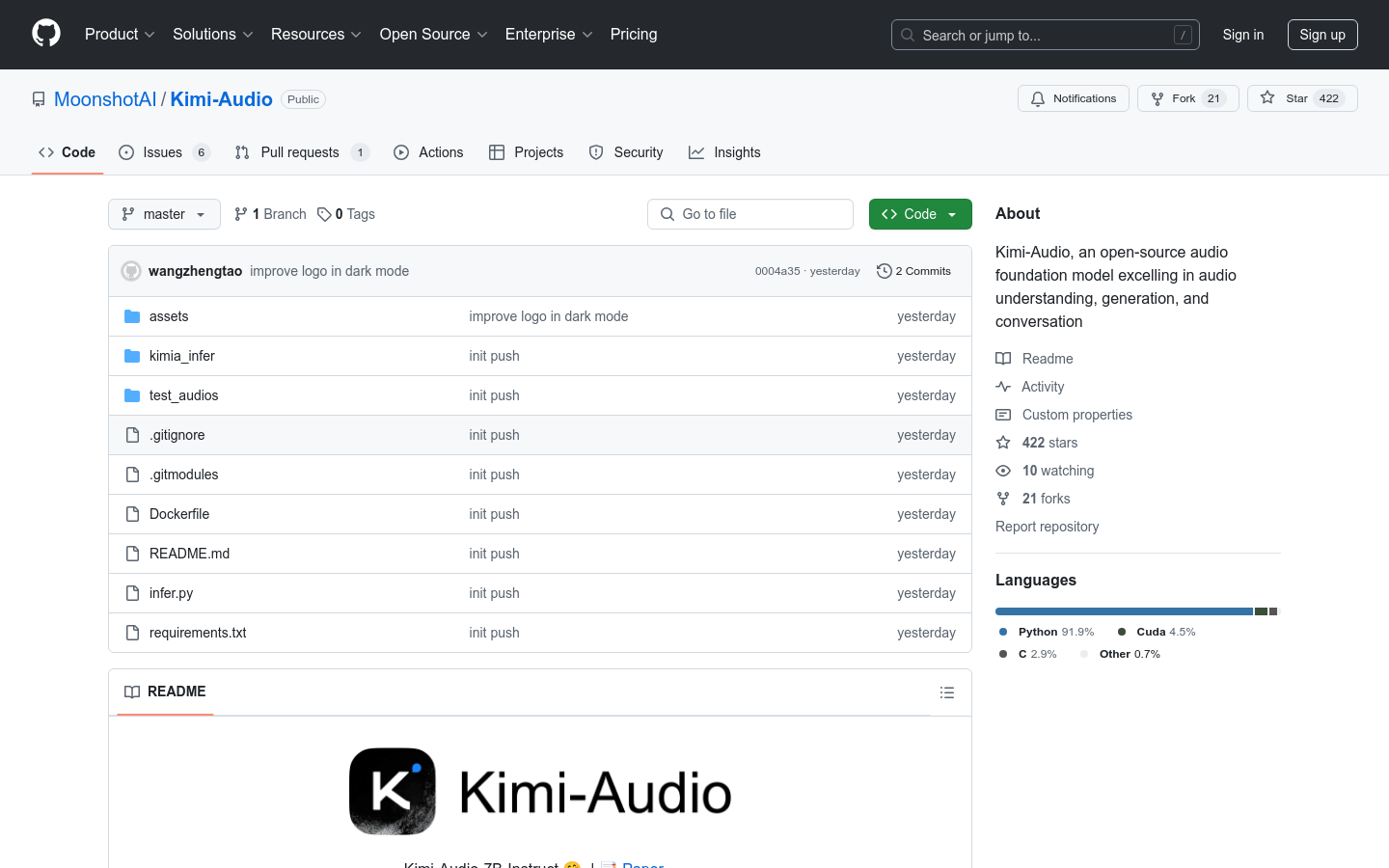

Kimi-Audio is an open-source audio foundation model designed to handle a range of audio processing tasks, such as speech recognition and audio conversation. The model is pre-trained on more than 13 million hours of diverse audio and text data, which gives it strong audio reasoning and language comprehension. Its main advantages are strong benchmark performance and flexibility, making it suitable for researchers and developers working on audio-related research and development.

Target audience:

Kimi-Audio is suitable for researchers, audio engineers, and developers who need a powerful and flexible audio processing tool that supports a variety of audio analysis and generation tasks. Because the model is open source, users can customize and extend it to fit their needs, in both scientific research and commercial applications.

Example usage scenarios:

Integrate Kimi-Audio into a voice assistant to improve its understanding of users' voice commands.

Use Kimi-Audio to automatically transcribe audio content and provide subtitles for podcasts and videos.

Use Kimi-Audio for audio-based emotion recognition to enhance the user interaction experience.

Product Features:

Various audio processing capabilities: supports speech recognition, audio question answering, audio captioning, and other tasks.

Excellent performance: achieves state-of-the-art (SOTA) results on multiple audio benchmarks.

Large-scale pre-training: trained on multiple types of audio and text data to strengthen the model's understanding.

Innovative architecture: uses hybrid audio input and an LLM core, so it can process text and audio input simultaneously.

Efficient inference: uses a chunk-wise streaming detokenizer based on flow matching, which supports low-latency audio generation.

Open-source community support: provides code, model checkpoints, and a comprehensive evaluation toolkit to promote community research and development.

User-friendly interface: simplifies use of the model and makes it easier for users to get started.

Flexible parameter settings: lets users adjust the generation parameters for audio and text according to their needs.
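To make the last feature more concrete, the sketch below illustrates what two common generation parameters, temperature and top-k, typically control when a model picks its next token. It is a generic, plain-Python illustration and not Kimi-Audio's own sampling code; the function name `top_k_temperature_sample` and its arguments are hypothetical and chosen only for this example.

```python
import math
import random


def top_k_temperature_sample(logits, temperature=1.0, top_k=5, rng=None):
    """Pick one index from `logits` using top-k filtering and temperature scaling.

    Illustrative only: this is a generic sampler, not Kimi-Audio's implementation.
    """
    rng = rng or random.Random()

    # A temperature of 0 is treated as greedy decoding: always take the best score.
    if temperature <= 0.0:
        return max(range(len(logits)), key=lambda i: logits[i])

    # Keep only the k highest-scoring candidates.
    k = max(1, min(top_k, len(logits)))
    candidates = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]

    # Lower temperatures sharpen the distribution; higher ones flatten it.
    scaled = [logits[i] / temperature for i in candidates]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # numerically stable softmax weights

    return rng.choices(candidates, weights=weights, k=1)[0]
```

In practice, a low temperature is a common choice for text output such as transcripts, where deterministic results are preferred, while a higher temperature and a larger top-k tend to give more varied audio output.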

How to use:

1. Download the Kimi-Audio model and code from the GitHub page.

2. Install the required dependencies and make sure the environment is set up correctly.

3. Load the model and set the sampling parameters.

4. Prepare the audio input or conversation messages.

5. Call the model's generation interface, passing in the prepared messages and parameters.

6. Process the model output to obtain text or audio results.

7. Adjust the parameters as needed to optimize model performance.
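The steps above can be sketched in Python roughly as follows. This is a minimal sketch, not a definitive implementation: the import path `kimia_infer.api.kimia`, the `KimiAudio` class, the checkpoint name `moonshotai/Kimi-Audio-7B-Instruct`, the message fields, and the sampling parameter names are assumptions recalled from the project's published example, so check them against the repository version you download. The helper functions `default_sampling_params`, `build_asr_messages`, and `transcribe` are hypothetical names introduced for this illustration.

```python
import sys


def default_sampling_params():
    """Step 3: sampling parameters (names and values are assumptions, see lead-in)."""
    return {
        "audio_temperature": 0.8,
        "audio_top_k": 10,
        "text_temperature": 0.0,  # deterministic text output, e.g. for transcription
        "text_top_k": 5,
    }


def build_asr_messages(audio_path, instruction="Please transcribe the following audio:"):
    """Step 4: a text instruction followed by the audio file to process."""
    return [
        {"role": "user", "message_type": "text", "content": instruction},
        {"role": "user", "message_type": "audio", "content": audio_path},
    ]


def transcribe(audio_path, model_path="moonshotai/Kimi-Audio-7B-Instruct"):
    """Steps 3, 5, and 6: load the model, generate, and return the text result."""
    # Imported lazily so the helpers above work without the package installed.
    from kimia_infer.api.kimia import KimiAudio  # assumed import path

    model = KimiAudio(model_path=model_path, load_detokenizer=True)
    # Assumed to return an (audio, text) pair; only text is requested here.
    _, text = model.generate(
        build_asr_messages(audio_path),
        **default_sampling_params(),
        output_type="text",
    )
    return text


if __name__ == "__main__" and len(sys.argv) > 1:
    print(transcribe(sys.argv[1]))
```

Saved as a script, this could be run with an audio file path as its only argument. For step 7, changing the values returned by `default_sampling_params` is the natural place to experiment.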