nanochat is a full-stack implementation of a ChatGPT-style large language model that aims to give users a customizable chat experience at low cost. The project is designed to run on a single 8XH100 node and covers training and inference from start to finish for a total cost of about $100. It is easy to deploy and keeps the code deliberately simple, so the model is easier to use and understand.

Target audience:

nanochat is suitable for developers and researchers interested in artificial intelligence and natural language processing, especially those who want to explore large language models on a limited budget. Its simplicity and customizability make it well suited to learning and experimentation.

Example use cases:

Educational institutions can use nanochat to build educational aids and improve the learning experience.

Small startups can use nanochat to build customer service chatbots and reduce labor costs.

Developers can use nanochat for research and experimentation, exploring the potential and applications of language models.

Product features:

Supports full-stack training: covers the complete pipeline from tokenization and pretraining through finetuning, evaluation, and inference.

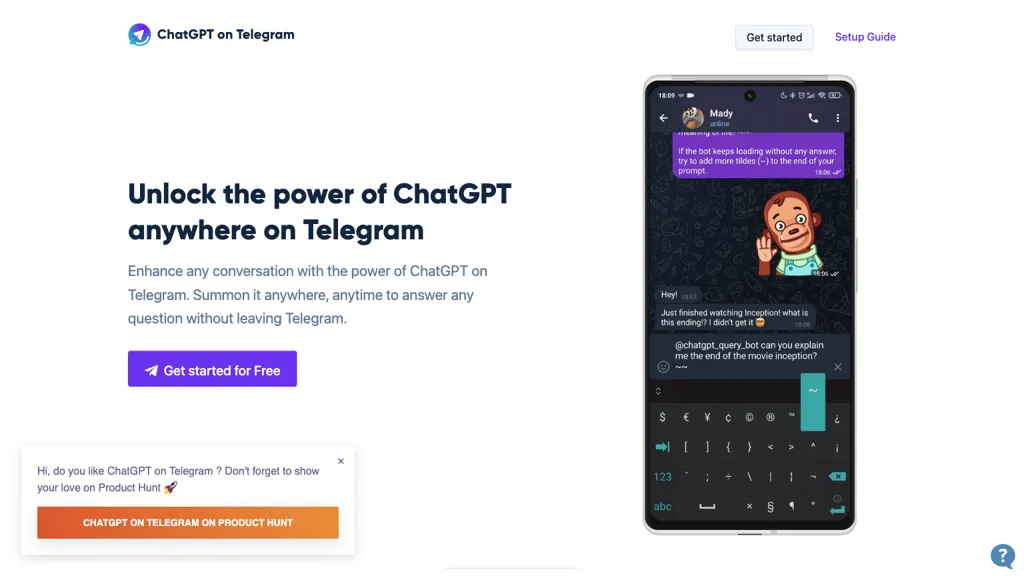

Provides a simple user interface: users can interact with their models just like chatting with ChatGPT.

Low cost: a fully functional LLM can be trained end to end for about $100.

Quick start: Training can be completed in about 4 hours using the speedrun.sh script.

Scalability: Supports training of larger models to improve performance.

Easy to modify and customize: the code structure is simple, so developers can readily adapt and extend it.

Reporting capabilities: generates a detailed report for each run, including performance evaluations and metrics.

Supports multiple computing environments: compatible with multiple GPU platforms, so it is not tied to a single hardware setup.
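The cost and time figures quoted above (about $100 in total, about 4 hours of training, one 8XH100 node) imply an hourly rental rate. The short sketch below only works through that arithmetic. The function name `implied_rates` is hypothetical, and the result is a back-of-the-envelope figure derived from the numbers in this description, not a quoted price from any cloud provider.

```python
# Figures taken from the description above; everything else is derived.
GPUS_PER_NODE = 8        # one 8XH100 node
TOTAL_COST_USD = 100.0   # "about $100"
TRAINING_HOURS = 4.0     # "about 4 hours" with speedrun.sh


def implied_rates(total_cost: float, hours: float, gpus: int) -> tuple[float, float]:
    """Return (cost per node-hour, cost per GPU-hour) implied by a total budget."""
    node_rate = total_cost / hours
    gpu_rate = node_rate / gpus
    return node_rate, gpu_rate


node_rate, gpu_rate = implied_rates(TOTAL_COST_USD, TRAINING_HOURS, GPUS_PER_NODE)
print(f"node: ${node_rate:.2f}/hour, per GPU: ${gpu_rate:.3f}/hour")
# prints: node: $25.00/hour, per GPU: $3.125/hour
```

If the node you rent costs noticeably more than about $25 per hour, the same run will cost correspondingly more than $100.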

Usage tutorial:

Prepare an 8XH100 GPU node and make sure all necessary dependencies are installed.

Clone the nanochat repository to your local machine.

Activate the Python virtual environment and confirm that the required packages are installed and import correctly.

Run the speedrun.sh script to start the training process.

After training is complete, use the provided command to launch the chat interface.

Visit the provided URL to interact with the trained model.

Adjust hyperparameters as needed to improve model performance.
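The first three steps above (GPU node, local clone, active virtual environment) can be checked before launching a multi-hour run. The following is a minimal preflight sketch, not part of nanochat itself. The helper names `count_h100`, `in_virtualenv`, and `preflight` are hypothetical, and the sketch assumes that the clone lives in a directory called `nanochat` with `speedrun.sh` at its top level. It relies on `nvidia-smi -L`, which lists one line per visible GPU.

```python
import shutil
import subprocess
import sys
from pathlib import Path

EXPECTED_GPUS = 8  # the 8XH100 node named in the tutorial


def count_h100(listing: str) -> int:
    """Count the lines of `nvidia-smi -L` output that describe an H100 GPU."""
    return sum(
        1
        for line in listing.splitlines()
        if line.startswith("GPU ") and "H100" in line
    )


def in_virtualenv() -> bool:
    """True when the running interpreter belongs to a virtual environment."""
    return sys.prefix != sys.base_prefix


def preflight(repo_dir: str = "nanochat") -> list[str]:
    """Return human-readable problems; an empty list means the checks passed."""
    problems = []

    # Step 1: an 8XH100 node should expose eight H100 devices.
    if shutil.which("nvidia-smi") is None:
        problems.append("nvidia-smi not found; is the NVIDIA driver installed?")
    else:
        result = subprocess.run(
            ["nvidia-smi", "-L"], capture_output=True, text=True, check=False
        )
        found = count_h100(result.stdout)
        if found != EXPECTED_GPUS:
            problems.append(f"expected {EXPECTED_GPUS} H100 GPUs, found {found}")

    # Step 2: the clone should contain the training script (assumed location).
    if not (Path(repo_dir) / "speedrun.sh").is_file():
        problems.append(f"speedrun.sh not found in {repo_dir}/")

    # Step 3: a virtual environment should be active.
    if not in_virtualenv():
        problems.append("no Python virtual environment is active")

    return problems
```

Calling `preflight()` from the directory that contains the clone returns an empty list when all three checks pass. Otherwise each entry names the step that still needs attention before running speedrun.sh.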