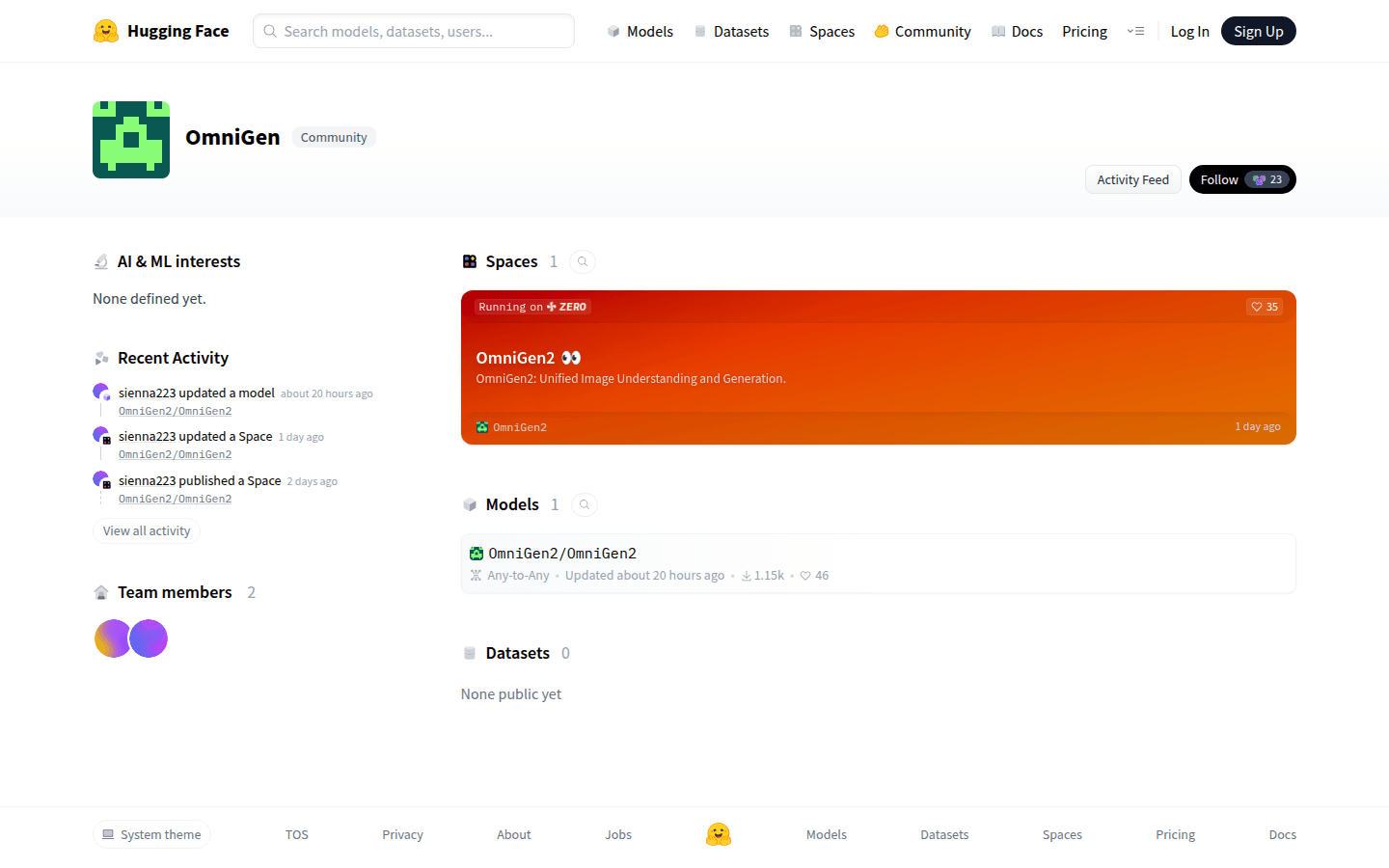

OmniGen2 is an efficient multimodal generation model that combines a vision-language model with a diffusion model to support visual understanding, image generation, and image editing. Because it is open source, it gives researchers and developers a solid foundation for exploring personalized and controllable AI generation.

Target audience:

OmniGen2 is suited to researchers, developers, and designers who need efficient tools for generating and editing images, with support for personalized customization and innovative design.

Example usage scenarios:

Generate images from a user-provided text description.

Modify existing images with instructions to meet the needs of design work.

Combine multiple inputs to generate rich visual content for promotional or educational materials.

Product Features:

Visual understanding: strong ability to analyze image content.

Text-to-image generation: generates high-quality images from text prompts.

Instruction-guided image editing: performs complex image modifications with high precision.

In-context generation: processes and combines different inputs to produce novel visual outputs.

Supports multiple input formats and can be applied flexibly to different scenarios.

Provides a user-friendly interface and an online demo platform.

Open-source code and datasets to facilitate research and development.

Usage tutorial:

Clone the code base: git clone git@github.com:VectorSpaceLab/OmniGen2

Create and activate a Python environment: conda create -n OmniGen2 python=3.11, then conda activate OmniGen2

Install PyTorch and the other dependencies: pip install torch==2.6.0 torchvision, then pip install -r requirements.txt

Run an example: bash example_t2i.sh performs text-to-image generation.

Visit the online demo or run the local application to generate and edit images.
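
The steps above can be collected into a single script. What follows is a minimal sketch, not an official installer. The `run` helper and the `DRY_RUN` switch are hypothetical names introduced for this example, the clone URL assumes the repository is hosted on GitHub under VectorSpaceLab/OmniGen2, and the `cd OmniGen2` step assumes that `requirements.txt` and `example_t2i.sh` sit at the repository root. By default the script only prints each command, so the plan can be reviewed before anything is executed.

```bash
#!/usr/bin/env bash
# Sketch: the setup steps from the tutorial, collected into one script.
# DRY_RUN and run() are hypothetical helpers for this example, not part of OmniGen2.
set -e

DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only (default), 0 = execute them

# Print a command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

# In a non-interactive script, `conda activate` needs conda's shell hook loaded first.
if [ "$DRY_RUN" = "0" ]; then
  eval "$(conda shell.bash hook)"
fi

# 1. Clone the code base (URL is an assumption: GitHub, VectorSpaceLab/OmniGen2).
run git clone git@github.com:VectorSpaceLab/OmniGen2
run cd OmniGen2   # assumed: the files used below live at the repository root

# 2. Create and activate the Python environment.
run conda create -n OmniGen2 python=3.11
run conda activate OmniGen2

# 3. Install PyTorch and the remaining dependencies.
run pip install torch==2.6.0 torchvision
run pip install -r requirements.txt

# 4. Run the text-to-image example.
run bash example_t2i.sh
```

Saved under a file name of your choice, for example setup_omnigen2.sh, running `bash setup_omnigen2.sh` prints the planned commands, and `DRY_RUN=0 bash setup_omnigen2.sh` executes them.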