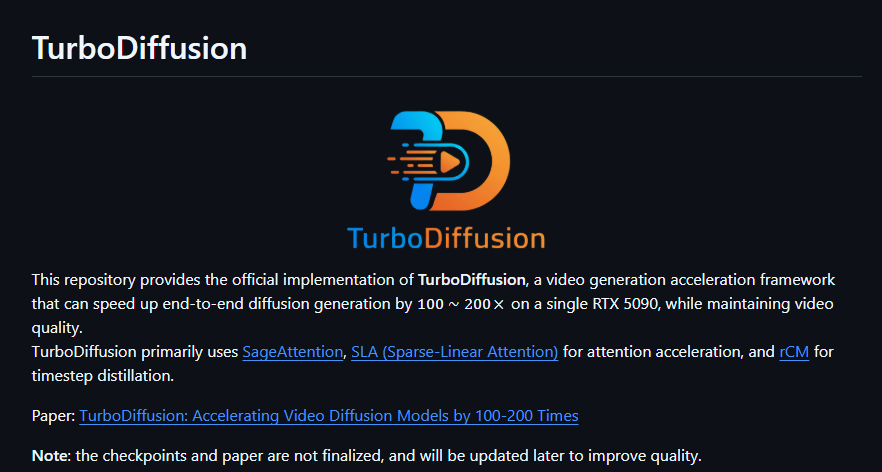

TurboDiffusion is a video generation acceleration framework that speeds up end-to-end diffusion video generation by 100 to 200 times on a single RTX 5090 while maintaining video quality. Its main techniques are SageAttention, Sparse-Linear Attention (SLA), and timestep distillation with rCM. It suits applications that need near-real-time video generation, and research and development teams in particular.
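SageAttention, as described in its original paper, speeds up attention in two ways. It quantizes Q and K to INT8 for the QKᵀ product after "smoothing" K by subtracting its mean over tokens, and it computes P·V in higher precision. The NumPy sketch below illustrates that idea on the CPU under simplifying assumptions. It uses one scale per tensor instead of the per-block scales and fused GPU kernels of the real implementation, and the function names are hypothetical, not the SageAttention or TurboDiffusion API.

```python
import numpy as np


def quantize_int8(x: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor INT8 quantization: x is approximately q * scale."""
    scale = float(np.max(np.abs(x))) / 127.0
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any scale reproduces it exactly
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def softmax(s: np.ndarray) -> np.ndarray:
    s = s - s.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(s)
    return e / e.sum(axis=-1, keepdims=True)


def reference_attention(q, k, v):
    """Plain float32 scaled dot-product attention for comparison."""
    d = q.shape[-1]
    return softmax(q @ k.T / np.sqrt(d)) @ v


def int8_qk_attention(q, k, v):
    """Attention with an INT8 QK^T product, in the spirit of SageAttention."""
    d = q.shape[-1]
    # Smooth K: subtracting the per-channel mean over tokens shifts each row of
    # QK^T by a constant (softmax ignores it) but removes K's shared offset,
    # so the INT8 grid covers the remaining values more finely.
    k_smooth = k - k.mean(axis=0, keepdims=True)
    q_i8, sq = quantize_int8(q)
    k_i8, sk = quantize_int8(k_smooth)
    # Integer matmul with int32 accumulation, then dequantize with both scales.
    s = (q_i8.astype(np.int32) @ k_i8.astype(np.int32).T).astype(np.float32) * (sq * sk)
    p = softmax(s / np.sqrt(d))
    # P @ V stays in half precision in this sketch.
    return (p.astype(np.float16) @ v.astype(np.float16)).astype(np.float32)


rng = np.random.default_rng(0)
q = rng.standard_normal((64, 32)).astype(np.float32)
k = rng.standard_normal((64, 32)).astype(np.float32) + 3.0  # offset that smoothing removes
v = rng.standard_normal((64, 32)).astype(np.float32)
err = np.abs(int8_qk_attention(q, k, v) - reference_attention(q, k, v)).max()
print(f"max abs error vs. float32 attention: {err:.4f}")
```

Smoothing matters because a large shared offset in K would otherwise dominate the INT8 range and leave few quantization levels for the values that actually differ between tokens.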

Target audience:

TurboDiffusion suits researchers, developers, and creative professionals who want faster video generation without sacrificing quality. Its technical design and efficiency make it a valuable tool for video generation, especially in applications that demand both high output quality and fast turnaround.

Example usage scenarios:

Use TurboDiffusion to generate a 5-second stylized urban street video.

Generate a dynamic video of a cat from an input image, showing its lively movements.

Quickly generate high-quality advertising video clips for marketing use.

Product features:

Attention acceleration based on SageAttention

Supports 480p and 720p video generation

Supports image-to-video (I2V) and text-to-video (T2V) generation

Fast inference that significantly improves generation efficiency

Flexible parameter settings to support diverse generation needs

Integrates modern deep learning models to deliver high-quality video output

Supports quantized checkpoints to reduce memory usage
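The source does not say which quantization scheme TurboDiffusion's checkpoints use. As a generic illustration of why quantized checkpoints save memory, the sketch below applies symmetric per-output-channel INT8 weight quantization (an assumed scheme, not necessarily TurboDiffusion's) to one weight matrix and compares its size with a bf16 copy. The helper names are hypothetical.

```python
import numpy as np


def quantize_per_channel(w: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Symmetric INT8 quantization with one float32 scale per output row."""
    max_abs = np.abs(w).max(axis=1, keepdims=True)
    # Rows that are all zeros get scale 1.0 so the division below is safe.
    scale = np.where(max_abs > 0, max_abs / 127.0, 1.0).astype(np.float32)
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: np.ndarray) -> np.ndarray:
    """Recover approximate float32 weights from INT8 values and row scales."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.standard_normal((1024, 1024)).astype(np.float32)
q, scale = quantize_per_channel(w)

bf16_bytes = w.size * 2               # 2 bytes per weight in a bf16 checkpoint
int8_bytes = q.nbytes + scale.nbytes  # 1 byte per weight plus one float32 scale per row
print(f"bf16: {bf16_bytes / 2**20:.2f} MiB, int8: {int8_bytes / 2**20:.2f} MiB")
print(f"max reconstruction error: {np.abs(dequantize(q, scale) - w).max():.4f}")
```

For this 1024×1024 matrix the INT8 copy takes about 1.00 MiB versus 2.00 MiB in bf16. Each reconstructed weight is off by at most half its row's scale, which is why per-row (rather than per-tensor) scales are a common choice.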

Usage tutorial:

Set up the environment and make sure the installed Python and PyTorch versions meet the project's requirements.

Install TurboDiffusion via pip, or compile it from source.

Download the required model checkpoints, along with the VAE and text encoder.

Use an inference script to specify the model, generation parameters, and output path.

Execute the inference command to generate the video and save it to the specified path.
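The first step of this tutorial can be automated with a small pre-flight check. This is a hypothetical helper, not part of TurboDiffusion. It uses only the standard library, so it runs even before PyTorch is installed, and the minimum versions passed to it are placeholders to be replaced with the ones the project's README specifies.

```python
import importlib.metadata
import re
import sys


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '2.7.1+cu128' into (2, 7, 1); suffixes like '+cu128' or '.dev' are ignored."""
    match = re.match(r"(\d+(?:\.\d+)*)", text)
    if match is None:
        raise ValueError(f"unparseable version: {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def check_environment(min_python: tuple[int, ...], min_torch: tuple[int, ...]) -> list[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if sys.version_info[: len(min_python)] < min_python:
        problems.append(f"Python {sys.version.split()[0]} is older than {min_python}")
    try:
        # Read the installed distribution's metadata instead of importing torch.
        torch_version = importlib.metadata.version("torch")
    except importlib.metadata.PackageNotFoundError:
        problems.append("PyTorch is not installed")
    else:
        if parse_version(torch_version)[: len(min_torch)] < min_torch:
            problems.append(f"PyTorch {torch_version} is older than {min_torch}")
    return problems


# PLACEHOLDER minimums for illustration only; use the versions the README lists.
for problem in check_environment(min_python=(3, 9), min_torch=(2, 0)):
    print("environment check:", problem)
```

Comparing version tuples only up to the length of the required minimum keeps a requirement such as `(2, 0)` from failing on a patch release like `2.0.1`.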