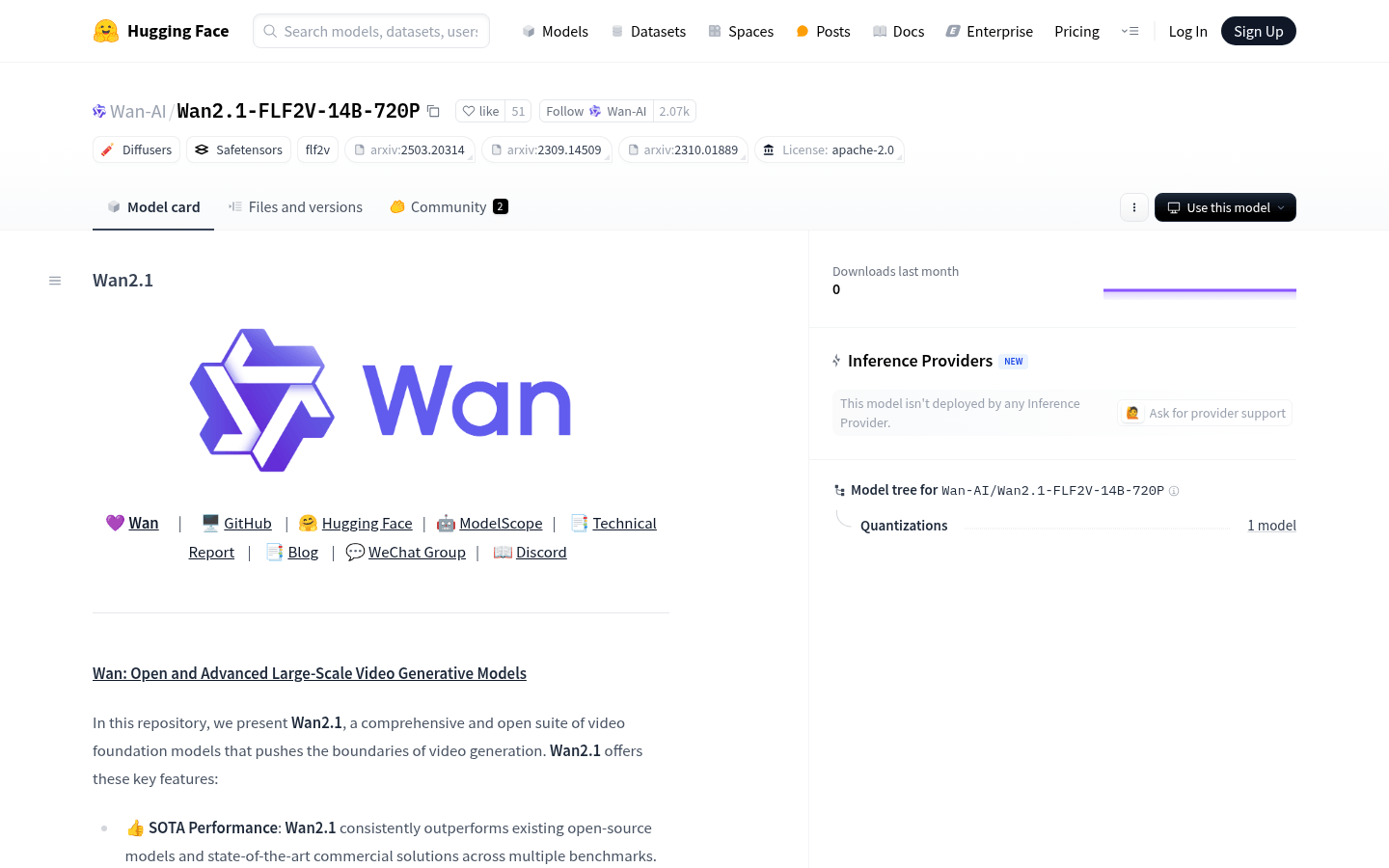

Wan2.1-FLF2V-14B is an open-source, large-scale video generation model intended to advance the field of video generation. It scores well on multiple benchmarks, runs on consumer-grade GPUs, and generates 480P and 720P video efficiently. It handles tasks such as text-to-video and image-to-video, has strong visual text generation capabilities, and suits a range of practical application scenarios.

Target audience:

This product is suitable for video creators, developers, and researchers, especially those who need to generate high-quality video content. Its features and compatibility make it applicable in industries such as education, entertainment, and advertising.

Example usage scenarios:

Use Wan2.1 to generate short videos for social media content creation.

Convert images into videos for advertising and marketing video production.

Build new applications that use video generation to enhance the user experience.

Product Features:

Outperforms existing models, delivering state-of-the-art (SOTA) performance.

Runs on consumer-grade GPUs, with good compatibility.

Handles a variety of tasks, such as text-to-video and image-to-video.

Generates both Chinese and English text, which makes it more flexible in practical applications.

Encodes and decodes efficiently through Wan-VAE while preserving temporal information.

Integrates with a variety of tools and platforms for ease of use.

How to use:

Clone the repository: git clone https://github.com/Wan-Video/Wan2.1.git

Install dependencies: pip install -r requirements.txt

Download model weights: Use huggingface-cli or modelscope-cli to download the model.

Run text-to-video generation: Run the generation command, specifying the parameters and a prompt.

Adjust model parameters and generation options as needed to optimize video quality.
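
The steps above can be sketched as a small Python helper that assembles each command and prints it without executing anything. This is a hedged illustration, not the project's official workflow: the script name generate.py, the flags --task, --size, --ckpt_dir and --prompt, the model ID Wan-AI/Wan2.1-T2V-14B, and the size value 1280*720 are assumptions based on the Wan2.1 repository's README and are not stated on this page, so check them against the repository before running. The helper build_generate_command and its extra argument are hypothetical names introduced only for this example.

```python
import shlex


def build_generate_command(task, size, ckpt_dir, prompt, extra=None):
    """Return the argument list for a generation run; nothing is executed.

    The flag names are assumptions taken from the Wan2.1 README and should
    be verified against the repository's own help output.
    """
    cmd = [
        "python", "generate.py",
        "--task", task,
        "--size", size,
        "--ckpt_dir", ckpt_dir,
        "--prompt", prompt,
    ]
    # Optional generation settings, passed through as "--name value" pairs.
    for name, value in (extra or {}).items():
        cmd += [f"--{name}", str(value)]
    return cmd


# Assumed model ID and local directory; adjust to the weights you download.
MODEL_ID = "Wan-AI/Wan2.1-T2V-14B"
CKPT_DIR = "./Wan2.1-T2V-14B"

steps = [
    ["git", "clone", "https://github.com/Wan-Video/Wan2.1.git"],
    # Run this one from inside the cloned Wan2.1 directory.
    ["pip", "install", "-r", "requirements.txt"],
    ["huggingface-cli", "download", MODEL_ID, "--local-dir", CKPT_DIR],
    build_generate_command(
        task="t2v-14B",
        size="1280*720",
        ckpt_dir=CKPT_DIR,
        prompt="A cat walking through a garden at sunrise",
    ),
]

# shlex.join quotes each argument so the printed line is safe to paste into a shell.
for step in steps:
    print(shlex.join(step))
```

Printing the commands first makes it easy to review them, and to correct any flag that differs in your checkout, before running them by hand or passing them to subprocess.run.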