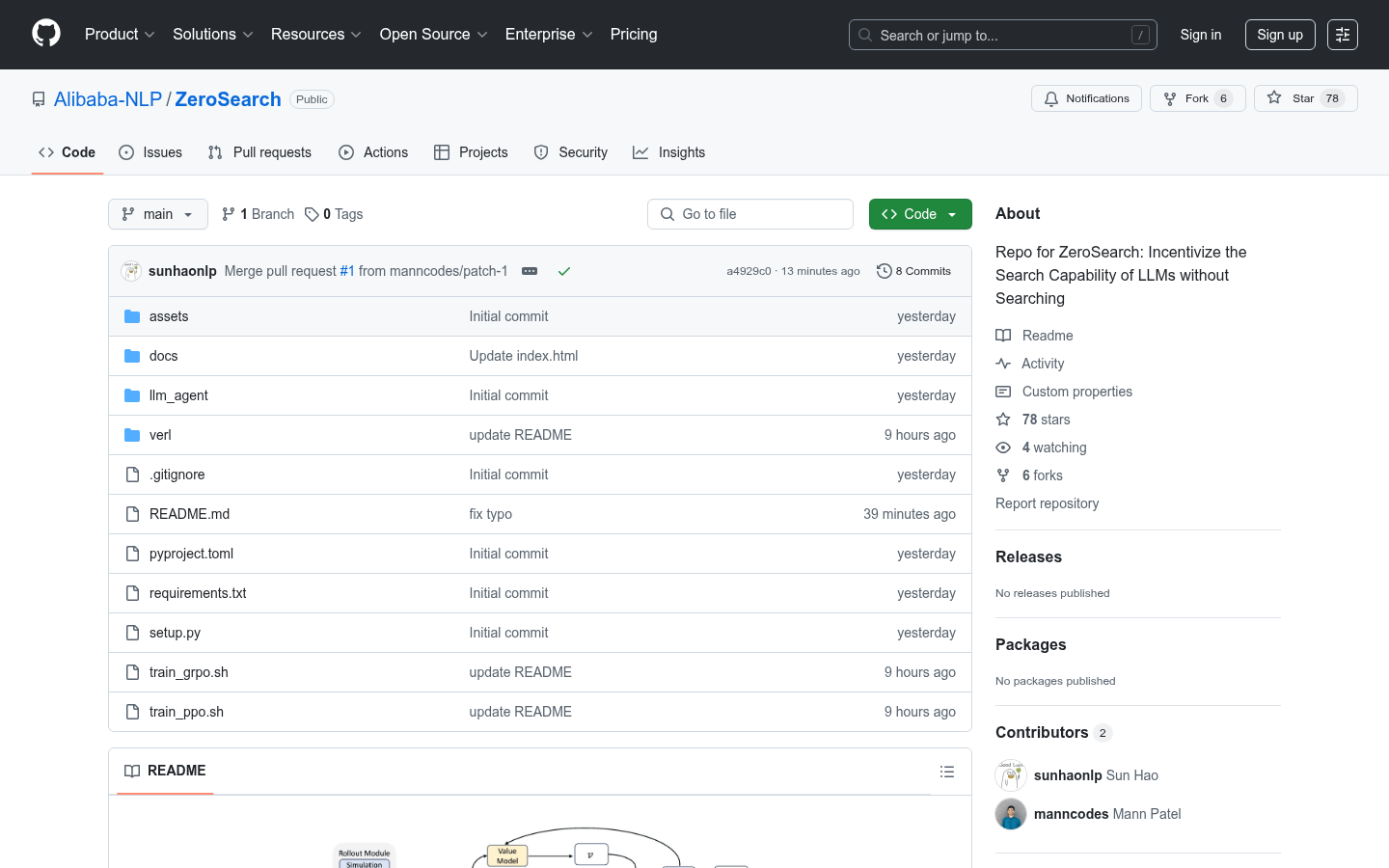

ZeroSearch is a novel reinforcement learning framework designed to incentivize the search capabilities of large language models (LLMs) without interacting with real search engines. Through supervised fine-tuning, ZeroSearch turns an LLM into a retrieval module that can generate both relevant and irrelevant documents for a query, and it introduces a curriculum rollout mechanism that progressively strengthens the model's reasoning ability. Its main advantage is that its performance exceeds that of models trained with real search engines while incurring zero search API costs. It works with LLMs of various sizes and supports different reinforcement learning algorithms, making it suitable for research and development teams that need efficient retrieval capabilities.
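The curriculum idea can be illustrated as a schedule that raises the probability of serving irrelevant documents as training progresses, so the policy first learns with mostly relevant results and later has to reason through noisier ones. This is a minimal sketch under stated assumptions: the exponential schedule, its defaults (`p_start=0.25`, `p_end=0.5`, `base=4.0`), the prompt wording, and the helper names `noise_probability`, `pick_document_mode`, and `build_simulation_prompt` are all hypothetical illustrations, not ZeroSearch's published implementation.

```python
import random


def noise_probability(step: int, total_steps: int,
                      p_start: float = 0.25, p_end: float = 0.5,
                      base: float = 4.0) -> float:
    """Hypothetical curriculum schedule: probability that the simulated
    search module is asked for irrelevant documents at a training step.

    Rises from p_start (step 0) to p_end (step == total_steps), slowly at
    first and faster later, because progress is passed through an exponential.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if base <= 1.0:
        raise ValueError("base must be greater than 1")
    frac = min(max(step / total_steps, 0.0), 1.0)  # training progress in [0, 1]
    ramp = (base ** frac - 1.0) / (base - 1.0)     # 0 at the start, 1 at the end
    return p_start + ramp * (p_end - p_start)


def pick_document_mode(step: int, total_steps: int, rng: random.Random) -> str:
    """Sample whether this query gets relevant or irrelevant documents."""
    p_noise = noise_probability(step, total_steps)
    return "irrelevant" if rng.random() < p_noise else "relevant"


def build_simulation_prompt(query: str, mode: str, num_docs: int = 5) -> str:
    """Hypothetical prompt for the fine-tuned simulation LLM; the wording is
    illustrative only, not ZeroSearch's actual template."""
    if mode not in ("relevant", "irrelevant"):
        raise ValueError(f"unknown mode: {mode!r}")
    return (
        f"Act as a search engine. Write {num_docs} short {mode} documents "
        f"for the query below, one per line.\n"
        f"Query: {query}\n"
        f"Documents:"
    )


if __name__ == "__main__":
    rng = random.Random(0)
    total = 200
    for step in (0, 100, 200):
        mode = pick_document_mode(step, total, rng)
        print(f"step {step:3d}: p_noise={noise_probability(step, total):.3f} mode={mode}")
    print(build_simulation_prompt("What is reinforcement learning?", "relevant", 2))
```

With these defaults the probability starts at 0.25 and ends at 0.5; a larger `base` keeps it near the starting value for longer before it ramps up.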

Target audience:

"This product is especially suitable for researchers and developers who need an efficient search solution to improve the performance of large language models. When budgets are limited, ZeroSearch provides a viable alternative to paid search APIs."

Example usage scenarios:

In the field of education, ZeroSearch helps teachers and students quickly retrieve relevant academic literature.

In a business environment, companies can use ZeroSearch to conduct market research to obtain relevant data without incurring high search fees.

In software development, teams can use ZeroSearch to retrieve code and documentation more efficiently.

Product Features:

Optimize search capabilities through reinforcement learning

Use supervised fine-tuning to improve model performance

No real search engine interaction required, reducing costs

Adapt to LLMs of various sizes

Curriculum rollout mechanism to improve the model's reasoning capabilities

Versatile across a wide range of application scenarios

How to use:

Create a Conda environment and install the dependencies.

Download the training dataset and the simulation LLM.

Start the local simulation server.

Set up the Google Search API key.

Run the training script to start reinforcement learning training.
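Conceptually, a local simulation server can stand in for a real search engine during training: the RL rollout sends a query and receives generated documents instead of calling a paid search API. The sketch below illustrates that role using only the Python standard library. Everything in it is a hypothetical assumption (the JSON fields `query`, `mode`, `k`, the `documents` response key, and the helpers `start_server` and `search`), and `fake_generate` is a placeholder where a real setup would call the downloaded simulation LLM; it does not reproduce ZeroSearch's actual server or API.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.request import Request, urlopen


def fake_generate(query: str, mode: str, k: int) -> list[str]:
    """Placeholder for the simulation LLM (hypothetical). A real setup would
    query the fine-tuned model here; this stub returns dummy text."""
    return [f"[{mode} document {i + 1} for: {query}]" for i in range(k)]


class SimulatedSearchHandler(BaseHTTPRequestHandler):
    """Hypothetical endpoint: POST {"query", "mode", "k"} -> {"documents"}."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            body = json.loads(self.rfile.read(length) or b"{}")
            query = str(body["query"])
            mode = str(body.get("mode", "relevant"))
            k = int(body.get("k", 3))
        except (ValueError, KeyError, TypeError, AttributeError):
            self.send_error(400, "expected a JSON object with a 'query' field")
            return
        payload = json.dumps({"documents": fake_generate(query, mode, k)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-request logging for the demo


def start_server(host: str = "127.0.0.1", port: int = 0) -> ThreadingHTTPServer:
    """Serve on a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer((host, port), SimulatedSearchHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def search(base_url: str, query: str, mode: str = "relevant", k: int = 3) -> list[str]:
    """Client side: what a rollout might call instead of a real search API."""
    data = json.dumps({"query": query, "mode": mode, "k": k}).encode()
    req = Request(base_url, data=data, headers={"Content-Type": "application/json"})
    with urlopen(req, timeout=5) as resp:  # supplying data makes this a POST
        return json.loads(resp.read())["documents"]


if __name__ == "__main__":
    srv = start_server()
    url = f"http://127.0.0.1:{srv.server_address[1]}/"
    print(search(url, "What is curriculum learning?", mode="irrelevant", k=2))
    srv.shutdown()
    srv.server_close()
```

Keeping the search call behind a small HTTP interface means the training loop does not need to know whether documents come from a simulation LLM or a real engine; only the server side changes.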