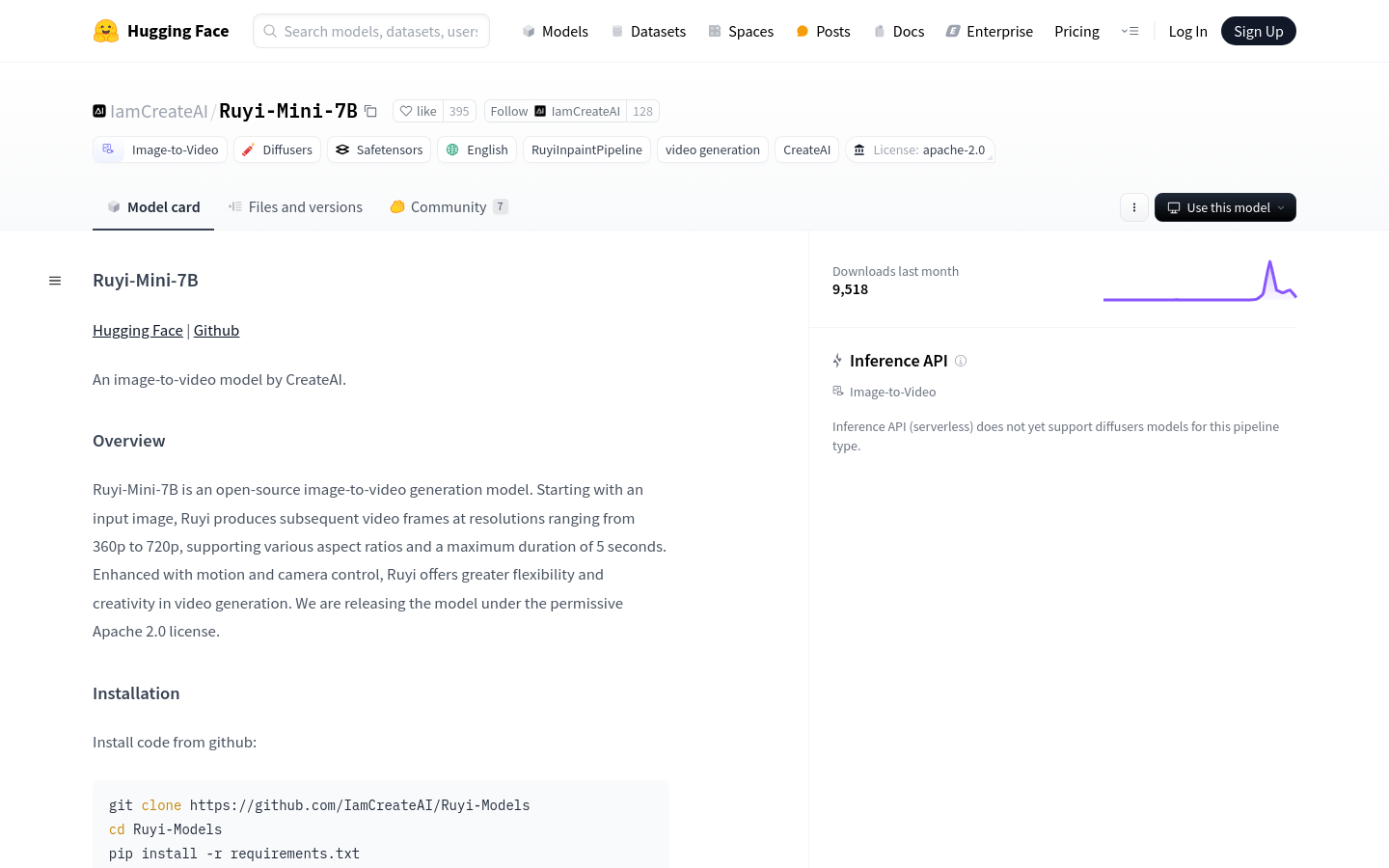

Ruyi-Mini-7B is an open-source image-to-video generation model developed by the CreateAI team. It has about 7.1 billion parameters and generates videos of up to 5 seconds at resolutions from 360p to 720p from a single input image. The model supports multiple aspect ratios and offers motion and camera control, giving users more flexibility and creative freedom. It is released under the Apache 2.0 license, so users can freely use and modify it.

Target audience:

The target audience includes video creators, animators, game developers, and researchers. Ruyi-Mini-7B suits them because it generates dynamic video content from static images, which can be used to create animations, game backgrounds, ads, and other multimedia content.

Example usage scenarios:

- Video creators use Ruyi-Mini-7B to generate animated backgrounds from static images.

- Game developers use the model to create dynamic backgrounds for game characters.

- Advertisers use the model to generate attractive advertising video content.

Product Features:

- Video compression and decompression: The Causal VAE module reduces spatial resolution to 1/8 and temporal resolution to 1/4.

- Video generation with 3D full attention: The Diffusion Transformer module uses 2D Normalized-RoPE for the spatial dimensions and sin-cos position embeddings for the temporal dimension, and is trained with DDPM.

- Semantic feature extraction: A CLIP model extracts semantic features from the input image to guide the entire video generation process.

- Multi-resolution support: The model is able to handle video generation from 360p to 720p resolution.

- Motion and camera control: Adjustable motion and camera settings add flexibility and creative freedom to video generation.

- Open-source license: Released under the Apache 2.0 license; users can freely use and modify the model.

- Efficient video generation: The model generates video clips of up to 5 seconds.
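
The compression factors in the feature list can be made concrete with a little arithmetic. The sketch below estimates the latent grid the VAE would hand to the Diffusion Transformer for a given clip. It is an illustration only: the 24 fps frame rate, the round-up behaviour, and the helper names `latent_shape` and `num_positions` are assumptions, not details taken from the Ruyi code base, and any patchification inside the transformer would reduce the position count further.

```python
import math

# Compression factors stated in the feature list.
SPATIAL_FACTOR = 8   # spatial resolution reduced to 1/8
TEMPORAL_FACTOR = 4  # temporal resolution reduced to 1/4


def latent_shape(num_frames, height, width):
    """Estimate the (frames, height, width) of the compressed latent video.

    Assumption: sizes that do not divide evenly are rounded up. The real
    model may pad or treat the first frame differently.
    """
    return (
        math.ceil(num_frames / TEMPORAL_FACTOR),
        math.ceil(height / SPATIAL_FACTOR),
        math.ceil(width / SPATIAL_FACTOR),
    )


def num_positions(shape):
    """Number of latent positions that 3D full attention would span."""
    frames, height, width = shape
    return frames * height * width


# Assumed example: a 5-second clip at 24 fps (120 frames).
FRAMES = 5 * 24

for label, (h, w) in {"360p": (360, 640), "720p": (720, 1280)}.items():
    shape = latent_shape(FRAMES, h, w)
    print(label, shape, num_positions(shape))
```

Under these assumptions, moving from 360p to 720p quadruples the number of latent positions, which gives a rough sense of why resolution has a large effect on the cost of full attention.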

Usage tutorial:

1. Clone the Ruyi-Models repository from GitHub.

2. Enter the Ruyi-Models directory.

3. Use pip to install the dependencies listed in requirements.txt.

4. Use python3 predict_i2v.py to run the model.

5. Alternatively, run the model using the ComfyUI wrapper included in the GitHub repository.

6. Provide an input image and wait for the model to generate the video.

7. Adjust motion and camera control parameters as needed to optimize video effects.

8. Export the generated video content.
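
Steps 1 to 4 above can be sketched as a small Python script. This is a minimal outline under stated assumptions: `REPO_URL` is a placeholder that must be replaced with the actual GitHub URL of the Ruyi-Models repository, and the helper names `build_setup_commands` and `run_steps` are hypothetical. The script defaults to a dry run that only prints the commands, since cloning the repository and installing dependencies changes the local environment.

```python
import subprocess

# Placeholder (assumption): replace with the real Ruyi-Models GitHub URL.
REPO_URL = "https://github.com/<owner>/Ruyi-Models.git"
REPO_DIR = "Ruyi-Models"


def build_setup_commands(repo_url=REPO_URL, repo_dir=REPO_DIR):
    """Return (command, working_directory) pairs for tutorial steps 1-4."""
    return [
        # Step 1: clone the code base.
        (["git", "clone", repo_url, repo_dir], None),
        # Steps 2-3: inside the repository, install the dependencies.
        (["pip", "install", "-r", "requirements.txt"], repo_dir),
        # Step 4: run the image-to-video script.
        (["python3", "predict_i2v.py"], repo_dir),
    ]


def run_steps(steps, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for command, cwd in steps:
        print(f"[{cwd or '.'}] {' '.join(command)}")
        if not dry_run:
            # check=True stops at the first failing command.
            subprocess.run(command, cwd=cwd, check=True)


# Dry run: shows what would be executed without touching the system.
run_steps(build_setup_commands())
```

Calling `run_steps(build_setup_commands(), dry_run=False)` would execute the commands for real, assuming `git`, `pip`, and `python3` are available on the PATH.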